So now that asynchronous processing is on the horizon, there will need to be some conventions around dealing with it.

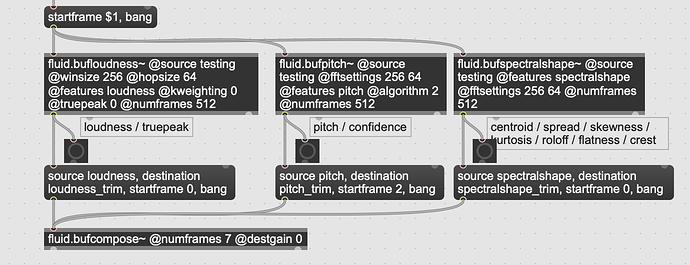

For example, things like this:

…won’t work in a “traditionally Max-y” manner, in that I can’t count on the left-most bang to let me know the process has finished.

So ok, I can use something like buddy instead, and when all three (or N) things have banged, I am good to go, right?

BUT once there’s a FIFO system, that is no longer the case. If I query two of these things in a row, the fluid.bufloudness~ process might finish both queries before fluid.bufspectralshape~ has finished its first. So one would need to keep track of the outputs and stick them back together in the order that they arrived, so that each result ends up with the others from the same query.
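To make that bookkeeping concrete, here is a minimal sketch in Python (not Max, purely as an illustration). It assumes each process returns its own results in the order they were requested, and it keeps one queue per process; a complete set is only released once every queue has something waiting. The class name `OrderedJoin` and the source labels are made up for the example.

```python
from collections import deque


class OrderedJoin:
    """Re-pairs results from independent processes, one FIFO queue per source.

    Assumption: each source delivers its own results in request order.
    """

    def __init__(self, sources):
        # One queue per thing being queried (hypothetical labels).
        self.queues = {name: deque() for name in sources}

    def push(self, source, result):
        """Store a finished result; return a full set if every source has one waiting."""
        self.queues[source].append(result)
        if all(self.queues.values()):  # an empty deque is falsy
            return {name: q.popleft() for name, q in self.queues.items()}
        return None


join = OrderedJoin(["loudness", "shape"])
first = join.push("loudness", "L1")   # shape not done yet
second = join.push("loudness", "L2")  # loudness finishes its second query first
third = join.push("shape", "S1")      # now query 1 is complete
fourth = join.push("shape", "S2")     # and query 2
```

Here `first` and `second` come back empty, while `third` pairs `L1` with `S1` and `fourth` pairs `L2` with `S2`, even though loudness finished both queries before shape finished one. It is basically a fancier buddy with memory.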

Not a massive problem by any stretch, but one that will become the “norm” when querying for multiple descriptor types, along with the corresponding plumbing around that (i.e. fluid.bufcompose~-ing into a temp analysis buffer and then fluid.bufstats~-ing that).

In talking to @weefuzzy about this yesterday, he suggested some kind of abstraction for this, but I can see it being problematic for an arbitrary number of stacked queries, as well as an arbitrary number of things one is querying (which almost seems like you’d need a FIFO per stack).

Now in my example above, I can just make the whole process serial and create a single processing chain. It would mean needing 3x fluid.bufcompose~, which isn’t the end of the world, but it’s not difficult to imagine a version of that patch with many more steps and/or things it is querying for, making a serial approach unfeasible. It also becomes tricky in a FIFO context if some things take longer than others.

/////////////////////////////////////////////////////////////////////////////////////////////////////////////

There are also parallel concerns for objects that output buffers in a FIFO context, in that there needs to be a system to manage that as well.

Creating a dynamically growing buffer as the output of a FIFO stack is a problem, as it can grow without limit. At the same time, if you do want all those outputs, you can create the buffers yourself and stack your FIFO requests with different [numDestOutBuffPlaces](http://discourse.flucoma.org/t/naming-numing-conventions/202) arguments.
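As a rough sketch of that second option (again in Python, just to illustrate), each queued request could be handed a destination from a fixed pool of pre-made buffers, so memory stays bounded. The function name and the buffer names are hypothetical, and reusing the pool round-robin is my assumption, not something any object currently does.

```python
from itertools import cycle


def assign_destinations(requests, dest_buffers):
    """Pair each queued request with a destination buffer name.

    The pool is fixed in size and reused round-robin, so once there are more
    requests than buffers, older outputs get overwritten.
    """
    return list(zip(requests, cycle(dest_buffers)))


plan = assign_destinations(["q1", "q2", "q3"], ["out.0", "out.1"])
```

With two buffers and three requests, `q3` lands back in `out.0`, so you would size the pool to however many outputs you actually need to keep around.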

A different problem, but definitely interrelated with the (a)synchronicity problem that is in the more immediate future.