very much looking forward to your findings

me too to be honest. There are so many variables I am slightly overwhelmed. I know that the last time I tried orchidea I did not get good results on anything that was not as pitch-driven as both your examples, but I’ll give it a try once I can compare like with like.

the voice examples from yesterday’s video are not so much pitch driven

Hi @tutschku,

Turns out I needed the Jasch objects as well. There’s still one object I can’t find though, called [recursivefolder]. Is this one of yours?

Edit: Ah, ok. It’s one of Alex’s. Sorry for the noise

actually the distances are using our KDtree

Hi @tremblap, sorry for the delay,

I haven’t coded the descriptors in orchidea myself, but I’m sure they do not rely on ircamdescriptors: the code is very lightweight and I think Carmine rewrote them from scratch. I think Carmine is going to release the sources on GitHub at some point, so you will also be able to double-check directly.

As for the distances in dada, if you refer to dada.distances, indeed that is multidimensional scaling using the simpmatrix library, as @jamesbradbury said. If you mean instead how we measure the distances for knn, for the time being it’s just euclidean distance, but you can assign weights to each dimension to compensate for differences of magnitude (e.g. knn 4 [400 6000] [1. 0.1]). That’s all manual, and I really wouldn’t take my knn handling as a reference, it’s very naively coded.
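To make the weighting idea concrete, here is a minimal Python sketch of a weighted euclidean nearest-neighbour lookup. It assumes the weights simply scale each dimension before the distance is taken; I have not checked how dada applies them internally, so treat `weighted_knn` and the sample points as hypothetical.

```python
import numpy as np

def weighted_knn(query, points, weights, k):
    """Return (indices, distances) of the k rows of `points` nearest to `query`.

    Assumption: each dimension is multiplied by its weight before the
    Euclidean distance is computed. dada's actual code may differ.
    """
    query = np.asarray(query, dtype=float)
    points = np.asarray(points, dtype=float)
    weights = np.asarray(weights, dtype=float)
    diff = (points - query) * weights          # scale each dimension
    dist = np.sqrt((diff ** 2).sum(axis=1))    # Euclidean distance per row
    order = np.argsort(dist, kind="stable")[:k]
    return order, dist[order]

# Hypothetical 2-D points: one small-range and one large-range dimension.
points = [[410.0, 6000.0], [400.0, 6500.0], [700.0, 6010.0], [395.0, 5900.0]]
idx, dist = weighted_knn([400.0, 6000.0], points, [1.0, 0.1], k=2)
print(idx, dist)
```

With the second dimension scaled by 0.1, a difference of 100 there counts the same as a difference of 10 in the first dimension, which is the "compensate for differences of magnitude" part.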

@danieleghisi thanks for the update! GitHub is good news for us (@weefuzzy and @groma). And the distances @tutschku used were in effect the flucoma ones on all settings of MFCCs, so that simplified comparisons.

The idea of weighting by scaling is a good one indeed, I will get thinking about that too.

Not to send you down a rabbit hole, but Dan Ellis’s stuff can also be found here:

Written in Java in 2007-8. All the DSP was Dan’s.

So, to kind of bump this here, as it’s starting to overlap with some of the explorations I’ve been doing in the time travel thread.

Based on some super helpful input from @jamesbradbury I was starting to do some more qualitative testing where I would play the target, and listen back to the nearest matches, very similar to what @tutschku was doing in this thread.

I went through and pulled out the bit from @tutschku’s patch above that does the Orchidea MFCCs and painfully parsed it into a fluid.dataset~ (I’m not a big bach user, so this involved pasting things into a spreadsheet app, and dumping things through two colls etc…) and made a short video comparing the results.

So this is using my tiny analysis windows where for FluCoMa stuff the FFT settings are 256 64 512 and for Orchidea it’s just 256 64 as I don’t know how to do oversampling there. I also don’t know how it handles hops or anything like that, so that might be something to explore/unpack, but even with that, the match accuracy is on par with what @tutschku was getting. So I’m inclined to believe most of that magic is happening in how the MFCCs are being processed, rather than which frames I’m grabbing.

So here’s the video:

I mention it in the video, but this is showing kind of ‘best case’ performance from the FluCoMa stuff. I’ve done loads of different permutations (as can be seen in the other thread), but all of them are roughly in this ballpark. For this video I’m using 20 MFCCs, ignoring the first, then adding loudness and pitch. I then take mean, std, min, max for everything, including one derivative. And for the Orchidea I’m taking only the 20 MFCCs, nothing else.
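For anyone following along outside Max, the "mean, std, min, max, including one derivative" step looks roughly like this in Python. This is only a sketch: `summarize` is a made-up name, the 21-column layout (19 MFCCs plus one loudness and one pitch value) is an assumption, and the frames are random placeholders rather than real analysis data.

```python
import numpy as np

def summarize(frames, num_derivs=1):
    """Flatten per-frame descriptors into one vector of summary statistics.

    frames: array of shape (n_frames, n_descriptors).
    For the frames and each successive first difference, the mean, std,
    min and max of every descriptor are appended in that order.
    """
    current = np.asarray(frames, dtype=float)
    if current.shape[0] <= num_derivs:
        raise ValueError("need more frames than derivatives")
    parts = []
    for _ in range(num_derivs + 1):
        parts.extend([current.mean(axis=0), current.std(axis=0),
                      current.min(axis=0), current.max(axis=0)])
        current = np.diff(current, axis=0)   # frame-to-frame difference
    return np.concatenate(parts)

# Placeholder data: 7 frames x 21 descriptors (assumed layout, not measured).
rng = np.random.default_rng(0)
frames = rng.normal(size=(7, 21))
vec = summarize(frames, num_derivs=1)
print(vec.shape)   # 21 descriptors x 4 stats x 2 (raw + one derivative)
```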

So yeah, the results are really striking. Particularly in the overall clustering. This is a testing set of around 800 hits, so big, but not gigantic, and you can really hear how well it handles all 4 neighbors. The FluCoMa stuff does pretty well (though not great) for the nearest match, and maybe two, but the overall “space” that it’s finding isn’t as well defined as with the Orchidea MFCCs.

I’m wondering now if part of the “energy weighted average” that @weefuzzy was wondering about is like what @b.hackbarth has been doing in AudioGuide, where the MFCCs are just weighted by loudness in each frame (perhaps also combined with some standardization across the whole set, rather than per-sample), which is producing better overall results here.
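Here is a rough Python sketch of what I mean, in case it helps the discussion. It is one possible reading of "energy weighted average", not a description of what Orchidea or AudioGuide actually do: the dB-to-linear conversion, the function names and the toy numbers are all assumptions.

```python
import numpy as np

def loudness_weighted_mean(mfcc_frames, loudness_db):
    """Average MFCC frames, letting louder frames count for more.

    Assumption: per-frame loudness in dB is converted to linear amplitude
    and used directly as the weight of that frame.
    """
    mfcc_frames = np.asarray(mfcc_frames, dtype=float)
    w = 10.0 ** (np.asarray(loudness_db, dtype=float) / 20.0)
    return (mfcc_frames * w[:, None]).sum(axis=0) / w.sum()

def standardize_across_set(features):
    """Z-score each column using statistics from the whole set of samples."""
    features = np.asarray(features, dtype=float)
    mean = features.mean(axis=0)
    std = features.std(axis=0)
    std[std == 0] = 1.0            # leave constant columns unscaled
    return (features - mean) / std

# Toy sample: two frames, the second one 20 dB quieter than the first.
point = loudness_weighted_mean([[1.0, 2.0], [3.0, 4.0]], [0.0, -20.0])
print(point)
```

The quiet frame barely moves the average, so the attack of a hit would dominate the summary instead of the decaying tail.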

Either way, wanted to bump this as I’m now seeing how accurate/useful this is here, and how to best try to leverage whatever “secret sauce” is going on underneath to get similar results in the fluid.verse~.

To play devil’s advocate, I think they just fail differently and the consistency is about the same. I think it would be worth knowing what the implementation of MFCC is (DCT type, liftering, weighting). Did we ever find that out, @tutschku @tremblap?
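For context on why those details matter, here is a small Python sketch of two of the knobs: an orthonormal DCT-II applied to log mel-band energies, followed by sinusoidal liftering. These are textbook formulas, not a claim about what either Orchidea or FluCoMa does, and the band energies and the lifter length `L=22` are arbitrary placeholders.

```python
import numpy as np

def dct_ii(x, n_out, orthonormal=True):
    """DCT-II of a 1-D array, keeping the first n_out coefficients."""
    x = np.asarray(x, dtype=float)
    n = x.shape[0]
    k = np.arange(n_out)[:, None]
    m = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * m + 1) / (2 * n))
    out = basis @ x
    if orthonormal:
        scale = np.full(n_out, np.sqrt(2.0 / n))
        scale[0] = np.sqrt(1.0 / n)
        out = out * scale
    return out

def lifter(coeffs, L=22):
    """Sinusoidal liftering: rescales coefficients by 1 + (L/2) sin(pi n / L)."""
    coeffs = np.asarray(coeffs, dtype=float)
    n = np.arange(coeffs.shape[0])
    return coeffs * (1.0 + (L / 2.0) * np.sin(np.pi * n / L))

# Placeholder log mel-band energies (8 bands), not real analysis output.
log_mel = np.log(np.array([1.0, 2.0, 4.0, 8.0, 8.0, 4.0, 2.0, 1.0]))
plain = dct_ii(log_mel, n_out=5)
print(plain)
print(lifter(plain))
```

Since the scaling per coefficient changes with the normalisation and the lifter, two "20 MFCC" analyses can weight the dimensions very differently once they go into a euclidean distance.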

To my ear the Orchidea ones were way more consistent in terms of overall timbre and loudness. The ‘worst’ example from that one was way better than the worst from the native flucoma ones, and same goes for the other end of the spectrum.

More so, the ballpark was a bit more consistent. Some of the flucoma ones sounded like a collection of 5 random samples.

It’s also worth noting that this was just MFCCs, no (additional) stats, proper loudness measurement, pitch, derivatives, etc… So presumably, things could improve from there.

I think @weefuzzy was doing some casual spelunking (as per his info above), but from memory there was nothing conclusive. Or do you mean on the native flucoma versions?

Oh, as a more concrete comparison, I ran the flucoma version through the same exact process as I did the Orchidea ones. In the video example above the flucoma tools were going through the real-time onset detection process, which I’ve tried to get exactly the same for offline and real-time, but there is a biquad~ step in the real-time version which isn’t present in the offline, hence sometimes finding a nearest match which isn’t itself (due to it being offset a sample or two).

So if I do what I’ve done with the Orchidea example and load numbers, and then query based on only numbers, I always get the single point returned back (as one would expect), but the overall matching and clustering isn’t much improved. By the time we get to the 3rd and 4th nearest, it gets very outlier-y.

(I can post a video, but it’s basically the same overall matching as the first video, except the nearest match is always the same now)

@tutschku and/or @rodrigo.constanzo do you have a short-ish dataset to share so that I can compare weighted and unweighted descriptors? @tutschku you had an example in your films in which the weighting was quite clearly improving things, but isn’t that part of a huge dataset?

Here’s one of the ones I was using in the video. It’s 800 files, but all 100ms long, so short and sweet.

(they are loads of different hits, of different types and preparations, on the drums, so they can be chained for qualitative assessment à la the “Hans method”).

DrumHits.zip (5.5 MB)

@rodrigo.constanzo Do you have a particular one that misbehaves?

@tutschku what stats did you use in your patch again?

You can see/hear some examples in the video, but it looks like I made note of these in my patches.

edit:

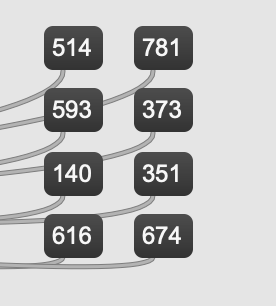

These are in alphabetical order, as you get when loading all 800 into a polybuffer, so 400 from the testing set and 400 from the training set (in that order). So #514 = 114 from the training set, etc…
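If it helps when reading the numbers in the image, the index conversion is just this (a tiny Python sketch; `locate` is a made-up helper and it assumes 1-based numbering in both the combined list and each set):

```python
def locate(index, testing_size=400, training_size=400):
    """Map a 1-based index in the combined list to (set name, index in set).

    Assumes the testing files come first, followed by the training files.
    """
    if not 1 <= index <= testing_size + training_size:
        raise ValueError("index out of range")
    if index <= testing_size:
        return ("testing", index)
    return ("training", index - testing_size)

print(locate(514))
```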

I have the patch and data that @tutschku sent me on my other machine, I’ll pass it to you tomorrow.

Quick question: are you looking in the whole 800 for the match, or just the 400 in the training set?