hi @tedmoore,

what i want to try soon is to automate the process a bit more: let the thing run, then check many models and their error plots at the end

let's say you have a dataset and specify a few different model params (layers, activations etc.) and hyperparams to train with,

then train models on all possibilities, plot each outcome and save all models

this is where wandb can be useful (i have no idea how to bring this into flucomaland, but as a general working pipeline idea it may be interesting)
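as a rough illustration of the "train on all possibilities" loop described above, here is a minimal python sketch (not flucoma or wandb code). the param names, the values in `grid` and the `train_and_eval` placeholder are all made up for the example; the only real piece is `itertools.product`, which enumerates every combination.

```python
import itertools

# hypothetical search space: names and values are only examples
grid = {
    "hidden_layers": [[8], [16, 8]],
    "activation": ["relu", "tanh"],
    "learn_rate": [0.01, 0.001],
}

def all_configs(grid):
    """yield one dict per combination of the values in grid"""
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))

def train_and_eval(config):
    """placeholder (assumption): train one model, return its test-loss curve"""
    return []

results = []
for i, config in enumerate(all_configs(grid)):
    losses = train_and_eval(config)
    # this is the point where each model would be saved and its curve plotted
    results.append({"id": i, "config": config, "losses": losses})

print(len(results))  # 2 * 2 * 2 = 8 combinations
```

keeping the config next to each loss curve in `results` is what makes it possible to compare everything at the end instead of watching each run.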

to do this in a loop/routine, the exit has to work, so early stopping needs to be considered. i did some tests using @tremblap's ideas on this from above…

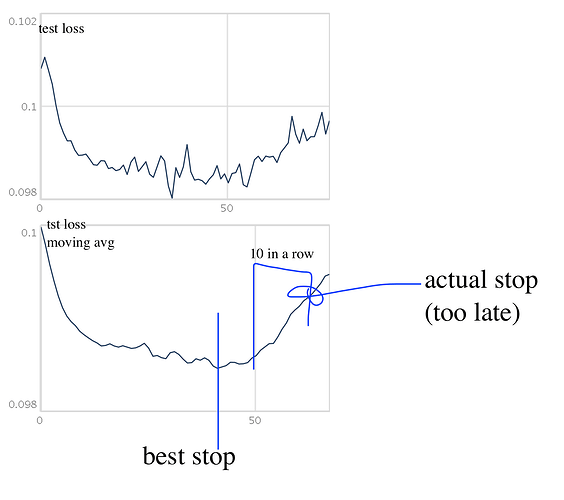

i also tried to build the algo in sclang (add every test loss to a list of size 10, and once it has gone up 10 times in a row → kill), which hardly ever stops (because of the noisy test loss),

smoothing it (using a moving avg) can make it stop too early (local min) or too late (see the attached pic as an example…)
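to make the two behaviours concrete, here is a small python sketch (not the sclang version, and not a flucoma feature). `strict_stop` is my reading of the "10 in a row going up" rule. `patience_stop` is a different, swapped-in rule: stop when there has been no new best loss for `patience` epochs. the toy loss curve, the window size and all function names are assumptions for the example.

```python
def strict_stop(losses, run=10):
    """epoch where the loss has gone up `run` times in a row, else None"""
    ups = 0
    for i in range(1, len(losses)):
        ups = ups + 1 if losses[i] > losses[i - 1] else 0
        if ups >= run:
            return i
    return None

def moving_avg(losses, window=5):
    """trailing moving average; early entries average over what exists"""
    out = []
    for i in range(len(losses)):
        chunk = losses[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out

def patience_stop(losses, patience=10, min_delta=0.0):
    """(stop_epoch, best_epoch) once no new best for `patience` epochs, else None"""
    best = float("inf")
    best_epoch = 0
    for i, loss in enumerate(losses):
        if loss < best - min_delta:
            best, best_epoch = loss, i
        elif i - best_epoch >= patience:
            return i, best_epoch
    return None

# toy test loss: falls until epoch 30, then rises, with alternating noise on top
losses = [abs(i - 30) / 30 + (0.2 if i % 2 else 0.0) for i in range(80)]

print(strict_stop(losses))                # None: the noise breaks every run of "ups"
print(patience_stop(losses))              # (40, 30)
print(patience_stop(moving_avg(losses)))  # (42, 32)
```

on this toy curve the strict rule never fires, while the patience rule stops 10 epochs after the minimum. the smoothed version finds its best epoch later than the raw one because the trailing average lags. none of this fixes the local-min problem: a small `patience` can still stop inside a temporary bump, so it is a trade-off rather than a solution.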

well…

if i come up with something useful i'll let you know! thanks