FWIW, @a.harker’s skepticism (as @jamesbradbury put it) resonates with me, especially when you watch the interpolation happen and it looks like interpolation (the sliders all just move in the direction one would expect). Which isn’t to say that MLPs are not useful; they’re super useful for doing this. And there are differences from interpolators that are really beneficial, like being able to train a neural network differently to create a more or less “bumpy” or “wiggly” mapping through a space (more or less fit or overfit), one that is more or less precise to the input points you gave it.

Regarding the input controls and output controls

I think there’s a fourth here, which is the sonic space: very different synth controls could produce very similar sounds, so there’s another mapping translation going on.

input controls → model parameters → synth controls → sonic space
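To make that last arrow concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the two-oscillator "synth", the preset values, and the use of a spectral centroid as a stand-in for the sonic space. It only shows that two presets can be far apart in synth-control space while landing on the same point in a (very crude) sonic space.

```python
import math

def spectral_centroid(controls):
    """Toy 'sonic space' descriptor: amplitude-weighted mean frequency in Hz."""
    (f1, a1), (f2, a2) = controls
    return (f1 * a1 + f2 * a2) / (a1 + a2)

def control_distance(c1, c2):
    """Euclidean distance between two presets in raw synth-control space."""
    flat1 = [value for osc in c1 for value in osc]
    flat2 = [value for osc in c2 for value in osc]
    return math.dist(flat1, flat2)

# Hypothetical two-oscillator synth: ((freq_hz, amp), (freq_hz, amp)).
preset_a = ((220.0, 0.8), (880.0, 0.2))
preset_b = ((880.0, 0.2), (220.0, 0.8))  # same partials, oscillators swapped

control_gap = control_distance(preset_a, preset_b)
sonic_gap = abs(spectral_centroid(preset_a) - spectral_centroid(preset_b))

print(f"distance in synth-control space: {control_gap:.1f}")
print(f"distance in toy sonic space:     {sonic_gap:.1f}")
```

A real synth and a real set of descriptors would be messier, but the same mismatch between control distance and sonic distance is what the fourth stage is about.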

As @tutschku was saying: big auditory changes for who? The audience probably perceives a noisy synth as a noisy synth, probably not that different. And how small is a motion? One centimeter on the iPad? One inch? I’m making a silly comparison here, but the point is they are all small compared to having to grab and turn 50 knobs at once, per:

If one is trying to make small motions create big sound differences, there isn’t much difference between moving something a few millimeters and just hitting (and jumping to) a different preset button. With an MLP of course one can wiggle their way to that preset, but not much if the distance to it is really small!

//========================

One thing I keep coming back to in this conversation is the organization of the control space (in Hans’ example, where in the 2D space should the dot go for the straight line vs. the bell shape?). We keep, essentially, just making this up: this sound maybe goes over here, this one maybe goes over there… but what if there was a way to intelligently organize that space as part of the training? Probably to do this:

But also, it could be any optimization. This would essentially be dimensionality reduction, so an autoencoder could be a good fit.

Because the relationship between input controls and output sounds needs to be learned by the performer with each mapping anyway, there’s no real skin off my back in putting this optimization in, since the learning process will be the same.

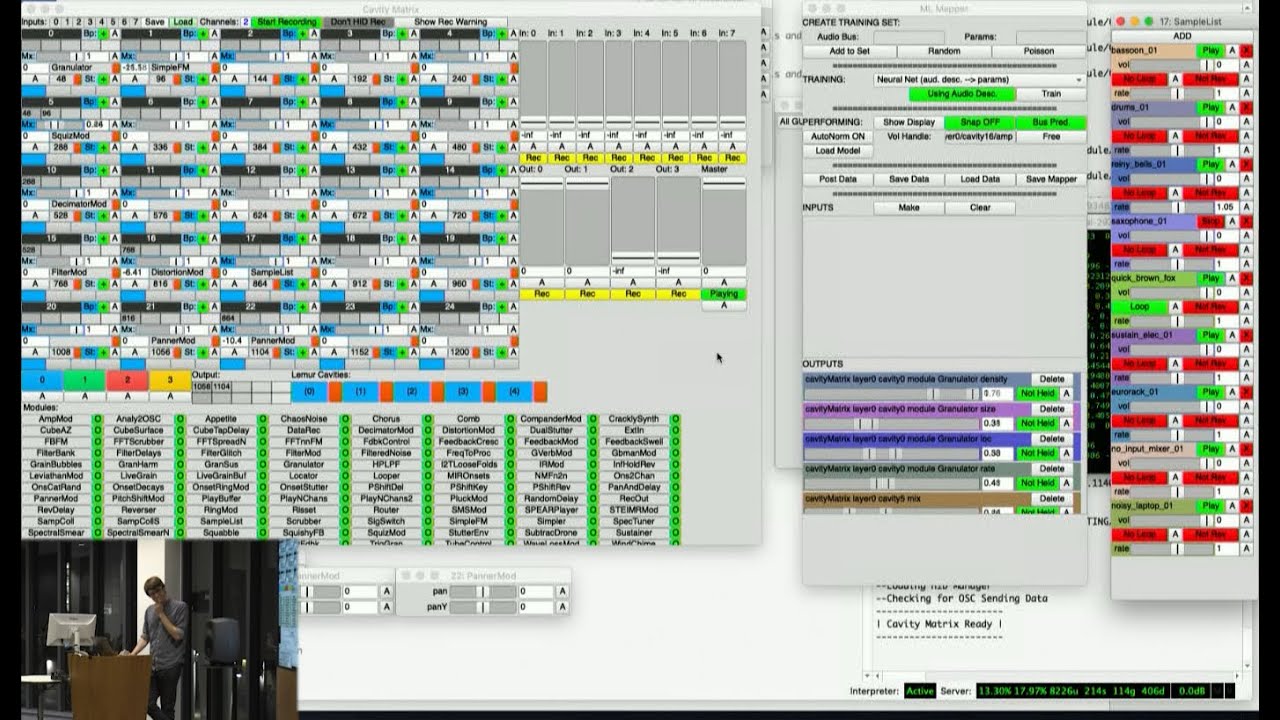

This is what I was getting at here by taking auditory descriptors of the synthesis parameters that I wanted in my dataset and then using dimensionality reduction on those auditory descriptors to try to organize my control input space less arbitrarily:

It wouldn’t always be necessary or interesting to do this, but there are situations where I think something in this direction would make the whole idea of MLP mapping / interpolating more powerful/intuitive/useful/robust.
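For anyone who wants to poke at the idea, here is a minimal sketch in plain Python. It is not the actual patch or dataset: the preset names and descriptor values are made up, and PCA (done here by power iteration) is swapped in as the simplest dimensionality reduction. An autoencoder or any other reduction could take its place. The point is just that the 2D position of each preset comes from its auditory descriptors rather than from me placing dots by hand.

```python
import math

def standardize(rows):
    """Scale each descriptor column to zero mean and unit variance."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1)) or 1.0
            for j in range(d)]
    return [[(r[j] - means[j]) / stds[j] for j in range(d)] for r in rows]

def top_components(data, k=2, iters=200):
    """First k principal directions of centered data, via power iteration."""
    n, d = len(data), len(data[0])
    cov = [[sum(r[i] * r[j] for r in data) / (n - 1) for j in range(d)]
           for i in range(d)]
    components = []
    for _component in range(k):
        v = [float(j + 1) for j in range(d)]  # fixed, arbitrary starting vector
        for _step in range(iters):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w)) or 1.0
            v = [x / norm for x in w]
        eigval = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d))
                     for i in range(d))
        components.append(v)
        # Deflate: remove the direction just found so the next pass finds the next one.
        cov = [[cov[i][j] - eigval * v[i] * v[j] for j in range(d)]
               for i in range(d)]
    return components

def layout_2d(descriptors):
    """Map each preset name to a 2D point derived from its descriptors."""
    names = list(descriptors)
    data = standardize([descriptors[name] for name in names])
    pcs = top_components(data, k=2)
    return {name: tuple(sum(x * c for x, c in zip(row, pc)) for pc in pcs)
            for name, row in zip(names, data)}

# Made-up descriptors per preset: [spectral centroid (Hz), loudness (dB), noisiness 0..1].
descriptors = {
    "dark_drone_1": [300.0, -20.0, 0.10],
    "dark_drone_2": [320.0, -19.0, 0.12],
    "bright_noise_1": [4000.0, -10.0, 0.90],
    "bright_noise_2": [4200.0, -11.0, 0.85],
}

layout = layout_2d(descriptors)
for name, (x, y) in layout.items():
    print(f"{name}: x={x:.2f}, y={y:.2f}")
```

The resulting points could then be rescaled to whatever range the 2D controller uses and serve as the input side of the MLP training pairs, with the synth parameters of each preset as the output side. Presets that sound alike end up near each other, so the control space is organized by sound instead of by guesswork.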