Hey there.

Someone here already patched a dynamic way to change the descriptors for the x and y axis of a corpus like it can be done in catart… and is willing to share some ideas, recommendations or even max-patches?

Peace, johannes

Hey there.

Someone here already patched a dynamic way to change the descriptors for the x and y axis of a corpus like it can be done in catart… and is willing to share some ideas, recommendations or even max-patches?

Peace, johannes

not already patched, no, but if you do so, a single large analysis (like catart) that is pruned (via datasetquery) to as smaller one on which a kdtree is built is the way to go. I think that you might find something like this under the hood of @rodrigo.constanzo fantastic sp stuff…

Thanks pierre, yes i already peaked into @rodrigo.constanzo library but didn’t really knew what i actually looking for. I expect a gui that let me select the descriptors… ![]() .

.

maybe a good time to look at the whole analysis flucoma stuff in more detail.

There’s nothing that lets you explicitly do that. I have personally never found that approach too useful I have to say, but you can just take any amount of descriptors you want to analyze then stuff them into a single dataset. Then from there use fluid.datasetquery~ to “poke” out the two you want, and then fit a fluid.kdtree~ with that.

The syntax for fluid.datasetquery~ is a little terse to do something like that, but once you get it going, it’s easy to repeat with different columns or whatever.

There is a lot of fun there to have… but it is not premade so you have to take many decisions. Now if you want CataRT you should use it, it is a fantastic tool to learn about all of this.

If you want to re-code it, then you can start from the patch of the beginner tutorial (2d browser) and add all the descriptors you want to use, then select them. There are a few ways to do that. I have found fun to put them in different datasets (one for spectral shape’s 7 dimensions, one for pitch and loudless, one for chroma (12d) one for mfcc (13 or 20). Then you can use datasetquery to merge the dimensions you want.

This allows you to have whatever resolution, stats, time bundling, that you care about for each descriptor. @rodrigo.constanzo and @balintlaczko (and myself) have used very different combinations of these…

If any of what I write here sounds cryptic, then let’s continue to talk. If you tell us what CataRT is not doing for you already, then it will be easier to know how we can help.

Happy coding!

thanks for the feedback guys, it keeps me going…

can you please elaborate what you mean with merge and how it effect resolution etc of the descriptors…

regarding catart.

sure, it is very nice, I learned about it at a ftm workshop back in 2011. but now that I was off the patches for about 8 years and unlock the catart patches, Its just a hall full of spaghettis to me, where its hard to find a start and end… so not the best ground to expand on it or include own ideas, at least for me.

as @brookt I had the idea to play multiple neighbours at once to get polyphony from the corpus. This has great potential in my eyes, because it can create complex sounds and strongly depends on the the audiomaterial and the chosen descriptors. something I definitely have to experiment more with. iam just about to patch this in combination with framelib, wich I currently learning as well.

I am also would like to try:

Playing a high number of neighbours (with each grain looping ) and using their distance to the query to control amplitude and a lowpass filter. so neighbours further away from query have a lower level and sounds more dull/ more lowpass filtered. not sure about the params yet. but using the distance to change neighbour sounds is definitely a thing I will follow.

@rodrigo.constanzo you might be right about the switching descriptor stuff… when you find a matching pair or even more descriptors that fit your corpus you probably stick to it. but as I don’t know which descriptor is good for certain folders of my sound library yet I would like to try them and see how they effect the corpus. specially for the multi neighbour stuff I guess this could be quite interesting…

looking forward to learn more about flucoma.

What I think @tremblap is saying here is to analyze each “type” of thing you want, with whatever settings/time frame, you want (e.g. maybe bigger FFT sizes for MFCC stuff, but smaller ones for loudness, etc…), then when you want to pick what to use, you pick out the individual dimensions and “merge” them into a single dataset to fit with. (e.g. “centroid” from the spectral shape thing, “pitch” from the pitch thing, then create a new dataset that has two dimensions, “centroid” and “pitch”).

Totally, though it’s more the point that 2 dimensions is generally not very useful in and of itself. Even if you do something like loudness/centroid (which would be my two choices), that’s still quite thin in terms of spreading data out. Once you get into pitch, or other spectral descriptors, or MFCCs even, you’re looking at 20-30+ dimensions before you even start getting too deep. For example, in SP-Tools, for the classification stuff I use MFCCs+stats which gives me 104 dimensions. And that’s only MFCCs and some related statistics (mean, min/max, and standard deviation).

I hear you - I had to learn it when I worked with it and modify it for Sandbox#3 - 6 months and the trauma was the source of getting the FluCoMa project going (slowly ![]() ) - but I learnt SO MUCH form the content and the coding of CataRT!

) - but I learnt SO MUCH form the content and the coding of CataRT!

Indeed @rodrigo.constanzo knows me well… but considering his major contribution to the project, that wouldn’t be surprising ![]()

Ah damn… i messed things up here.

I mean thin in terms of amount of information you can see. Loudness, on its own, is very crude, and centroid, on its own, is very crude.

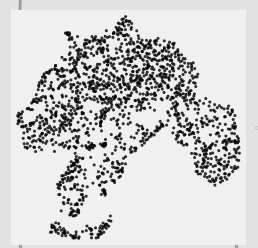

If you specifically want a 2d interface to control with a mouse or something you can also just use dimensionality reduction. I find that to be quite useful in that you can analyze whatever you want (loudness/centroid/pitch/mfccs/etc…) then send it to fluid.umap~ and have it return a 2d space.

Like so:

Neither dimension here is a specific descriptor, but it’s a very browsable space, since things are organized in a manner that makes sense (according to the algorithm).

In terms of what’s better, it depends on what you want to analyze for. I still personally like “perceptual” ones like loudness/pitch/centroid/flatness (which is what the map above is from), but MFCCs are also great for “timbre” more broadly.

@rodrigo.constanzo

Ah i see. Thanks for explaining!