I’ll start by clarifying my intention, as the whole kmeans/kdtree/knearestdist/etc… business is just me trying to find my way to it. I don’t have any specific interest in computing means etc…

The most standard use case here would be to train ~50 hits on the center of the drum and label them classA, then train ~50 hits near the edge of the drum and label them classB. I’ve got this part working well and fairly refined.

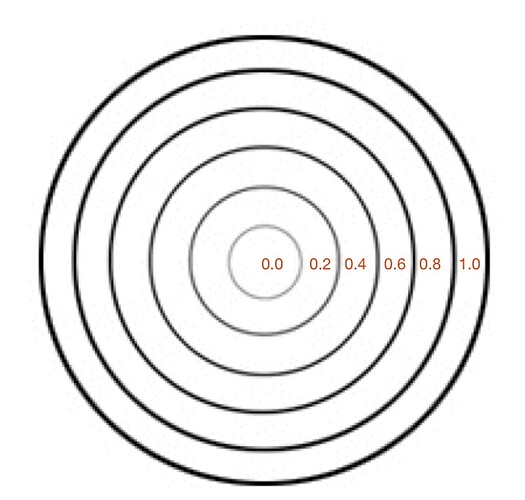

Now what I want to do is hit the drum anywhere and have it effectively give me the “radius” from the center where I’ve hit it, by telling me whether I’m closer to classA or classB.

In a really simplistic version, hitting dead center on the drum would tell me that the hit was 100% classA and report a value of 0.0, and hitting near the edge would tell me it was 100% classB and return 1.0.
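A minimal sketch of that 0.0 to 1.0 value, assuming each hit has already been reduced to a fixed-length descriptor vector and that each class is summarised by the mean of its training hits. `centroid` and `position_between` are hypothetical helper names for illustration, not FluCoMa objects, and the distance ratio is just one possible mapping:

```python
import math


def centroid(points):
    """Mean of a list of equal-length descriptor vectors."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def position_between(x, centroid_a, centroid_b):
    """0.0 when x sits on centroid A, 1.0 on centroid B, 0.5 when equidistant.

    Uses the relative distance d_a / (d_a + d_b). This need not map linearly
    onto the physical radius on the drum; it only says which class is closer
    and by how much, relatively.
    """
    d_a = math.dist(x, centroid_a)
    d_b = math.dist(x, centroid_b)
    if d_a + d_b == 0.0:  # both centroids coincide with x: no preference
        return 0.5
    return d_a / (d_a + d_b)


# Hypothetical 2-D descriptors standing in for the ~50 training hits per class.
center_hits = [[0.1, 0.2], [0.0, 0.1]]
edge_hits = [[1.0, 0.9], [0.9, 1.1]]
a, b = centroid(center_hits), centroid(edge_hits)
print(position_between(a, a, b))  # 0.0: a hit right on the classA centroid
```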

Or, more generally, a single value reporting where on the spectrum between classA and classB a new sound falls.

This is what I would like to do, as a core use case.
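Another way to get a single number (again only a sketch, with the hypothetical helper `project_between`) is to project the new hit onto the straight line joining the two class centroids in descriptor space and clamp the result to the 0.0 to 1.0 range. Unlike a distance ratio, this ignores any variation perpendicular to the classA/classB axis, which may or may not be what you want:

```python
def project_between(x, centroid_a, centroid_b):
    """Position of x along the A-to-B axis, clamped to [0.0, 1.0].

    t = ((x - a) . (b - a)) / |b - a|^2; t is 0 at A and 1 at B, and any
    component of x perpendicular to the axis is ignored.
    """
    ab = [b - a for a, b in zip(centroid_a, centroid_b)]
    ax = [xi - a for a, xi in zip(centroid_a, x)]
    denom = sum(v * v for v in ab)
    if denom == 0.0:
        raise ValueError("centroids coincide: no axis to project onto")
    t = sum(u * v for u, v in zip(ax, ab)) / denom
    return min(1.0, max(0.0, t))


print(project_between([0.25, 5.0], [0.0, 0.0], [1.0, 0.0]))  # 0.25
```

Hits beyond either centroid simply report 0.0 or 1.0.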

/////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

I initially thought I could do this directly in the classifier, as it has a KDTree and is already computing distances etc…, so if there’s a way to skip all the kmeans/means/kdtree stuff, I’m all for it!

For the life of me, however, I couldn’t figure out how to do that.

(Having had a quick look through @jacob.hart’s article on @a.harker’s piece, it looks like @a.harker made a build of the object that outputs the “hotness” of each class, so I guess the native/vanilla build doesn’t do that. That would be useful, as it looks like exactly what I’m looking for here!)
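I don’t know exactly what that build reports, but a common way for a kNN classifier to express per-class confidence is the share of the k nearest training points that carry each label. A brute-force sketch (a KD-tree only speeds up the neighbour search; the result is the same), with `knn_class_weights` as a hypothetical name rather than anything in FluCoMa:

```python
import math
from collections import Counter


def knn_class_weights(x, points, labels, k=5):
    """Share of the k nearest training points carrying each label.

    Brute-force search over all points; returns {label: share}, with the
    shares summing to 1. Labels absent from the neighbourhood are omitted.
    """
    if not points:
        raise ValueError("no training points")
    ranked = sorted(zip(points, labels), key=lambda pl: math.dist(x, pl[0]))
    nearest = ranked[:k]
    counts = Counter(label for _, label in nearest)
    return {label: n / len(nearest) for label, n in counts.items()}


# Hypothetical 2-D descriptors for two centre hits and two edge hits.
pts = [[0.0, 0.0], [0.1, 0.0], [1.0, 0.0], [1.1, 0.0]]
labels = ["classA", "classA", "classB", "classB"]
print(knn_class_weights([0.0, 0.0], pts, labels, k=3))  # classA gets 2/3, classB 1/3
```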

/////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

> There is. kmeans dump has that for you, as you said, but you could probably enter your own class in there and “train” for one iteration, and it will optimise the centroids. It is worth a try.

Not sure I’m following this part.

In my case I know exactly what the classes should be, so I wouldn’t want fluid.kmeans~ to mess with that at all. I have 50 hits of classA and 50 hits of classB, which I label and train up in fluid.knnclassifier~ (and fluid.mlpclassifier~).
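For what it’s worth, with the labels already known, the centroid that one k-means update step would compute for each cluster is just the mean of the hits carrying that label, so it can be computed directly without letting k-means re-assign anything. A sketch with a hypothetical `class_centroids` helper (whether fluid.kmeans~ re-assigns points before updating in that single iteration is something I haven’t checked):

```python
def class_centroids(points, labels):
    """Per-class mean of the training points.

    With cluster assignments fixed to the known labels, this is exactly the
    k-means update step; no re-assignment happens, so no hit changes class.
    """
    sums, counts = {}, {}
    for p, label in zip(points, labels):
        if label not in sums:
            sums[label] = [0.0] * len(p)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], p)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


cents = class_centroids([[0.0, 0.0], [2.0, 2.0], [10.0, 0.0]], ["classA", "classA", "classB"])
print(cents)
```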

> Dear all,
>
> Someone asked on our GitHub if it was possible for FluidMLPClassifier to provide its confidence vector. The answer is that there is another way: since the MLP code is the same under the hood, one can load a model made in the classifier in the regressor and get access to the output vector, in effect a histogram of how much each class is likely to be the right one. Here it is in Max first - I am using the demo code from the classifier tutorial. I will code the SC version later from the …
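If the regressor does hand back one value per class, those values may not sum to exactly 1. A minimal sketch of turning such a vector into proportions, assuming one value per class in the same order as the classifier’s labels (both assumptions, not verified against the FluCoMa objects); `normalise_confidences` is a hypothetical name:

```python
def normalise_confidences(activations):
    """Clip negatives to 0 and rescale so the per-class values sum to 1."""
    clipped = [max(0.0, a) for a in activations]
    total = sum(clipped)
    if total == 0.0:
        # No class has any activation: report every class as equally likely.
        return [1.0 / len(clipped)] * len(clipped)
    return [a / total for a in clipped]


# Hypothetical output vector for labels ordered [classA, classB].
conf = normalise_confidences([0.2, 0.6])
position = conf[1]  # share of classB, usable as the 0.0 to 1.0 value
```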

Hmm, I think this may do the trick here. Would this also work with fluid.knnclassifier~ + fluid.knnregressor~? At the moment I’m training a knn classifier as the default since it’s a single-click operation and works “fine”, with the MLP one being optional.

edit:

It doesn’t seem to do the trick. Or if it does, I can’t figure out which level of the fluid.knnclassifier~ dump to send to fluid.knnregressor~ without getting an invalid JSON format error.
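An alternative that sidesteps the dump format entirely: train a kNN regressor on the same hits, with the target 0.0 for every classA hit and 1.0 for every classB hit. Assuming the regressor predicts by averaging the targets of the nearest neighbours (worth confirming in the fluid.knnregressor~ reference), its output is the share of classB among the neighbours, i.e. the 0.0 to 1.0 value from the start of the post. A plain-Python sketch with the hypothetical `knn_regress`, including an optional inverse-distance weighting:

```python
import math


def knn_regress(x, points, targets, k=5, weighted=False):
    """Average the targets of the k training points nearest to x.

    With targets 0.0 (classA) and 1.0 (classB) the plain average is the
    share of classB among the neighbours. With weighted=True each neighbour
    counts in proportion to 1 / distance.
    """
    if not points:
        raise ValueError("no training points")
    ranked = sorted(zip(points, targets), key=lambda pt: math.dist(x, pt[0]))[:k]
    if not weighted:
        return sum(t for _, t in ranked) / len(ranked)
    weights = []
    for p, t in ranked:
        d = math.dist(x, p)
        if d == 0.0:
            return t  # x coincides with a training hit: use its target directly
        weights.append(1.0 / d)
    return sum(w * t for w, (_, t) in zip(weights, ranked)) / sum(weights)


# Hypothetical 1-D descriptors: two centre hits (0.0) and two edge hits (1.0).
hits = [[0.0], [0.1], [1.0], [1.1]]
targets = [0.0, 0.0, 1.0, 1.0]
print(knn_regress([0.55], hits, targets, k=4))  # 0.5: average of all four targets
```

With two classes this is the same information as the per-class shares, just collapsed into the single number the core use case asks for.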

/////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

I imagine this ship has long since sailed, but out of curiosity, why don’t the classifiers spit out this info? I always assumed they did; I just hadn’t gotten around to making use of it yet. And I would never have thought to do what that thread suggests (dump the classifier into a regressor to get the confidence…).