I was thinking today that it would be cool to record a bunch of (percussive) stuff in a buffer and then have NMF pre-split it. Every time I’ve done this with more percussive material, I end up getting multiple versions of the same thing (as in, the same onsets, but somewhat deconstructed). I don’t know if this is an intrinsic characteristic of the algorithm, where it tries to keep things horizontally coherent rather than vertically.
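For reference, here is the textbook formulation that is probably underneath: Lee & Seung's multiplicative updates for the KL divergence. This is an assumption; the object may use a different cost or extra terms. Each frame (column of $V$) is approximated as a nonnegative mix of the $K$ spectral templates in $W$ (the "vertical" part), weighted by that frame's column of $H$ (the rows of $H$ are the "horizontal", over-time part). The cost is a plain sum over time-frequency bins, so nothing in it rewards keeping a single onset inside one component. "Seeding" a factor means choosing its starting values; "fixing" it means skipping its update.

$$
V \approx WH,\qquad V\in\mathbb{R}_{\ge 0}^{F\times T},\quad W\in\mathbb{R}_{\ge 0}^{F\times K},\quad H\in\mathbb{R}_{\ge 0}^{K\times T}
$$

$$
D(V\,\|\,WH)=\sum_{f,t}\left(V_{ft}\log\frac{V_{ft}}{(WH)_{ft}}-V_{ft}+(WH)_{ft}\right)
$$

$$
H \leftarrow H\odot\frac{W^{\top}\left(V\oslash WH\right)}{W^{\top}\mathbf{1}},\qquad
W \leftarrow W\odot\frac{\left(V\oslash WH\right)H^{\top}}{\mathbf{1}H^{\top}}
$$

Here $\odot$, $\oslash$ and the fractions are elementwise, and $\mathbf{1}$ is an $F\times T$ matrix of ones.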

Now, I can seed bases, and perhaps give it a bunch of specific sounds/registers (e.g. crotale, kick, rim, etc.), but I feel like I might get better results if I were able to fix the activations instead, so that things with specific contours would get clumped together.
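As a rough illustration of what "fixing the activations" means mathematically, here is a minimal NumPy sketch of KL-NMF where either factor can be seeded, fixed, or both. The names `nmf_kl`, `fix_W` and `fix_H` are hypothetical and are not the NMF object's actual parameters. With `H` held fixed, the updates only ever reshape the spectral templates to fit the contours you gave them.

```python
import numpy as np

def nmf_kl(V, K, n_iter=200, W_init=None, H_init=None,
           fix_W=False, fix_H=False, seed=0, eps=1e-12):
    """KL-divergence NMF with Lee & Seung multiplicative updates.

    V      : (F, T) nonnegative magnitude spectrogram
    W_init : optional (F, K) seed bases (spectral templates)
    H_init : optional (K, T) seed activations (time contours)
    fix_W / fix_H : if True, that factor is never updated (hypothetical flags)
    """
    rng = np.random.default_rng(seed)
    F, T = V.shape
    W = rng.random((F, K)) + eps if W_init is None else W_init.astype(float).copy()
    H = rng.random((K, T)) + eps if H_init is None else H_init.astype(float).copy()
    ones = np.ones_like(V)
    for _ in range(n_iter):
        if not fix_H:
            WH = W @ H + eps
            H *= (W.T @ (V / WH)) / (W.T @ ones + eps)
        if not fix_W:
            WH = W @ H + eps
            W *= ((V / WH) @ H.T) / (ones @ H.T + eps)
    return W, H

def kl_div(V, WH, eps=1e-12):
    """Generalised KL divergence D(V || WH)."""
    return np.sum(V * np.log((V + eps) / (WH + eps)) - V + WH)

# Usage: learn bases while the (known) activations stay fixed.
rng = np.random.default_rng(1)
W_true = rng.random((64, 3))
H_true = rng.random((3, 100))
V = W_true @ H_true                     # synthetic stand-in for a spectrogram
W_1, _ = nmf_kl(V, 3, n_iter=1, H_init=H_true, fix_H=True)
W_200, H = nmf_kl(V, 3, n_iter=200, H_init=H_true, fix_H=True)
print(kl_div(V, W_200 @ H) < kl_div(V, W_1 @ H_true))  # divergence drops with more iterations
```

Seeding without fixing is the same call with `fix_H=False`: the contours are only a starting point and can drift.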

Don’t know if I’m overlooking something simple/silly, but I never seem to get satisfying results from NMF unless the material has multiple/simultaneous/consistent drone-y things going on (like the examples in the helpfile).


So like:

Here are my exemplars; now go and split this new stuff up according to them? Would the goal be to unmix some mixed audio?

J

Yeah, the same kind of thing as you normally do/get with NMF (feed it “mixed” audio, and get back streams of it), but privileging temporal synchronicity above other things.

In my case I’m thinking of doing this via activations, but maybe this is an algorithmic parameter that can also be massaged.

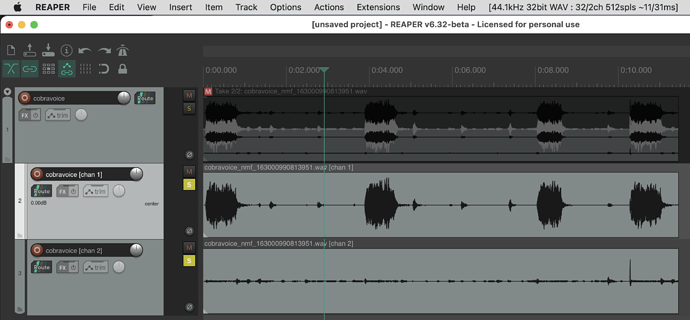

This obviously isn’t percussion, but whenever I’ve NMF’d this file (or things like it), I end up with multiple flavors of the same thing. I never get the different “voices” separated, even though they (perceptually) are quite separate and distinct.

cobravoice.aiff.zip (725.0 KB)

If it’s only short onsets, then NMF is probably surplus to requirements, and some generic slicing would break the bits apart (though it would still be good if they were clumped into separate tracks, e.g. all crotale hits together, all snare hits together, etc.). But I’m also picturing situations with layers of stuff, including percussion and/or long and short percussive sounds mixed together.
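For the "only short onsets" case, here is a sketch of slicing and then clumping without NMF at all. This is a swapped-in technique, not what the NMF object does. It detects onsets with half-wave-rectified spectral flux and labels each slice by whichever exemplar spectrum it is closest to (cosine similarity), which is roughly the "here are my exemplars" idea. `threshold`, `min_gap` and the exemplar matrix are made-up assumptions; in practice the exemplars might be mean spectra of a few hand-picked hits.

```python
import numpy as np

def spectral_flux_onsets(S, threshold=0.5, min_gap=4):
    """Frames where half-wave-rectified spectral flux peaks above threshold."""
    flux = np.maximum(S[:, 1:] - S[:, :-1], 0.0).sum(axis=0)
    flux = np.concatenate([[S[:, 0].sum()], flux])  # frame 0 rises from silence
    flux /= flux.max() + 1e-12
    onsets = []
    for t in range(len(flux)):
        left = flux[t - 1] if t > 0 else 0.0
        right = flux[t + 1] if t + 1 < len(flux) else 0.0
        if flux[t] >= threshold and flux[t] >= left and flux[t] > right:
            if not onsets or t - onsets[-1] >= min_gap:
                onsets.append(t)
    return onsets

def assign_slices(S, onsets, exemplars):
    """Label each slice with the index of its most similar exemplar (cosine).

    exemplars : (F, K) matrix, one exemplar spectrum per column (hypothetical).
    """
    bounds = list(onsets) + [S.shape[1]]
    E = exemplars / (np.linalg.norm(exemplars, axis=0, keepdims=True) + 1e-12)
    labels = []
    for a, b in zip(bounds[:-1], bounds[1:]):
        m = S[:, a:b].mean(axis=1)            # mean spectrum of the slice
        m = m / (np.linalg.norm(m) + 1e-12)
        labels.append(int(np.argmax(E.T @ m)))
    return labels

# Usage on a toy 4-bin "spectrogram": low hit, high hit, low hit.
S = np.zeros((4, 30))
env = np.exp(-np.arange(10) / 2.0)
for t0, b in [(0, 0), (10, 3), (20, 0)]:
    S[b, t0:t0 + 10] += env
exemplars = np.array([[1, 0], [0, 0], [0, 0], [0, 1]], dtype=float)
onsets = spectral_flux_onsets(S)
labels = assign_slices(S, onsets, exemplars)
```

Each label would then pick which "track" a slice is written into.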

I get pretty good separation of distinct musical and sonic elements:

I’m not too sure what you mean by temporal synchronicity either. Do you mean that you want the NMF algorithm to try and keep things together which happen at the same time, regardless of their spectral basis?

By temporal synchronicity I mean that something like the loud scream would be an “object” that gets shoved into a single track (which it looks like you get there). It’s been ages since I tried it on this audio specifically, but I remember always getting things like the screams and the female voice split across multiple tracks, with similar behavior for any material that had sharp onsets.
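One post-processing workaround for "the same event split across several tracks" is to ask for more components than you want, then re-merge the ones whose activations rise and fall together. This is not a feature of the NMF object; it is a minimal sketch, assuming you already have `W` and `H` from a decomposition. The 0.8 correlation threshold is arbitrary, and the greedy grouping depends on component order.

```python
import numpy as np

def group_by_activation(H, threshold=0.8):
    """Greedily group NMF components whose activation rows are strongly correlated."""
    K = H.shape[0]
    C = np.corrcoef(H)                 # (K, K) correlation between activation rows
    groups, assigned = [], set()
    for i in range(K):
        if i in assigned:
            continue
        # always keep i in its own group, even if its row is constant (NaN correlation)
        group = [i] + [j for j in range(i + 1, K)
                       if j not in assigned and C[i, j] >= threshold]
        assigned.update(group)
        groups.append(group)
    return groups

def merged_spectrograms(W, H, groups):
    """One magnitude spectrogram per group: the sum of its components' W_k H_k."""
    return [W[:, g] @ H[g, :] for g in groups]

# Usage: components 0 and 1 fire together (a "split" event), component 2 does not.
a = np.array([1, 0, 0, 1, 0, 0], dtype=float)
b = np.array([0, 1, 0, 0, 1, 0], dtype=float)
H = np.vstack([a, 2 * a, b])
W = np.random.default_rng(0).random((16, 3))
groups = group_by_activation(H)
tracks = merged_spectrograms(W, H, groups)
```

Since every component lands in exactly one group, the merged tracks still sum to the full reconstruction `W @ H`.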

Maybe this is a more practical example:

Constanzo-PreparedSnare.wav.zip (3.9 MB)

If I ask for two components with this, it’s essentially a highpass’d and lowpass’d version of the same audio.
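One possible reason for the highpass/lowpass split is a simplification, not a diagnosis of this particular file. If two sounds only ever occur together with the same envelope, that part of the spectrogram is rank 1, so the activations give NMF nothing to tell them apart by. Any division of the summed spectrum into two nonnegative parts then reconstructs it exactly, and a low/high split scores as well as the "perceptual" one. The toy data below is synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)
F, T = 8, 50
h = rng.random(T)           # one shared activation contour
w_a = rng.random(F)         # spectrum of "sound A"
w_b = rng.random(F)         # spectrum of "sound B"

# Both sounds always hit together with the same envelope.
V = np.outer(w_a, h) + np.outer(w_b, h)

# The "true" two-component factorization...
W_true = np.column_stack([w_a, w_b])
H_true = np.vstack([h, h])

# ...and a "lowpass / highpass" one: split the summed spectrum at bin F // 2.
w_sum = w_a + w_b
w_low = np.where(np.arange(F) < F // 2, w_sum, 0.0)
w_high = w_sum - w_low
W_split = np.column_stack([w_low, w_high])

print(np.linalg.matrix_rank(V))          # 1
print(np.allclose(W_true @ H_true, V))   # True
print(np.allclose(W_split @ H_true, V))  # True
```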

I see what you mean about the LPF/HPF effect. I’m struggling to understand how you want to nudge the process, though. Can you elaborate?

Again, I don’t know what’s happening inside the algorithm, but I picture doing the same thing with activations as you can currently do with bases. So you seed a few contours (in this case, different surface hits, which would have different profiles) and those would then get grouped together in the decomposition.
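A minimal sketch of seeding activations with onset-shaped contours, assuming you already know (or have sliced out) roughly where each kind of hit occurs. The onset lists, the exponential-decay shape, and the helper names `seed_activations` and `nmf_kl_seeded_H` are all hypothetical. A useful property of the multiplicative KL updates is that an entry of `H` that starts at exactly zero stays zero. Seeding zeros outside each component's hits therefore limits *when* it can be active, while its spectrum in `W` is learned freely.

```python
import numpy as np

def seed_activations(onsets_per_component, n_frames, decay_frames=8.0, span=24):
    """(K, T) seed activations: a decaying contour at each onset, zero elsewhere."""
    K = len(onsets_per_component)
    H = np.zeros((K, n_frames))
    env = np.exp(-np.arange(span) / decay_frames)
    for k, onsets in enumerate(onsets_per_component):
        for t0 in onsets:
            end = min(t0 + span, n_frames)
            H[k, t0:end] = np.maximum(H[k, t0:end], env[: end - t0])
    return H

def nmf_kl_seeded_H(V, H0, n_iter=200, seed=0, eps=1e-12):
    """KL-NMF starting from seed activations H0; W starts random."""
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], H0.shape[0])) + eps
    H = H0.astype(float).copy()
    ones = np.ones_like(V)
    for _ in range(n_iter):
        WH = W @ H + eps
        H *= (W.T @ (V / WH)) / (W.T @ ones + eps)   # zeros in H stay zero
        WH = W @ H + eps
        W *= ((V / WH) @ H.T) / (ones @ H.T + eps)
    return W, H

# Usage with hypothetical hit frames for two surface types.
rng = np.random.default_rng(2)
onsets = [[0, 40], [20, 60]]
H0 = seed_activations(onsets, n_frames=80)
V = rng.random((32, 2)) @ H0      # synthetic stand-in for a real spectrogram
W, H = nmf_kl_seeded_H(V, H0)
```

The contour values themselves can still change; only the zero pattern is locked in. To lock the contours completely, skip the `H` update.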

Even if it’s not perfect, it would hopefully keep more percussive-ish hits “intact”, as I tend to just get filter-like dissections of short sounds rather than a proper decomposition.

Maybe there’s also some extra secret sauce that those “automatic stem” processes do for percussion/drums to keep things coherent?
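It's unclear what the automatic stem tools actually do. A classic non-learned trick for drums, though, is median-filtering harmonic/percussive separation (FitzGerald, DAFx 2010). Sustained partials look like horizontal lines in a spectrogram and hits look like vertical lines, so median-filtering along time keeps the former and along frequency keeps the latter. Below is a NumPy-only sketch of the soft masks. The filter sizes and `power` are arbitrary choices, and applying the masks to a complex STFT and inverting is not shown.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def median_filter_1d(S, size, axis):
    """Median-filter a 2-D array along one axis (edge-padded, odd size)."""
    pad = [(0, 0), (0, 0)]
    pad[axis] = (size // 2, size // 2)
    padded = np.pad(S, pad, mode="edge")
    windows = sliding_window_view(padded, size, axis=axis)  # window dim appended last
    return np.median(windows, axis=-1)

def hpss_masks(S, time_size=17, freq_size=17, power=2.0, eps=1e-12):
    """Soft harmonic / percussive masks for a magnitude spectrogram S of shape (F, T)."""
    harm = median_filter_1d(S, time_size, axis=1)   # smooth along time: sustained partials
    perc = median_filter_1d(S, freq_size, axis=0)   # smooth along frequency: broadband hits
    h, p = harm ** power, perc ** power
    return h / (h + p + eps), p / (h + p + eps)

# Usage: one sustained partial (row 10) and one broadband hit (column 20).
S = np.zeros((33, 33))
S[10, :] = 1.0
S[:, 20] = 1.0
H_mask, P_mask = hpss_masks(S)
```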