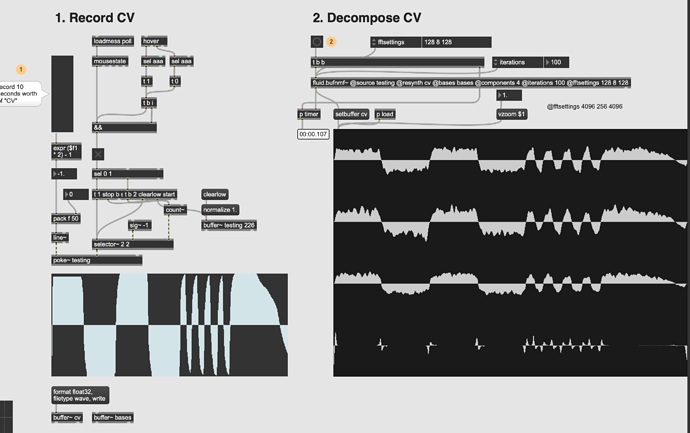

I tried much smaller fft sizes (@fftsettings 128 8 128) and that works well in terms of latency, but we’re back to square one with regard to gesture separation:

So I understand that you can’t process something bigger than the fft size, since it doesn’t “exist”. But I get lost with the other stuff.

If @fftsettings 4096 256 4096 works well for fluid.bufnmf~ at 32x downsampling in terms of decomposition, but is too slow (3s) for real-time use, is it just a matter of finding the middle ground there?
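For what it's worth, the 3s figure seems to line up with a rough back-of-envelope calculation: the time it takes to fill one analysis window at the downsampled rate. The sketch below assumes an original sample rate of 44100 Hz (my assumption, adjust if yours differs) and ignores hop size and any processing overhead; `window_latency_seconds` is just a hypothetical helper name, not anything from the FluCoMa objects.

```python
def window_latency_seconds(window_size, sample_rate=44100.0, downsample_factor=32):
    """Time needed to fill one analysis window, in seconds.

    sample_rate is an assumed original rate (44100 Hz); downsample_factor
    is the 32x downsampling mentioned above.
    """
    # After downsampling, each sample covers downsample_factor original samples.
    effective_rate = sample_rate / downsample_factor
    return window_size / effective_rate


# Compare candidate window sizes between the two settings tried so far.
for window_size in (128, 256, 512, 1024, 2048, 4096):
    latency = window_latency_seconds(window_size)
    print(f"{window_size:5d} samples -> {latency:.3f} s")
```

Under those assumptions a 4096 window comes out at roughly 2.97 s and a 128 window at roughly 0.09 s, so the sizes in between would give a feel for where a usable middle ground might sit, latency-wise at least.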

Or can I do something where I decompose at one fft size, and then fluid.bufcompose~ a downsampled @bases buffer to feed into fluid.nmffilter~? Or would that not work?