So as I mentioned in this other thread, I’ve been working on a project with Jordie Shier where we’ve been training up a load of NNs to predict longer time series from shorter ones. Basically a much more fleshed-out version of some of the stuff I’ve been doing in SP-Tools, where you take a 256-sample window and predict the descriptors for a 4410-sample window.

Long story short, in order for this to work really well, Jordie ended up computing these in Python rather than in Max, and when plotting the loss to see how well the different approaches did, it was clear that overfitting was a big issue with the Max approach.

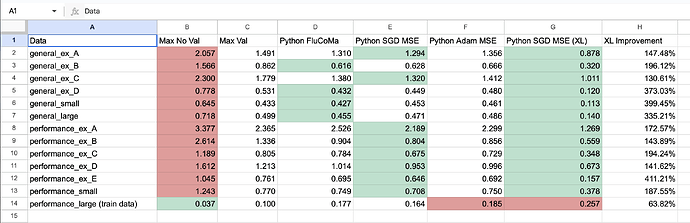

Here’s a spreadsheet showing what’s up:

So the rows are different audio files and example subsets, and the columns are different engines training up NNs. B and C are fluid.mlpregressor~ and D is Jordie’s Python-based implementation of the same code (the results match, but not 100% numerically). The biggest difference is in column G, where it’s a night-and-day difference.

So all of that is to say that being able to parse the validation loss while iterating through a training set would be game changing on the viability of training up a chonky NN in Max (without making a single large run and pinwheeling Max).

I guess having an option to keep the partitioning across iter calls would be great too, but that just ends up doing some cross-validation which isn’t ideal, but also not terrible. Particularly when compared to the danger of overfitting.
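To make the idea a bit more concrete, here’s a rough sketch in plain Python of the workflow I have in mind: split the data into training and validation sets once, train in short chunks, and check the validation loss after every chunk so you can stop (and keep the best weights) when it stops improving. This is not how fluid.mlpregressor~ works internally and it isn’t Jordie’s code. The model is a toy one-weight regressor standing in for a real NN, and every name here (`split_once`, `train_chunk`, `fit_with_validation`, `patience`) is made up for illustration.

```python
import random

def make_data(n, rng):
    # Toy regression data (y = 2x + 1 plus noise), a stand-in for real descriptor pairs.
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.1)) for x in xs]

def split_once(data, validation_fraction, rng):
    # Partition a single time, so every later chunk is scored on the same held-out points.
    shuffled = data[:]
    rng.shuffle(shuffled)
    n_val = int(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]

def mse(params, data):
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train_chunk(params, train, epochs, lr):
    # One short training run: plain per-sample gradient descent on the squared error.
    w, b = params
    for _ in range(epochs):
        for x, y in train:
            err = w * x + b - y
            w -= lr * err * x
            b -= lr * err
    return (w, b)

def fit_with_validation(data, chunks=20, epochs_per_chunk=5, lr=0.05,
                        validation_fraction=0.2, patience=3, seed=0):
    rng = random.Random(seed)
    train, val = split_once(data, validation_fraction, rng)
    params = (0.0, 0.0)
    best_params, best_val, stale = params, float("inf"), 0
    history = []
    for _ in range(chunks):
        params = train_chunk(params, train, epochs_per_chunk, lr)
        train_loss, val_loss = mse(params, train), mse(params, val)
        history.append((train_loss, val_loss))
        if val_loss < best_val:
            # Validation loss improved: remember these weights.
            best_params, best_val, stale = params, val_loss, 0
        else:
            # No improvement: stop once this has happened `patience` chunks in a row.
            stale += 1
            if stale >= patience:
                break
    return best_params, best_val, history

if __name__ == "__main__":
    data = make_data(200, random.Random(1))
    best_params, best_val, history = fit_with_validation(data)
    for i, (train_loss, val_loss) in enumerate(history):
        print(f"chunk {i}: train loss {train_loss:.4f}, validation loss {val_loss:.4f}")
    print("best validation loss:", round(best_val, 4))
```

The important bit is that the split happens once, outside the loop. If the data got re-partitioned on every chunk, the “validation” points would end up being trained on in other chunks, so the number would no longer tell you much about overfitting.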

I don’t know enough about how the guts of the algorithm are running, but presumably it can just dump a message prepended with validationloss or maybe just loss, in addition to the fit message it sends out.

edit: Also re-read this useful thread where some of this is unpacked, but in the context of SC where I guess getting the validation loss in an ongoing manner is possible.