Ah right. So they would be independent bodies, and you would just have the analysis chain fork down the relevant path (rather than mapping them all onto the same “space”). So say a 3D plot represented “all the possible sounds” I can make with my snare, and I wanted to place each cluster/dataset/corpus onto that space itself:

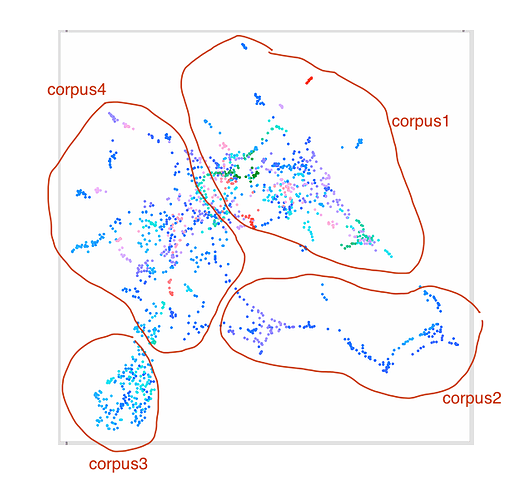

So presuming this plot was my “snare space” and I have 4 clusters/datasets and want to do this:

This is a bit more complicated as each space isn’t a square/rectangle, and could potentially have some funky shapes in the middle.

I guess this kind of thinking or approach could play very nicely with what @tutschku suggested at the last plenary with regard to “drawing a lasso around an area in a dataset”, except instead of deleting the points (which I think was his desire/point) you could select them and “copy/paste” them into a separate dataset (or cluster).
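
To make the lasso idea a bit more concrete, here is a rough Python sketch of “select and copy” rather than “select and delete”. It is only an illustration under a few assumptions: the lasso is drawn on a 2D view of the plot (so each entry has a 2D position), the dataset is a plain dictionary of identifier to position, and the names `point_in_polygon`, `lasso_copy` and `snare_space` are made up for the example rather than taken from any existing toolkit. The inside/outside check is a standard ray-casting test, which copes with non-rectangular (and concave) shapes.

```python
def point_in_polygon(point, polygon):
    """Ray-casting test: True if the 2D point lies inside the polygon."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point matter.
        if (y1 > y) != (y2 > y):
            # x-coordinate where this edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def lasso_copy(dataset, lasso):
    """Return a new dataset with the entries inside the lasso (the source is untouched)."""
    return {key: pos for key, pos in dataset.items() if point_in_polygon(pos, lasso)}


# Hypothetical data: identifiers mapped to 2D positions in the plotted view.
snare_space = {
    "hit-0": (0.2, 0.2),
    "hit-1": (0.5, 0.5),
    "hit-2": (0.9, 0.1),
    "hit-3": (0.5, 0.8),
}

# A hand-drawn, non-rectangular lasso given as a list of vertices.
lasso = [(0.1, 0.1), (0.6, 0.1), (0.6, 0.6), (0.3, 0.9), (0.1, 0.6)]

selection = lasso_copy(snare_space, lasso)
print(sorted(selection))
```

Because the selection is a copy, the original “snare space” keeps all of its points, and the same point could end up in more than one lassoed dataset if the lassos overlap.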