OK, I thought about it overnight. I'll try to make it as clear as I can, and feel free to ask for clarification. I have not implemented this in real time yet, but it has been on my to-do list for some time now, as you know… since the grant app, actually.

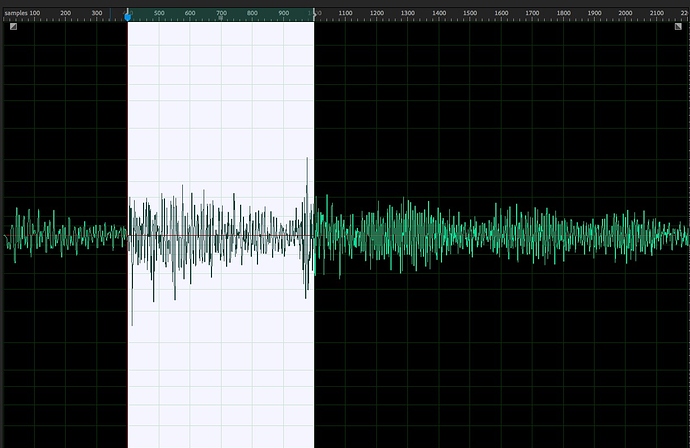

This is a picture of your metal hit from the other thread. I've divided time into 3 windows instead of 4; the principle is the same. For now, we'll consider the start to be perfectly caught, so the attack sits at time 0 in the timeline. I will also assume that the best-match answer is immediate for now, because we just need to understand the 3 parallel queries going on. Here is what happens in my model:

- at time 0 (all numbers in samples), an attack is detected, so I take a snapshot of the address where we are in my circular buffer. Let's call it 0 for now as agreed. I send this number through 3 delays: 400, 1000, and 2200. These numbers are arbitrary and to be explored depending on the LPT ideas you've seen in the last plenary, but in effect, they are how you schematise time, not far from ADSR for an envelope. Time groupings. Way too short for me but you want percussive stuff with low latency, so let's do that. Let's call them A-B-C.

- at time 400 (which is the end of my first slot) I will send my matching algorithm a query 400 samples long, starting from 0, against database A, and will play the result right away from its beginning, i.e. the beginning of the matching sound.

- at time 1000, I will send my matching algo a query 1000 samples long, starting from 0, against database B. When I get the result back, I will play the nearest match from 400 in until its end (1000), so I will play the last 600 samples only. Why? Because I can use the first 400 to bias the search, like a Markov chain, but I won't play it. Actually, this is where it gets fun: I would try both settings, searching a match for 0-1000 in database B1 and for 400-1000 in database B2. They will very likely give me different results, but which one is more interesting depends on the sounds themselves.

- at time 2200, I will send my query to match either from 0 (C1), from 400 (C2), or from 1000 (C3), again depending on how much I want to weigh the past in my query. That requires a few more databases. Again, I would play from within the sound, from the point where I actually care about my query.
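To make the bookkeeping of the steps above concrete, here is a minimal Python sketch. It is not an implementation of the matching itself; it only lists which query fires when, which span it matches on, and which span of the result gets played. The `Query` class and `build_queries` function are names made up for this illustration, and the database labels follow the A/B1/B2/C1/C2/C3 naming used above.

```python
from dataclasses import dataclass


@dataclass
class Query:
    name: str        # database label, e.g. "B2" (naming taken from the post)
    fire_at: int     # sample time at which the query can be sent
    match_from: int  # start of the span used for matching
    match_to: int    # end of the span used for matching
    play_from: int   # offset inside the matched sound where playback starts
    play_to: int     # offset where this window ends (C may keep playing past it)


def build_queries(bounds=(400, 1000, 2200), labels="ABC"):
    """List every candidate query for windows ending at `bounds` (in samples)."""
    queries = []
    for i, (label, end) in enumerate(zip(labels, bounds)):
        # Playback always starts where the previous window ended.
        play_from = 0 if i == 0 else bounds[i - 1]
        # The match can reach back to 0 or to any earlier window boundary,
        # depending on how much of the past should bias the search.
        starts = [0] + list(bounds[:i])
        for k, start in enumerate(starts, 1):
            name = label if len(starts) == 1 else f"{label}{k}"
            queries.append(Query(name, end, start, end, play_from, end))
    return queries


if __name__ == "__main__":
    for q in build_queries():
        print(q)
```

Each window gets one variant per possible amount of past context, which is why the number of databases grows with the number of windows.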

Now, this is potentially fun, but it has a problem:

There will be no sound out between 800 and 1000! If I start to play a 400-long sound at 400, I'll be done at 800, but the result of my 2nd analysis won't be available until 1000. The same applies between 1600 and 2200: the 600 samples from B start at 1000 and end at 1600, and C is not ready before 2200. That is ugly.
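The gap arithmetic can be checked with a few lines of Python. This is only a sketch under the same simplifying assumptions as above (perfect onset detection, instant match), and `naive_gaps` is a hypothetical helper name.

```python
def naive_gaps(bounds=(400, 1000, 2200)):
    """Silences left when each segment is played as soon as its window closes."""
    gaps = []
    prev = 0
    for i, end in enumerate(bounds[:-1]):
        length = end - prev           # samples played for this window
        playback_end = end + length   # playback starts when the window closes
        next_ready = bounds[i + 1]    # earliest time the next segment can start
        if playback_end < next_ready:
            gaps.append((playback_end, next_ready))
        prev = end
    return gaps


if __name__ == "__main__":
    print(naive_gaps((400, 1000, 2200)))
    print(naive_gaps((700, 1400, 2100)))
```

With 400/1000/2200 this reports the two silences described above; with evenly spaced windows such as 700/1400/2100 each segment ends exactly when the next one becomes available, so no gap is reported.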

So what needs to happen is that your second window must complete during the playback of the first, and the 3rd during the playback of the 2nd. There are 2 solutions to this: you can either play each window for longer, or you can make sure your window settings overlap. I would go for the latter, but again there are 2 sub-solutions: you can change how you think about your bundling (changing the values to 700/1400/2100 for instance) or, with a bit more thinking, you can delay the playback of each step so that it matches. With the values of 400/1000/2200 you would need to start your first sound at 1200, so it would play:

- from A: start at 1200, playing 0-400 (to 1600)

- from B: start at 1600, playing 400-1000 (to 2200)

- from C: start at 2200, playing from 1000 up

so that would need adding 2 cues/delays, one at time 1200 and one at time 1600.
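Where the 1200 comes from: for playback to be continuous, each segment must start no earlier than the moment its window closes, so the global delay has to be at least as long as the longest window. A small Python sketch of that reasoning, still assuming an instant match (`delayed_cues` is a made-up name):

```python
def delayed_cues(bounds=(400, 1000, 2200)):
    """Return (global delay, playback start time of each segment), in samples."""
    starts = (0,) + tuple(bounds[:-1])
    # Segment i plays at delay + start_i and must not begin before end_i,
    # so the delay has to cover the longest window (end_i - start_i).
    delay = max(end - start for start, end in zip(starts, bounds))
    return delay, [delay + start for start in starts]


if __name__ == "__main__":
    print(delayed_cues((400, 1000, 2200)))
    print(delayed_cues((700, 1400, 2100)))
```

For 400/1000/2200 the longest window is C (1200 samples), which gives starts at 1200, 1600 and 2200. For 700/1400/2100 the delay is 700, i.e. no extra waiting beyond the first window.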

Obviously, all of this is only problematic in real time. Sadly, we can't see into the future. More importantly, the system would need to take the query time into account as well before starting to play, since the return of the best match would never be instant and would depend on database size…
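One way to fold the query time into the same calculation is to add a worst-case latency per window before taking the maximum. The sketch below assumes such a worst-case figure can be measured for each database; the latency values in the example are invented purely for illustration, and `delayed_cues_with_latency` is a hypothetical name.

```python
def delayed_cues_with_latency(bounds=(400, 1000, 2200), latencies=(0, 0, 0)):
    """Global delay and segment start times when each query takes time to return.

    `latencies` holds an assumed worst-case query time per window, in samples.
    """
    if len(latencies) != len(bounds):
        raise ValueError("need one latency per window")
    starts = (0,) + tuple(bounds[:-1])
    # Segment i is available at end_i + latency_i and plays at delay + start_i.
    delay = max(end + lat - start
                for start, end, lat in zip(starts, bounds, latencies))
    return delay, [delay + start for start in starts]


if __name__ == "__main__":
    # Invented latencies: larger databases for longer windows answer more slowly.
    print(delayed_cues_with_latency((400, 1000, 2200), (50, 100, 300)))
```

With zero latency this reduces to the 1200/1600/2200 schedule above; any latency on the longest window pushes the whole schedule later by the same amount.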

I hope this helps a bit? It at least might help understand why your problem is hard…