I finally got some stuff up and running, with some help from Martin Dupras (is he not on the forum?).

(copypasta from the video description):

Here’s an early test of something I’m building for my Landscape NOON. The idea is that all the code/hardware will eventually live on a Bela as a standalone “instrument” with some additional components to scale the CV outputs as required.

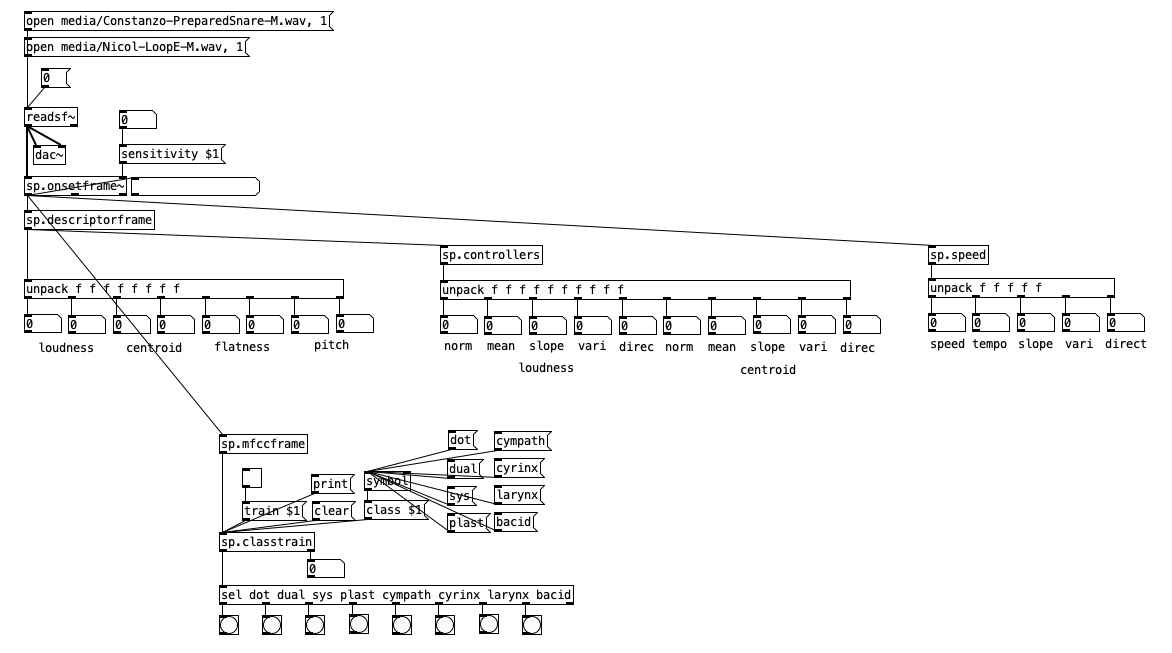

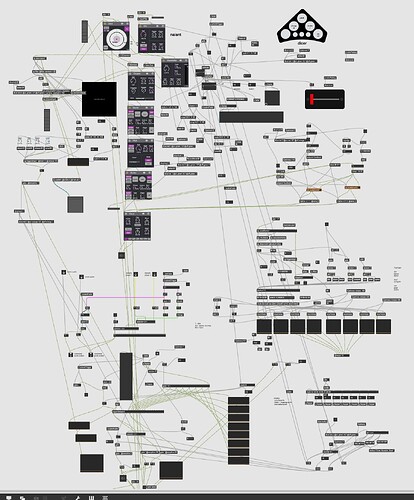

In this video I’m using machine learning (via FluCoMa + SP-Tools running in Pure Data) to train six classes of hits on the snare (center, “edge”, two crotales, rim tip, and rim shoulder), with each class triggering one of 6 voices on the NOON. I’m also using descriptor analysis of each attack to scale the gate length, gate height, and envelope parameters.
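For anyone curious how the classify-then-scale idea fits together, here’s a minimal Python sketch of the same flow outside of Pd. It is not the FluCoMa/SP-Tools code. It swaps in a plain 1-nearest-neighbour classifier over per-hit feature vectors (standing in for something like mean MFCCs). The class-to-voice mapping, the -60 to 0 dB loudness range, and the gate ranges are all hypothetical values chosen for illustration.

```python
import math

# Class labels from the post; the order here is a hypothetical class -> voice mapping.
CLASSES = ["center", "edge", "crotale_1", "crotale_2", "rim_tip", "rim_shoulder"]


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class NearestNeighbourClassifier:
    """1-nearest-neighbour classifier over per-hit feature vectors (e.g. mean MFCCs)."""

    def __init__(self):
        self.examples = []  # list of (feature_vector, label) training pairs

    def add_example(self, features, label):
        self.examples.append((list(features), label))

    def predict(self, features):
        if not self.examples:
            raise ValueError("classifier has no training examples")
        # Return the label of the closest stored training hit.
        _, label = min(self.examples, key=lambda ex: euclidean(ex[0], features))
        return label


def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamped to the output range."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


def hit_to_voice(classifier, features, loudness_db):
    """Classify one attack and derive hypothetical gate settings from its loudness."""
    label = classifier.predict(features)
    return {
        "label": label,
        "voice": CLASSES.index(label),  # hypothetical: class i triggers voice i
        "gate_ms": scale(loudness_db, -60.0, 0.0, 5.0, 200.0),  # louder -> longer gate
        "gate_height": scale(loudness_db, -60.0, 0.0, 0.0, 1.0),  # louder -> higher gate
    }


if __name__ == "__main__":
    clf = NearestNeighbourClassifier()
    clf.add_example([1.0, 0.2, 0.1], "center")
    clf.add_example([0.1, 1.0, 0.3], "edge")
    clf.add_example([0.0, 0.1, 1.0], "rim_tip")
    print(hit_to_voice(clf, [0.9, 0.25, 0.1], -30.0))
    # {'label': 'center', 'voice': 0, 'gate_ms': 102.5, 'gate_height': 0.5}
```

A single nearest neighbour is about the simplest thing that works with only a handful of training hits per class. Inside Pd, FluCoMa’s own classifier objects (e.g. `fluid.knnclassifier` or `fluid.mlpclassifier`) are the proper tools for this job.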

I’ve also set up my Erae Touch to trigger the same voices (color coded), where the XYZ values in each of the 8 zones control gate length, gate height, and envelope release.
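The Erae side is basically a per-zone lookup plus some range scaling. Here’s a rough Python sketch that makes several assumptions. It assumes each touch arrives as a zone index with zone-local X, Y, and Z already normalised to 0..1. It assumes X drives gate length, Y drives gate height, and Z drives release. That axis ordering, the `ZoneTouch` type, and all the ranges are hypothetical. The time parameters use an exponential curve, so equal movements multiply the time by the same factor.

```python
from dataclasses import dataclass

NUM_ZONES = 8  # the 8 zones set up on the Erae Touch


@dataclass
class ZoneTouch:
    zone: int  # hypothetical zone index, 0..7
    x: float   # assumed zone-local position, normalised 0..1
    y: float   # assumed zone-local position, normalised 0..1
    z: float   # assumed pressure, normalised 0..1


def clamp01(v: float) -> float:
    return min(max(v, 0.0), 1.0)


def lin(t: float, lo: float, hi: float) -> float:
    """Linear map of t (clamped to 0..1) onto lo..hi."""
    return lo + clamp01(t) * (hi - lo)


def expo(t: float, lo: float, hi: float) -> float:
    """Exponential map of t (clamped to 0..1) onto lo..hi; lo and hi must be > 0."""
    return lo * (hi / lo) ** clamp01(t)


def touch_to_params(touch: ZoneTouch) -> dict:
    """Turn one touch into hypothetical gate/envelope settings for that zone's voice."""
    if not 0 <= touch.zone < NUM_ZONES:
        raise ValueError(f"zone must be in 0..{NUM_ZONES - 1}, got {touch.zone}")
    return {
        "zone": touch.zone,
        "gate_ms": expo(touch.x, 5.0, 500.0),        # hypothetical range
        "gate_height": lin(touch.y, 0.0, 1.0),       # normalised gate level
        "release_ms": expo(touch.z, 10.0, 2000.0),   # hypothetical range
    }


if __name__ == "__main__":
    print(touch_to_params(ZoneTouch(zone=2, x=0.5, y=0.25, z=1.0)))
```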

The plan is for the final version to live in a CNC’d wooden enclosure that houses the Bela, all the additional electronics, and some audio I/O, so it will be a completely standalone instrument.

It was a bit of a pain to work everything out in Pure Data, vanilla no less. I did experiment with Purr Data and a couple of others, but I kept running into crashes and weird behavior, so it’s all vanilla (+ FluCoMa).

At the moment it’s running on my desktop, but the idea is to move it to a Bela with some additional hardware for CV scaling, controller I/O, etc.
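On the CV scaling side, each output stage just needs a gain and an offset so that the DAC’s range lands on whatever range the NOON inputs expect. Here’s a small Python helper for working those numbers out. The 0–5 V source range and the ±5 V target below are placeholder assumptions, not Bela or NOON specs, so check both before building anything.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearStage:
    """V_out = gain * V_in + offset, e.g. an op-amp scaling/offset stage."""
    gain: float
    offset: float

    def apply(self, v_in: float) -> float:
        return self.gain * v_in + self.offset


def design_stage(src_lo, src_hi, dst_lo, dst_hi) -> LinearStage:
    """Gain and offset that map [src_lo, src_hi] volts onto [dst_lo, dst_hi] volts."""
    if src_hi == src_lo:
        raise ValueError("source range must not be empty")
    gain = (dst_hi - dst_lo) / (src_hi - src_lo)
    offset = dst_lo - gain * src_lo
    return LinearStage(gain, offset)


def dac_value_for(cv_target, stage, src_lo, src_hi) -> float:
    """Normalised 0..1 DAC value that makes the stage output cv_target volts."""
    if stage.gain == 0:
        raise ValueError("stage gain must be non-zero")
    v_dac = (cv_target - stage.offset) / stage.gain  # invert the stage
    n = (v_dac - src_lo) / (src_hi - src_lo)          # normalise to the DAC range
    if not 0.0 <= n <= 1.0:
        raise ValueError(f"{cv_target} V is outside the stage's output range")
    return n


if __name__ == "__main__":
    # Placeholder ranges (assumptions): 0..5 V DAC in, -5..+5 V CV out.
    SRC_LO, SRC_HI = 0.0, 5.0
    stage = design_stage(SRC_LO, SRC_HI, -5.0, 5.0)
    print(stage)                                       # LinearStage(gain=2.0, offset=-5.0)
    print(dac_value_for(0.0, stage, SRC_LO, SRC_HI))   # 0.5
```

The inverse helper, `dac_value_for`, is the direction the patch side would use. Given the voltage you want at the NOON, it returns the normalised value to write to the output, assuming the output takes a 0..1 value.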

I may end up including some of the SP-Tools I built for this in the next SP-Tools release, as I’m sure they would be useful for others. Basically, I built the parts for onset descriptors, classification (MFCCs), and meta-descriptors (controllers/speed):