Is it possible at this point to give us some outlook on what TB2 version 2 might look like? You mention in various replies to questions that you are working on this and that. The reason I’m asking: is it worth spending time finding solutions with the current tools to problems you might already have solved anyway? And is a release around the scheduled plenary in July planned/feasible?

Now, these are dirty questions.

Yesterday and last Monday we were talking about exactly that: what goes into the release(s) leading up to the plenary and, just as important, how we get to ‘feature completeness’ or at least interface stability. In other words, something is coming soon, but not with everything in it, and we hope to have everything done asap, as usual.

So I will flip the question: what are the most pressing issues you want to work around right now, and in what order? That will allow us to tell you (or even to change) the order in which they are coming, and in which release, with a rough date, or at least what is planned.

I know you know, but the goal here is obviously always to release perfect code with a perfect interface, optimised, the first time round… but you can imagine that the reason we brought techno-fluent composers like you into the project is the contribution we get from you using it, so let us know the things on your todo list and we’ll tell you which ones will be obsolete very soon.

That makes a lot of sense. We all suffer from the difficulties/inaccuracies in Max when passing from signal to control and back.

- helper patches which translate the buffer samples/channels into lists
- accepting lists as inputs to datasets
- getting stats on time differences:

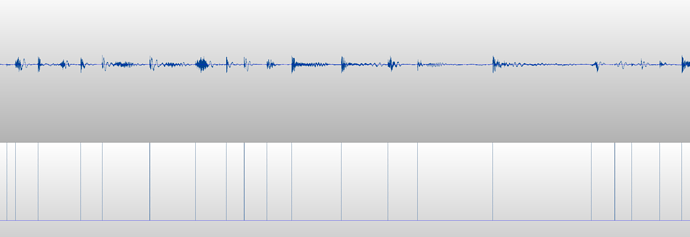

Let’s say I have a stream of attacks like this one and use ampslice to generate the ‘slicing’:

(this is the realtime recording of the signal in the left channel and the output of ampslice in the right channel to demonstrate what I’m working with)

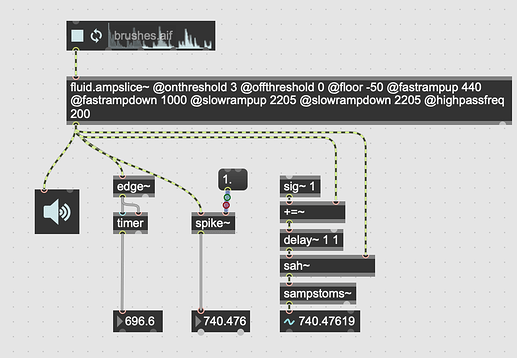

Unless I miss some existing possibility (and sorry for my ignorance, I’m not too familiar with gen~), I can only use edge~ and timer to calculate the durations between the generated onsets - which obviously is not too precise.

Why do I need this: I want to generate IN REALTIME, with the minimum necessary delay, a stream of averaged MFCCs which correspond to the attack detection. I will set a maximum delay (event duration), meaning that if the next attack does not happen within a certain time window, the sound so far gets analyzed and I get the MFCC of that portion (as in the case of an attack with a longer resonance).
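To make the segmentation logic described above concrete, here is a rough offline sketch in Python. It is not a FluCoMa or Max implementation: the function names, the frame-based representation, and the `max_frames` parameter are all assumptions made for illustration. It assumes one feature vector (e.g. one MFCC frame) per analysis hop, plus a flag per frame saying whether an onset was detected there.

```python
def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def averaged_segments(frames, onsets, max_frames):
    """Average feature frames per detected event.

    frames     -- list of feature vectors (hypothetical MFCC frames)
    onsets     -- list of booleans, True where an attack was detected
    max_frames -- assumed maximum event duration, counted in frames

    An event is closed either by the next onset or by reaching
    max_frames, whichever comes first. Frames after a timeout are
    ignored until the next onset. An event still open at the end of
    the input is not emitted.
    """
    results = []
    current = []        # frames of the event being collected
    collecting = False  # True between an onset and the end of its event
    for frame, is_onset in zip(frames, onsets):
        if is_onset:
            if collecting and current:
                # The next attack arrived in time: close the previous event.
                results.append(mean_vector(current))
            current = []
            collecting = True
        if collecting:
            current.append(frame)
            if len(current) >= max_frames:
                # Timeout: analyse what has been gathered so far.
                results.append(mean_vector(current))
                current = []
                collecting = False
    return results
```

With one-dimensional dummy frames `[[0.0], [2.0], [4.0], [6.0], [8.0], [10.0], [12.0]]`, onsets at the second and fourth frame, and `max_frames=3`, the first event is closed by the second attack and the second one by the timeout.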

What is the goal? I’m trying to obtain an analysis which somehow respects musical entities. The data will then be used to match against a dataset and to pick near neighbors. Unlike entrymatcher, this does not work with a fixed time grain of 40ms, which leads to very jumpy results, but should generate a dialogue. I’m not too concerned about the delay, as the near neighbors will not be played back at the very moment, but rather a few ms or seconds later - kind of a call-response relationship with some compositional warping of the response. Hope this is a workable description. Otherwise we can zoom about it.
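For illustration only, the matching step could look like the brute-force sketch below. This is not how fluid.dataset~ or any other FluCoMa object works internally; the `nearest_neighbours` function and the dictionary layout are hypothetical, and plain Euclidean distance is an assumption.

```python
import math


def nearest_neighbours(query, dataset, k=1):
    """Return the ids of the k dataset entries closest to `query`.

    dataset -- dict mapping an id to a feature vector
               (hypothetical layout, e.g. one averaged MFCC per sound)
    Uses plain Euclidean distance and a full sort, which is fine for
    small datasets but not tuned for realtime use.
    """
    ranked = sorted(dataset, key=lambda name: math.dist(query, dataset[name]))
    return ranked[:k]
```

Each averaged vector coming out of the analysis would be passed in as `query`, and the returned ids could then be scheduled for delayed playback.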

That’s helpful to know this is a high priority for you. Because building this functionality into the existing C++ framework in a way that’s sustainable and cross-platform is non-trivial, it sounds like we need to formulate some duct-tape based stop gaps, which opens up some negotiation between us about what level of duct-tape would fulfil your needs for now, in relation to what’s a good use of our development time. We’re hoping to push an update out very soon indeed, so (fair warning) a solution that would make it into that release might well be very duct-tapey!

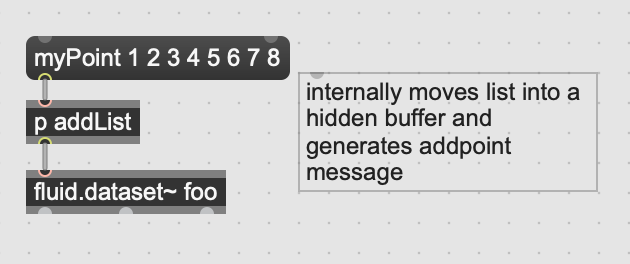

For instance: would having helper abstractions to move between lists and buffers obviate the need for new facilities directly targeting fluid.dataset~? That is, could you get by with a temporary hack like this?

(except that p addList would be an actual abstraction). This is much more realisable in the coming days than something that would add a new message to fluid.dataset~ itself.

If I understand you correctly – you want a signal-rate spike timer? – there are a couple of ways. [spike~] is built in, but it can sometimes be confusing (IME) if you don’t get the reset time in line with expectations. Alternatively, an accumulator with sample and hold gives you total control, and you remain in the signal domain:

[spike~] might be fine here, because it seems like in what follows you’d be back in the message domain anyway?
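The accumulator-with-sample-and-hold idea can be sketched offline in Python as follows. This is not the Max patch itself, and it assumes the slicer output is a signal that rises above some threshold at each onset; the function name, `threshold`, and `sample_rate` defaults are illustrative assumptions.

```python
def inter_onset_intervals(trigger, sample_rate=44100.0, threshold=0.5):
    """Return the durations in ms between successive rising edges.

    trigger -- sequence of samples, assumed to cross `threshold`
               upwards once per detected onset
    Counting samples keeps the measurement sample-accurate, unlike
    timing the events in the message domain.
    """
    intervals_ms = []
    count = 0           # accumulator: samples since the last onset
    seen_first = False  # no interval can be reported before the first onset
    previous = 0.0
    for sample in trigger:
        rising = previous < threshold <= sample
        if rising:
            if seen_first:
                # Sample and hold: latch the accumulated count at the edge.
                intervals_ms.append(count * 1000.0 / sample_rate)
            seen_first = True
            count = 0   # reset the accumulator at every onset
        count += 1
        previous = sample
    return intervals_ms
```

For a trigger of `[0, 1, 0, 0, 1, 0, 0, 0, 0, 1]` at a sample rate of 1000 Hz, the onsets sit 3 and 5 samples apart, so the function reports intervals of 3 ms and 5 ms.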

Well, priorities are constantly shifting.

I’m not only thinking about my own use, but also how to teach this stuff in the future. Thus just putting it on your radar for whenever it’s ready.

Abstractions are fine for now. And I will look into the use of spike.

Obviously my concert happened, so my feelings about subsequent releases are lower priority, but I would think getting re-interfaced stuff out sooner rather than later, even if green, would be better, before people start building patches around existing paradigms.

From the sounds of it, based on loads of forum hinting, the change is going to be super dramatic, so the sooner that happens the better I would think.

At this point I’m not so concerned that the next release will break stuff. My explorations are still very fluid and my learning process is neither logarithmic nor linear. It feels more like a maze.

That’s pretty much in line with our thinking: we’re working towards getting something out asap, and front loading as many of the breaking changes we know about. I don’t know about super dramatic, but certainly we’re hoping for a pronounced improvement in usability, and there’s some new functionality already done.