Hi everyone,

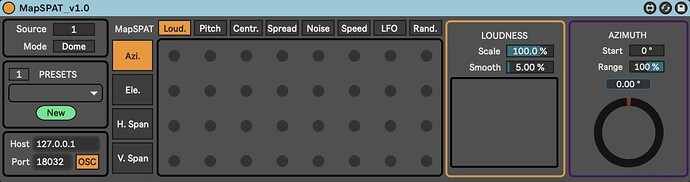

Here is a new tool called MapSPAT, which uses the FluCoMa toolkit to provide real-time spatialization based on audio analysis.

It is a Max4Live device that analyzes audio descriptors in real time. It lets you link variations in the sound to 3D positions using a simple matrix and a few basic parameters. This is useful if you don’t want to plan every aspect of the spatialization before a concert.

If you try it out, we would love to get feedback or bug reports on the GitHub page: GRIS-UdeM/MapSPAT (Real-time spatialization based on audio descriptor analysis).

Thanks!