Hello all! I am working on a project greatly inspired by the amazing work of @jamesbradbury and his Mosh and FTIS ideas. I have run into some problems very early on and would appreciate any experience, approach, or suggestion regarding them.

At the first stage of this project, which is intended to eventually become an electroacoustic piece (hopefully), I am trying to build a large corpus of databent audio. Following James's mosh, I wrote a very simple Python script that does the same thing (wrapping any binary file as a valid .wav). I have tried converting very different kinds of files and got some interesting results. However, as expected, there is also an overwhelming amount of noise. I would say the ratio is roughly 97% noise to 3% “nice sounds” I would consider using, and it could be even less.
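For anyone who has not seen this kind of conversion, here is a minimal sketch of the idea using only Python's standard `wave` module. It is not the script from the attachment: the function name `bend_to_wav` and the defaults (mono, 16-bit, 44.1 kHz) are assumptions chosen for illustration.

```python
import wave
from pathlib import Path


def bend_to_wav(src, dst, sample_rate=44100, channels=1, sample_width=2):
    """Wrap the raw bytes of any file in a WAV header (hypothetical helper).

    Returns the number of audio frames written.
    """
    data = Path(src).read_bytes()
    frame_size = channels * sample_width
    # Drop trailing bytes that do not fill a whole frame.
    usable = len(data) - (len(data) % frame_size)
    with wave.open(str(dst), "wb") as out:
        out.setnchannels(channels)
        out.setsampwidth(sample_width)
        out.setframerate(sample_rate)
        out.writeframes(data[:usable])
    return usable // frame_size
```

Changing `sample_width` or `channels` reinterprets the same bytes differently, so one source file can give several quite different results.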

In order to build a corpus consisting of these kinds of sounds, I want to filter all the noisy parts out. Because the ratio is so small, I need large amounts of data (just for the sake of testing, I have bent almost 22 GB of files, but I would like to scale it even further).

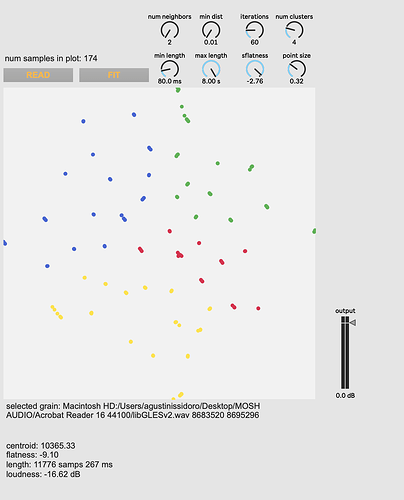

To achieve this, I patched an analysis loop in Max. I used fluid.bufnovelty with the spectrum algorithm (maybe mfcc would be better?) to slice every file into “differentiated spectrum” chunks. So far, this has worked just fine. Of course, some things get lost (e.g., “nice sounds” that get sliced together with noisy parts).

Then I analyse every slice with fluid.bufstft, fluid.bufmfcc and fluid.bufspectralshape, take the stats of each analysis, and store the results in separate fluid.dataset objects. I am passing “absolute_file_path position_in_samples” as an identifier to keep track of every slice.

I’ve got this far, and now the problem arises: how should I classify all the slices into “noise” and “not-noise” so I can filter the noise out?

My first, straightforward approach is to train an MLP for classification and label every slice. Then I would simply batch process all the audio files in Python to cut the noisy parts and keep only the good slices. This should dramatically decrease the size of the corpus and also make it more manageable for later use.
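The batch step could look roughly like the sketch below, again with only the standard `wave` module. The helper names `parse_identifier` and `keep_slices` are hypothetical, and I am assuming each good slice is available as a `(start, end)` pair in frames, where `end` would be the onset of the next slice (the identifier itself only stores the start position).

```python
import wave


def parse_identifier(identifier):
    """Split 'absolute_file_path position_in_samples' on the last space,
    so paths that contain spaces still parse."""
    path, position = identifier.rsplit(" ", 1)
    return path, int(position)


def keep_slices(src, dst, regions):
    """Copy only the (start, end) frame ranges in `regions` from src to dst."""
    with wave.open(src, "rb") as inp, wave.open(dst, "wb") as out:
        # Reuse channel count, sample width and rate; the frame count in
        # the header is corrected automatically when the file is closed.
        out.setparams(inp.getparams())
        for start, end in sorted(regions):
            inp.setpos(start)
            out.writeframes(inp.readframes(end - start))
```

For example, `keep_slices("bent.wav", "clean.wav", [(0, 44100), (88200, 132300)])` would keep the first and third second of a 44.1 kHz file and drop everything else.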

However, I am skeptical about this being the most effective way to do it. If it is not, is there any other approach I could try? What type of analysis should I use to classify noise better? I was using the FFT (since noise is typically described as “even power across the whole spectrum”, I thought this would be the most straightforward). Or perhaps there is a totally different strategy someone else would try to get this working.
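One way to turn the “even power across the spectrum” idea into a single number is spectral flatness: the geometric mean of the power spectrum divided by its arithmetic mean. It is close to 1 for white noise and close to 0 for a pure tone. The sketch below is only an illustration in plain Python, not what my patch does; the naive DFT is slow and only suitable for short frames, and the function names, frame length and `eps` value are assumptions.

```python
import cmath
import math
import random


def power_spectrum(frame):
    """Naive DFT power spectrum for bins 1 .. N/2 - 1 (DC and Nyquist skipped)."""
    n = len(frame)
    spectrum = []
    for k in range(1, n // 2):
        acc = sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                  for t, x in enumerate(frame))
        spectrum.append(abs(acc) ** 2)
    return spectrum


def spectral_flatness(frame, eps=1e-12):
    """Geometric mean over arithmetic mean of the power spectrum."""
    power = [p + eps for p in power_spectrum(frame)]  # eps avoids log(0)
    log_mean = sum(math.log(p) for p in power) / len(power)
    return math.exp(log_mean) / (sum(power) / len(power))


# Compare a pure tone with seeded Gaussian noise on a 256-sample frame.
random.seed(0)
n = 256
sine = [math.sin(2 * math.pi * 8 * t / n) for t in range(n)]
noise = [random.gauss(0.0, 1.0) for _ in range(n)]
print("sine flatness: ", spectral_flatness(sine))
print("noise flatness:", spectral_flatness(noise))
```

The noise frame scores far higher than the sine, so a threshold on the mean flatness of a slice could serve as a cheap first filter before any training. The catch is that databent material that is noisy but interesting would also score high, so this probably cannot replace a classifier on its own.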

I am sharing the .py and the Max patches in case someone wants to give them a try. I am open to any suggestions, and critiques are very welcome as well!

Thank you very much to all and have a great weekend

Archivo.zip (18.6 KB)