Posting about this here rather than as a GitHub issue, as I’m not sure if this is a genuine bug or just a gross misunderstanding of @padding modes on my part.

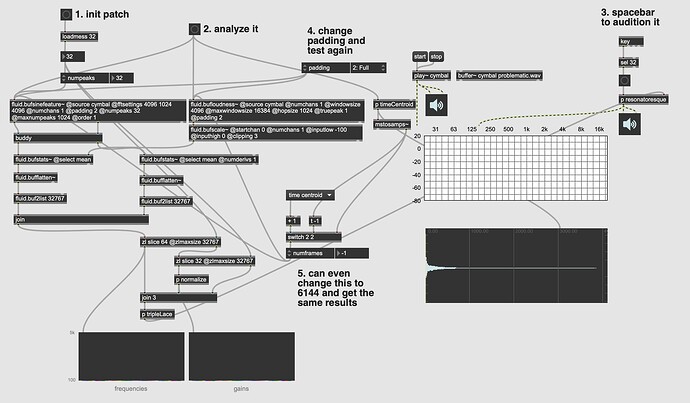

As outlined in this thread over here, I’m getting some inconsistent results when trying to create frequency/gain/decay-rate tuples for a resonators~-esque process. For many samples it works really well, but other times it’s a bit out of tune, or completely off. I tested a bunch of different FFT settings and other things, and then decided to try out the @padding modes, which made a massive difference.

I seem to get very good/usable results when using @padding 0 or 1, but the moment I switch to @padding 2 the numbers take a dive.

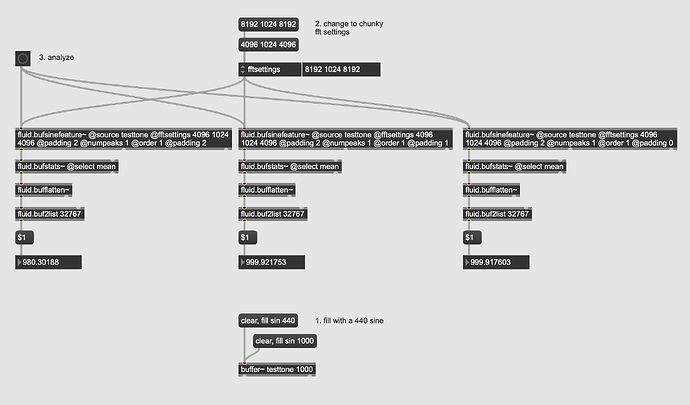

Here’s a simple demo patch that shows what I mean. In this case it’s analyzing only a single sine wave, but you can clearly see that it’s out of tune in @padding 2 and fine in the other modes. There’s a more comprehensive patch in the other thread which does real analysis, but I wanted to show exactly what’s happening in isolation.

Is there something wrong with @padding 2 in fluid.bufsinefeature~? Or is there a technical reason why that padding mode (which I use everywhere else) specifically performs poorly in this context?

----------begin_max5_patcher----------

1143.3oc0YEsbapCD8Y6uBML2G8kQRrRf5S4+3N2IirsbBsXvCH2lb6z7seW

DlD2DHESDTW+fADRb3r6pcOR78kKBVW7foJf7Ix+PVr36KWrv0TcCKNc8hf8

5G1joqbcKXSw98lbavpl6YMOXcsGERz45rG+OS6sxOtOMOyXciicpwCZ6l6S

yu61RyFaCtLZRHcEgEScGXp5Cb7Bx+9xSp3ns8QQO0Z5VGvEq+7eKCpa5GKW

V+2pOHS3gjM2qyuyPrE3YGy+xijc6rjJi0hu4UsCHKM2ro3XtaT7V9UZpvGp

1lVjeam8XnlEAD0XOhO6PTzkXVD9zrvBI6RyxHeK0dOQS.fRpP9ELNZIRDmQ

qKyaCigV6MUU56LugVILEGCA4.o9rNYCue1.b4YzP43Dm2KYZsKMMYe7fo4w

DD77.NimQ9jm.UIa3Y8YikmfZB3IeL7bWVAh4kF8Em3Rx.JtiNz2RjcEk60t

dK6jZ7do1JRvZLsQmTT9bj0AcoduwZJu0jqWm4FM0i94+hco91VahfGpN+GD

glFUnvidZ4nBoyMeCG7aX5trioaCWeb2tLs0ZxeZjACQwIgfJlKDLZjhmHU.

FhS+Eg3uabP2jGlBxyyRqrjHdrLdrNdlHLFRj7XzBnjPDSUO0PM1430uPca.

DSgAnBK1V8D4lJSFxLxdiNejFhHfExAQRBWJDIpHZLZGjQ9OPPNI1ArX7Ni1

drzTaMJNVtAUvXpr1hbC4FTASq.FxOWKfbyA81s3MHbxMH2NXzeohvH2TTt0

TVeR68ouqzmKbZGGmhgIYvIcbZbhHF.Qs9FmVvHQul6n20b2qIOd9pwbpV4r

WiQHtdqwzZSlgZLB40TMlSDetpwHhuxpwz53mqZLhjqzZLsAByTMFg5OzZLL

uTi4j0ddqwPGiIe8wZi2EKcENaKS3P6+W5zn9qk.yUsjMYFc4plsV.CuvPFJ

8hma0rrTIqYQpTlWWdpfGLg7E.5HSkHRb9cE3W1x7Xhi5BmlxmdIEQud2e8b

Yoz4VYb9XSVhx4PUWumDJPMiaEA7aQkHDeEuSDvbIRDRtp1HBXN0HxXQWaaD

ALqZDYL905FQ.yoFQLY7enhD49YiHf90HB7IQinZT4cvrJkGSGYcSVRRSjiG

zIT+hz3rewIFzAKGZIF2C14De02fzQm51+YqRSvzoWqlOQxJxK7YKFjkl691

am0GUSe5ztOTb3yDNpAfSc90y5jaxQ+0t8JxztQl8gPtdsF+lH8vfdRX8qHT

OPK8PL0qd+6FIgOhdkCxbJ7ARxgfD3CjFRJFI2GHIFBRQd.IQxPhH7QrmXH9

IgOhHDCIAlH1GHMjYtsl3oO8jJo6zS7IubvDg7PBNqkt5A66PJqC9HynfMWH

Uu3lA.kOBOgg3ofXuPpgjE9Yp+wvhRGDVuMQbiZV8gCe0TVcp6NXP07etvM0

HYk6xz7lKEtKKMeMss+t0vDnKQw7VTIOtFJmp5GjMaHav9BbRVNtXfFAHHAQ

HcqTHGUZWcP2vE2BJV9ik+OvQ3RTb

-----------end_max5_patcher-----------

(Unrelatedly, I’m getting an instant crash with this test patch, which I did open a GitHub issue about.)

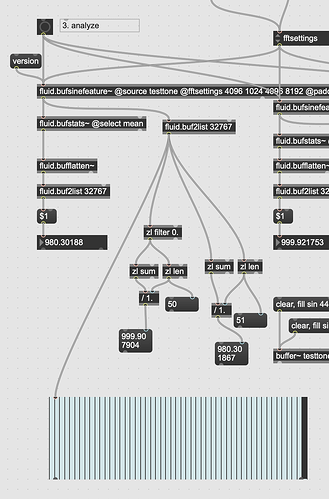

Yes, I think it’s just the end-padding frame (which ends up with a frequency of zero) pulling down the mean:

If I take the list directly out of bufsinefeature~, we can see the last frame is 0. If I filter out the 0 and take the mean, I get a better estimate.
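For anyone following along outside Max, here’s a minimal Python sketch of the same idea, assuming you’ve already pulled the per-frame frequency list out of the buffer. The frame values and the `mean_ignoring_zeros` helper are hypothetical, just to show how a single 0 Hz frame drags the mean down:

```python
from statistics import mean


def mean_ignoring_zeros(freqs):
    """Mean of per-frame frequency estimates, skipping frames that reported
    0 Hz (i.e. frames where no peak was found). Hypothetical helper."""
    voiced = [f for f in freqs if f > 0]
    return mean(voiced) if voiced else 0.0


# Hypothetical per-frame output: a steady 440 Hz sine plus an empty final padded frame.
frames = [440.0, 440.0, 440.0, 440.0, 0.0]

print(mean(frames))                 # 352.0
print(mean_ignoring_zeros(frames))  # 440.0
```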


That kind of raises more questions.

Wouldn’t the behavior happen on both ends, since @padding applies to the start and end of the analysis? In that case, shouldn’t the first frame also be zero?

Second, shouldn’t both the first and last frames be non-zero? They would get pulled down by the zero padding, but presumably a hop’s worth of material would still be in there, so the estimate should be, at minimum, >0.

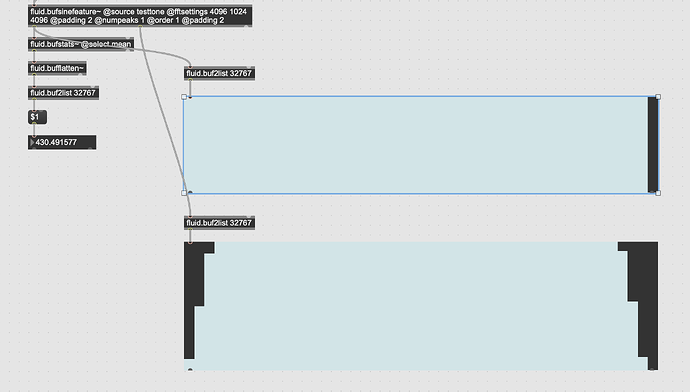

Testing further, I suspected it might be starting the analysis too early and having an extra frame left over, but looking at the output of the magnitudes, I’m wondering if it’s analyzing too many frames altogether.

What’s also notable here is that even when the magnitude is low (the first few frames, and the last few before the final one), the frequency estimate stays the same.

So there’s basically a final completely empty frame that’s being analyzed.
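A rough way to guard against that is to only average frames whose magnitude clears a threshold. This is a hypothetical Python sketch, not something bufsinefeature~ does for you; the magnitudes are made-up dB values and the -40 dB cutoff is an arbitrary assumption:

```python
def gated_mean_freq(freqs, mags_db, threshold_db=-40.0):
    """Average the frequency estimates of frames whose magnitude (in dB) is at
    least threshold_db. The -40 dB default is an arbitrary, assumed cutoff."""
    kept = [f for f, m in zip(freqs, mags_db) if m >= threshold_db]
    return sum(kept) / len(kept) if kept else 0.0


# Hypothetical frames: the quiet tail still reports 440 Hz (as noted above),
# while the empty final frame reports 0 Hz at -inf dB.
freqs = [440.0, 440.0, 440.0, 0.0]
mags_db = [-6.0, -12.0, -55.0, float("-inf")]

print(gated_mean_freq(freqs, mags_db))  # 440.0
```

Since the frequency estimate doesn’t change in the quiet frames, gating here mostly just throws away the empty final frame, but it would also drop any noisy near-silent frames if they did wander.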

A frequency of 0 doesn’t necessarily mean that the final frame was empty; it could have been so sparsely filled that no worthwhile spectral peaks were detected. Basically, padding 2 will always make sure that the entirety of the final frame is accounted for, even if that means padding that leaves a very sparse frame. So it’s possibly not so useful for frequency analysis, in the absence of mirroring. It doesn’t apply to the front because, when padding is applied, the front padding is always win/2, IIRC.
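To make the “front is always win/2” point concrete, here’s a simplified model of how much zero padding each mode adds. This is my assumption of the behaviour described above (mode 1 pads win/2 at both ends; mode 2 additionally extends the tail so the last window ends exactly at the end of the padded buffer), not FluCoMa’s actual code, and 1024/512 are just assumed window/hop values:

```python
import math


def padding_amounts(num_samples, win, hop, mode):
    """Return (front, back) zero padding in samples under a simplified,
    ASSUMED model of padding modes 0/1/2 (not FluCoMa's source)."""
    if mode == 0:
        return 0, 0                       # no padding at all
    front = back = win // 2               # modes 1 and 2: half a window each side
    if mode == 2:
        padded = num_samples + front + back
        # grow the tail until (padded - win) is a whole number of hops,
        # so the final window ends exactly at the end of the padded buffer
        padded = win + math.ceil((padded - win) / hop) * hop
        back = padded - num_samples - front
    return front, back


print(padding_amounts(30721, 1024, 512, 1))  # (512, 512)
print(padding_amounts(30721, 1024, 512, 2))  # (512, 1023)
```

In this model the front never changes between modes 1 and 2; only the tail grows, which is why only the last frame ends up sparse.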

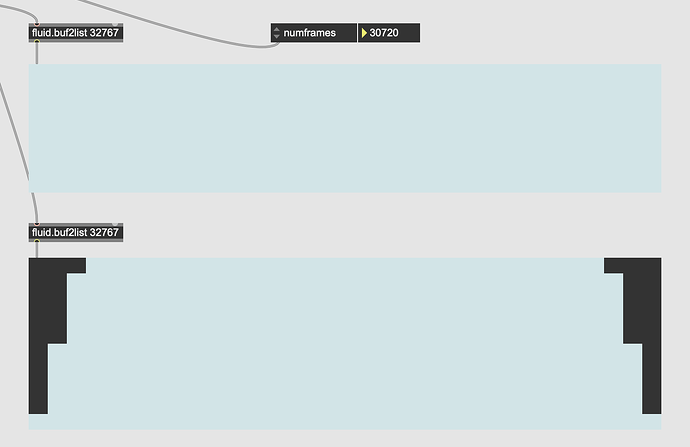

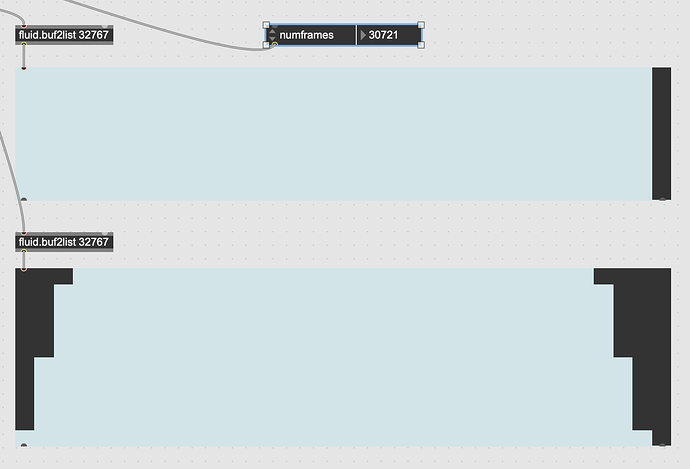

More detail: it appears to be a rounding-ish thing, where if the length spills over by a single sample (past the quantized hop values), the last frame ends up being garbage:

numframes 30720

numframes 30721
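Using the same kind of simplified model (again an assumption about how the padding modes behave, not FluCoMa’s source, with an assumed window of 1024 and hop of 512), you can see how one extra sample tips padding 2 into adding a whole extra frame that contains almost no real audio:

```python
import math


def last_frame_fill(num_samples, win, hop, mode):
    """Return (num_frames, real_samples_in_last_frame) under a simplified,
    ASSUMED model of padding modes 0/1/2 (not FluCoMa's source)."""
    front = 0 if mode == 0 else win // 2
    padded = num_samples + 2 * front
    if padded < win:
        raise ValueError("buffer too short for one full window in this model")
    if mode == 2:
        # grow the tail until the last window ends exactly at the buffer end
        padded = win + math.ceil((padded - win) / hop) * hop
    num_frames = (padded - win) // hop + 1
    # position of the last window in original (unpadded) sample coordinates
    start = (num_frames - 1) * hop - front
    end = start + win
    real = max(0, min(end, num_samples) - max(start, 0))
    return num_frames, real


print(last_frame_fill(30720, 1024, 512, 1))  # (61, 512)
print(last_frame_fill(30720, 1024, 512, 2))  # (61, 512)
print(last_frame_fill(30721, 1024, 512, 1))  # (61, 513)
print(last_frame_fill(30721, 1024, 512, 2))  # (62, 1)
```

Under these assumptions the extra padding 2 frame holds a single real sample followed by 1023 zeros, which fits with it reporting 0 Hz or junk.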

It’s not garbage, so much as padding 2 fulfilling its contract.


I’ll try to narrow down the behavior in the larger analysis patch, as even if I manually set @numframes to a multiple of the hop size, I get radically different (and worse) results when using @padding 2 on a real-world example.

OK, here’s a stripped-back real-world example:

example.zip (126.3 KB)

(basically a more stripped-back version of the patch in the resonance-creating thread)

There are a few more moving pieces here, but the core issue is the same: @padding 2 is giving weird results. In this case the average is pulled up quite a lot (by a tritone, by the sound of it), and changing the length to a multiple of the hop doesn’t “fix” it like it does above.

This is the same thing, from what I can tell: in @padding 2 the final frame has a bunch of 0s, meaning that the analysis couldn’t find all 32 peaks in that partial window.
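Here’s a small hypothetical Python sketch of what that does to per-peak averages, and of skipping the 0 placeholders per peak slot instead. It uses 3 peaks rather than 32 to keep it readable, and it assumes the slot positions stay consistent from frame to frame (easier to trust with @order 0); the data and the `per_peak_means` helper are made up for illustration:

```python
def per_peak_means(frames):
    """frames: one row per analysis frame, each holding N peak frequencies
    (0.0 where no peak was found). Returns the mean of each peak slot,
    skipping the 0.0 placeholders. Hypothetical helper."""
    num_peaks = len(frames[0])
    means = []
    for k in range(num_peaks):
        found = [row[k] for row in frames if row[k] > 0]
        means.append(sum(found) / len(found) if found else 0.0)
    return means


# Hypothetical data: the last, mostly empty padded frame only found one peak.
frames = [
    [220.0, 440.0, 660.0],
    [220.0, 440.0, 660.0],
    [220.0, 0.0, 0.0],
]

print([sum(col) / len(col) for col in zip(*frames)])  # upper slots dragged toward 0
print(per_peak_means(frames))                         # [220.0, 440.0, 660.0]
```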

If you switch to @order 0 (by frequency) it’s a bit easier to follow. But with all those 0s, when you take the mean, a bunch of them (20, in this case) get dragged down. A quick & dirty way to squish this would be to set @outlierscutoff on bufstats to something conservative like 0.99.
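For the curious, here’s roughly what an IQR-style outlier fence does to a single peak channel, as a Python sketch. I’m assuming a plain Tukey-style fence here; I haven’t checked exactly how fluid.bufstats~ interprets @outlierscutoff, so the `k` below shouldn’t be read as the same number as 0.99, and the channel data is hypothetical:

```python
from statistics import mean, quantiles


def iqr_filter(values, k):
    """Keep only values inside [Q1 - k*IQR, Q3 + k*IQR].

    A generic Tukey-style fence, NOT a reimplementation of
    fluid.bufstats~ @outlierscutoff (its exact rule is assumed, not known).
    """
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


# Hypothetical single peak channel: ten good frames plus the empty padded one.
channel = [440.0] * 10 + [0.0]

print(mean(channel))                   # 400.0
print(mean(iqr_filter(channel, 1.5)))  # 440.0
```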


That seems to help, but not as much as just turning the padding off altogether. I think for this specific process I’ll just go with @padding 0 since it seems to work better without having to babysit the output.

It does mean having to go back and re-do all my corpora again though…

Yeah, I would’ve thought that for frequency analysis in general, and of percussive sounds in particular, the padding isn’t giving you much value: do you really want to catch more of the onset transient and the quiet, noisy stuff at the tail?

I just got used to padding (philosophically more than anything else) from the earlier versions where that was the standard, where “every bit of the audio was analyzed fully”, but for certain things it doesn’t really make sense.

I’m still confused/surprised as to why it would freak out (i.e. not give me the results I want) in the way that it does, but that’s likely a function of the pitches, the number of partials, and maths. And who’s gonna argue with them?!