Hello,

I am trying to build a dataset from a gesture controller (Leap Motion) in order to have a robust identification of a few gestures.

However I am not sure about a few basic choices/settings.

The first question is: should I use an MLP or a KNN? I have tried to follow the example of the fluid.mlpclassifier~ helpfile but my data (with the dimensionality reduced to 2) do not exhibit clusters of points as easy to identify as those of the example. So does it mean that I should use a KNN instead? What are the criteria for choosing one system or the other?

Also, I don’t understand when/why one should reduce the number of dimensions of the points in the dataset. My points have 46 dimensions originally and I have reduced these to 2 dimensions to fit the fluid.mlpclassifier example but I don’t understand if there’s any obvious reason for this reduction and which number of dimensions to choose. Two dimensions seem to be good for a 2D controller but obviously the real data coming from the controller after training will have 46 dimensions. So what’s the right choice here?

This is a cool problem, as it is far from trivial. Let’s unpack together so I can help.

- I think your first problem is one of encoding the gestures. What do you analyse to generate those 46?

- you definitely do not want to reduce to 2d if you don’t need to. We can talk about that too.

- what ‘triggers’ the gesture recognition?

So again, knowing the data/application will help understand the task, which will help you (and everyone else reading this now and later).

bring it on!

OK. So the 46 data are hand and finger positions and quaternions as well as a few pre-cooked high-level detectors (grab, pinch, fingers extension flags).

The gesture recognition should match the flowrate of the sensor (not totally constant but can reach 120fps). I don’t want to reduce the flow because I am using it to control some continuous interpolation too.

Without a NN I can already detect some gestures, but I want to get rid of false alarms (which occur, for instance, when we are in between easily identifiable gestures).

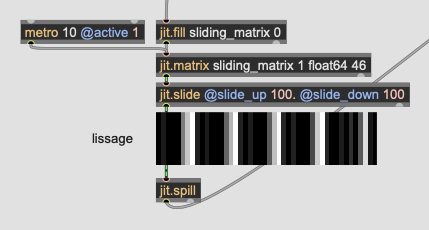

ok this is fun. What I would do first is to augment the number of dimensions with context/trend windows. I presume some are doing that already, but try doing a running average of the 46 (the ‘context’ of the last second or so)

then, with those 92 dimensions, I’d run a PCA on it to lower the dimension count - redundancy will be taken out and you know how much you lose. There is a full thread on this on the forum if you want to geek out, otherwise just try various acceptable levels of representation (90%, 95%, etc.)

then train the network on that. I reckon you’ll want a classifier. So you go from your input data to a class using MLPClassifier. If your training data is a good representation, it should converge.

Try this (with and without the trend average), and see how it goes, and come back here. If that doesn’t work, we can brainstorm something else.

Do you get the 92 dimensions by combining the original data with the averaged ones? Then why put them in the same dataset rather than comparing two different ones of 46 dimensions?

yes, 46 after 46. if it was 2 dimensions, you would get

a b - a’ b’

for a 4 dimension set. that way you have the immediate dimensions (the first 46) and the trend dimensions (the last 46) in a single 92 dimension vector

you can do that with fluid.stat for the RT detrending
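For readers who think more easily in code than in patch cords, the concatenation described above can be sketched in Python. This is only an illustration of the idea, not what fluid.stat does internally: `make_augmenter` and `window` are made-up names, and a window of about 120 frames is an assumption standing in for "the last second or so" at roughly 120 fps.

```python
from collections import deque


def make_augmenter(window):
    """Return a function that appends a running mean to each incoming frame.

    `window` is the number of most recent frames used for the mean
    (hypothetical parameter; about 120 would cover one second at 120 fps).
    """
    history = deque(maxlen=window)  # the oldest frame drops out automatically

    def augment(frame):
        history.append(list(frame))
        n = len(history)
        # Per-dimension mean over the frames currently in the window.
        trend = [sum(column) / n for column in zip(*history)]
        # Immediate dimensions first, trend dimensions after: "a b - a' b'".
        return list(frame) + trend

    return augment


augment = make_augmenter(window=3)
print(augment([1.0, 10.0]))  # [1.0, 10.0, 1.0, 10.0]
print(augment([3.0, 20.0]))  # [3.0, 20.0, 2.0, 15.0]
```

With 46 values per frame the returned vector has 92 entries. The same function has to be applied both when recording the training set and when predicting, so that the network always sees the same layout.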

Nice! I took the Jitter diversion. Will check that one.

Previously I had a look at the fluid.dataset helpfile for dimension reduction. It uses fluid.standardize~, fluid.mds~ and fluid.normalize~. Basically, why choose PCA instead of MDS? Also, what about the question from my previous message about the combination of raw and averaged data in the same set? Is this combination mandatory to get meaningful results, or, if it’s for the sake of comparison, how do we compare data which are in the same set? Wouldn’t two sets be easier for that purpose?

PCA is just rotating data to remove redundancy. It doesn’t distort the space. It is less efficient at representing radical dimension reduction (because distortion of space is important there, see the UMAP discussion)

but it is simpler and easier to deal with in real time.

and it will allow you to process new input points (as opposed to MDS)

in effect, you just remove dimensions that are redundant to help the network.
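To make the "process your input points" part concrete, here is a minimal Python sketch of the fit-once, transform-each-point idea. It is an illustration under assumptions, not the FluCoMa implementation: `fit_pca` and `transform_point` are hypothetical names, and the components are found by plain power iteration with deflation, which is fine for a sketch but not what a library would normally use.

```python
import math
import random


def fit_pca(points, n_components, iterations=200):
    """Learn a PCA from a list of equal-length points.

    Returns (mean, components, kept), where `kept` is the fraction of the
    total variance carried by the retained components.
    """
    n, d = len(points), len(points[0])
    mean = [sum(col) / n for col in zip(*points)]
    centered = [[x - m for x, m in zip(p, mean)] for p in points]
    # Sample covariance matrix, d x d.
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    total_variance = sum(cov[i][i] for i in range(d))
    rng = random.Random(0)  # fixed seed so the sketch is repeatable
    components, eigenvalues = [], []
    for _ in range(n_components):
        v = [rng.gauss(0.0, 1.0) for _ in range(d)]
        length = math.sqrt(sum(x * x for x in v))
        v = [x / length for x in v]
        for _ in range(iterations):  # power iteration towards the top direction
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        cv = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        lam = sum(a * b for a, b in zip(v, cv))  # variance along v
        components.append(v)
        eigenvalues.append(lam)
        # Deflate: remove the direction just found before looking for the next.
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)]
               for i in range(d)]
    kept = sum(eigenvalues) / total_variance if total_variance > 0 else 0.0
    return mean, components, kept


def transform_point(point, mean, components):
    """Project ONE new point with the stored mean and components."""
    centered = [x - m for x, m in zip(point, mean)]
    return [sum(c * x for c, x in zip(comp, centered)) for comp in components]


# Toy data lying on a line: one component keeps essentially all the variance.
training = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
mean, components, kept = fit_pca(training, n_components=1)
reduced = transform_point([2.5, 5.0], mean, components)
```

The point of the sketch is the split: the fit happens once on the whole dataset, and each incoming frame is then reduced on its own with the stored mean and components, so no new dataset is needed per frame. `kept` corresponds to the "how much you lose" figure (0.9, 0.95 and so on).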

===

Combination is not mandatory. Actually, nothing is. FluCoMa is about trying and seeing what works best for you.

I would try just the real-time 46 in pca. then the 92 in pca. see which one converges and has fewer errors.

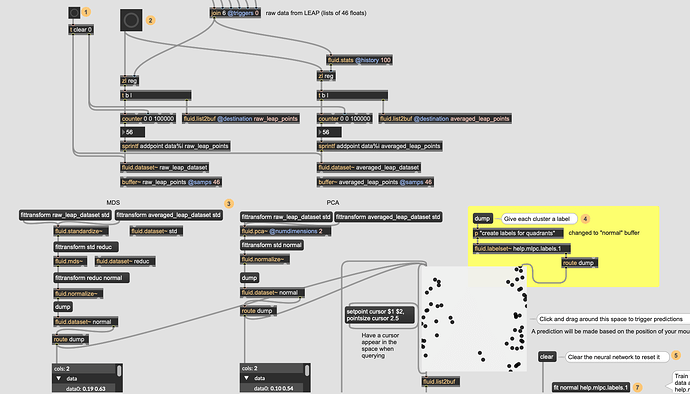

All right. Now I have made two datasets, one for the raw data, one for the averaged ones.

I have then the second part of the patcher where the dimension reduction is performed and I have merged it with the adapted example of the classifier help file.

However I still don’t understand how to teach my network the classification. The example relied on a dataset with easily identified clusters of data with clear boundaries on the 2D display and this is not the case here so I don’t know how to define my classes.

Obviously the question is: how to build the labelset and associate it with the dataset? Currently reading the helpfile of fluid.labelset~…

OK. Is this a correct way to associate labels and data in a supervised training?

It seems to function actually. Now the convergence seems to take ages. Tomorrow I will try to feed more data to the network, then I’ll be able to compare raw vs averaged and MDS vs PCA. Also the question of the number of dimensions remains. Obviously 2 dimensions are useful for display.

If training has been performed with data reduction, is it possible to predict the output of the NN using realtime data? I mean, data reduction is performed over a dataset. Should I create a new dataset for each new data point coming from the controller? Is that actually possible? Or is there another way? In the meantime I’m going to try a variant without dimension reduction.

After quick tests I can tell that dimension reduction makes the convergence of the NN much slower. Also, without this reduction, averaging accelerates the convergence too. However, as my question about realtime dimension reduction remains, I can’t test the NN with dimension reduction, but the NN without dimension reduction performs well so far.

One issue to solve is to keep non-significant gestures from being identified as significant ones (this is the very reason I’m giving this classification a try, actually). Let’s try to create a new class in which I’ll put ambiguous gestures…

Yet another question: what’s the best choice?

- Predicting raw data on a NN trained with raw data?

- Predicting averaged data on a NN trained with averaged data?

- Predicting raw data on a NN trained with averaged data?

- Predicting averaged data on a NN trained with raw data?

OK there is a lot of confusion in my brain now.

- your data is clearly segregated in 2d, which is quite strange from 46d. I would expect something messier.

- the idea was not to use averaged data only, but instant concatenated with average, to double the number of dimensions

- the idea of dim redux was to help to remove redundant information (hence using PCA)

training a classifier with such segregated data should be fast… that is, if all points in a cluster are really from the same class. yes, you need to label, but your original question was how to segregate 2 classes so I would expect your data to be labeled with many examples of each gesture…

real time data will be possible indeed. a class for ambiguous gestures might be good in the training indeed…

in other words, let’s start from scratch what needs to happen, like in the tutorial on classification:

- you need many examples of each class, labelled.

(try and see, it might work there)

- if it doesn’t converge at all, 2 potential problems:

1. your descriptors are ambiguous - you generate noise instead of data that can be separated

2. your data is super noisy and complicated

if 1, you need to find better descriptors. machine learning is only as good as what you feed in

if 2, you can use PCA to try to remove noise and redundancy - the more examples you have the better it will behave. the fitting tells you how much it ‘loses’ but ‘losing’ details is the whole point of denoising too, so you have to play with it

Good news: you can test the quality of your description with a KDTree. If you fit your training set there and query a new gesture, are the nearest neighbours in the right class? If not, then you are not describing well, or your dataset is too small to represent the richness of your classes, and any further process will be bad.

so test your description first. in high dimension. that is for me the first part that seems to bail.
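The KDTree check above can also be tried offline. Below is a small Python sketch of the same test, with two loud assumptions: it uses a brute-force nearest-neighbour search instead of a KD-tree (same answers, just slower), and it holds out each training point in turn instead of recording fresh gestures. `leave_one_out_accuracy` and the toy data are made up for illustration.

```python
import math
from collections import Counter


def nearest_labels(query, points, labels, k):
    """Labels of the k points closest to `query` (Euclidean distance)."""
    order = sorted(range(len(points)),
                   key=lambda i: math.dist(query, points[i]))
    return [labels[i] for i in order[:k]]


def leave_one_out_accuracy(points, labels, k=3):
    """Fraction of points whose neighbours (excluding itself) vote correctly."""
    hits = 0
    for i, (point, label) in enumerate(zip(points, labels)):
        other_points = points[:i] + points[i + 1:]
        other_labels = labels[:i] + labels[i + 1:]
        votes = Counter(nearest_labels(point, other_points, other_labels, k))
        if votes.most_common(1)[0][0] == label:
            hits += 1
    return hits / len(points)


# Toy stand-in for a labelled gesture set (two well separated classes).
points = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
          [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]]
labels = ["grab", "grab", "grab", "pinch", "pinch", "pinch"]
score = leave_one_out_accuracy(points, labels, k=3)
```

A score close to 1.0 in the full 46 (or 92) dimensions suggests the description separates the classes and a classifier has something to learn. A low score points back at the descriptors or at the size of the dataset, before any network is involved.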

Actually two classes was my worst case scenario, I was going for 3. With 2 dimensions, 2 classes were mixed on the right side of the display. Also, I still don’t understand how to reduce the number of dimensions in real time.

Actually I have reached satisfactory results with the 46 dimensions. The “garbage” category helps a lot to put aside ambiguous gestures.

The convergence of the NN is good I think. It goes down to 4 decimals in a few seconds when pushed by a 5ms metro. It needs a few minutes to get 4 more.

I can’t spend too much time trying to improve the patch further because the premiere is approaching fast and I have many other things to do but I am happy because I have a much more robust and flexible system now. My concern is more about the lighting conditions. I bet I will have to rebuild a dataset on stage.

The code is pasted below.

Thank you @tremblap !

----------begin_max5_patcher----------

4558.3oc4cs9iaabD+ym+qfPnEnE8pv99Q+jMRLJLPgafQPyGRBB3Iw6LMzK

Pwy1IAw+s28AoNJI9XkDGJdNMMRQj5Dm42Nu1Ylc2e+E2L4t0eNY6jn+UzOF

cyM+9Kt4F2krW3lhOeyjkwed1h3stu1jkIa2F+Pxja82KO4y4tqee517xKtI

Ne16SW8vujkLK2+iKo5oD9sQXj4+.YdW6diPlhh94h+rUOtLc0hjb2Ch7zEW

+Xd4UwEWMct6gt9tO7OwJV4y0+Ey+0MI9G5jIQ+r8N+wKdg8kaOOVrNtgirT

uVIsu4+PuvJjdlULOx6RxZgSvXFxMvnkl2kl+QvjBrlvjraijtAIVibFtVNi

TGmgdhHxhWljmj8KIqhuagi+PE2690qx2l9atqQsnZSfwsQStKd0CmGnb+h0

FBtEPghXEBqLlSXkRaeDtcbvb04IyRWFuXyh3Yd0ML5XPRDFDksL1QmBXPml

Tv+TVZdq5DRhWPB4TGnr9RoP225DIex76dD+sIZ16WudaxuLONOtM9j4ENTb

mxg2DPOvmLZa7YU5wnP64tB1yHTjtH4iIYaSWupxu9MSh2roxkuoxehES9vZ

2Oj91cWJck+RncWJK4iok+8xcWMNyfJ4FH4wLGMN4yhRiv1el0ySxV8XpiT7

WzL5TPRtwgUFw6saL5Bt+X6vU4sqnDxdRLBq3D26HbEf9lIOjkNuzdgwVF28

c3U+F64eqJ+WUJYuq2lzx9RLeXc5pHQzKyyRe3ACLGgp90pQzw6n.KEE7xQV

UNV7Qr+MNVD5PwHBsJQzjJyMkpMECNmI33nyfXZl2nvtWaliQmJGiQ7VY43U

+Ztkllr2yb15kKSV4GHq9mmtZdxmqH4NX3ToyF+q.gTRHPJROhTdZJHQJBgD

JRgaDoPMgT5IWKgkVr37aKhbOxkwahPQ3HRDMhEwMFgvlOh6.1T3hnYJLCw6

17CoQfiz.vIwsa+4191FDXvkfPG.3B80BbwnD3gKg9qE3hvFB3R80BbUD5Dr

nkb7fVYlGehAenFbBGXfkZr6UI6RBqTzD3H5FbN7euFhVUErjF4pHLIv.u.V

3helBWOMWlEoqZZtLN1xd+5AxsqeLaV4ipbVBQ6yeyS1lmtJNuXxd+Xk.gre

yfFIOUxvIUYFtBfNrNJAlNHgPGB3wCbPzgBb5HH4CqQS3nC6T6BjNDfRGxfo

CNniKgRGkJ3.QFpSgLvfQF5SgLHPQFkllBiLnfQF3SgLXfMnvOExfCpELVPd

VvMppTbQ+U5szLa+Jo2m1ZcYdpDD9rZJjcTurcETX+vQn0jP8cYApkv39SeF

m4Z43Kiya2jktJ+9n4IlgsjEw2krHxVth+ZZWSNG63Fo1OoSV.YdFexYWTLf

Yd9tGyyMxHsy0TSD8FcHgR4XWV4q8ISy2mFZrvc0CJUJSWOAL6W9t8Eel+3x

MclEGmfhP4L2P0WxTgZBxFxJTDrxzl0lODlxDQRfWYBON.o+gg+TcolIHV0L

ImAmLS6oIM0la9ABQd72RsoU.ElsGIAWeKhbJfBsdPo8bg5Lsb6Su26XzEZo

onCfDbEXRMrATGJnBWgwLm4UsFtRWQNoBWQGZHP5CjEiPJ3pd2Uq1csoSjkD

OuK4CJxEQIW6MkRAPofqGPsh1vipMTTm.BiWeSE0C.h.MR.j.DPXJm6DAl.m

7g7Yj7QAdvETvDO3pmOhGDeu2Ups.h3gXbDXpK38n3OljYvq4tl1aamNeHRm

SGAy2AVT7kD4dSNeLFXGxZDZC0JxkRhv3cr6Mc.pJzSMfTr.EVQBuRBItDlz

sTBuXNvkUnmBhTBZvkRBjs8cIjPCQUPYinFRnb9+wymWMSZQODmc2A1fqspw

9X349t3DKkPjE.Fe.s1VFobcf02+tW8l2F8eeaz6d0OzkbjuwnkHlucDb3RO

GfOcnrwlGcWDqytuv6tE4bm3aCpyUPnIEGhHnI6OnSxeSVx7zY49Lm8W5tMK

HNOOxho.CQrZ5QRpEuewioymtHcaN4tGuOTfwmpHPLjnaOUHFh7daMdFH34g

XiGotSgVIvHJrzR.IwhjQR38ml1TQZTjdPADkIk5YnxTItPDfoKozOC0kJvE

gVAlpjBMRhU46d2q+127MeuMTkZhXw1DAyV+nOifzN5XduuJA0WCd+5yP1qg

xHv8YjL4qe3gEIAJKPz9bMV9ZOpivwWTYQGx54XHrr0Q7Nybu1aQA4jHXfTj

q.JnSuhJAIsvEEQmnAqH5DzWYRKkXlW2BDgkPlPvfE6x8oUyxRR7lx7wE49P

f4cYmNFqv8DFh7WNRB2yBYYwe57QqRYLeysf0HHJMx3pSMNLSMF6WI4ueWez

0DRoQCPlZ3ibrZ6lEw+ZRWEPP5wncPEGDnhONfJatcncUfIB741gytN41oCn

gzYa+H7PCAPngNJglNWbeZZEngylZ2RUPbA19uTIF.fhL5.J2LSYcu107ZXL

kKxRthOEyHJIUioHlVgImuWMVSfEN70x101tck8UolQPVUi1gDt4YTIhwUpU

KhQ5KmVHSE.kukyLtrgvjEiMdJwUdzLC9j0o8JbgnCsv6OHUvncu9NBEFSTU

2CrZtQi7cuJq.BPP.AX0EMO2C1WmFTaRUaO5Clpm6VaCDe4XuRGm.RSSqGGF

ob4WLIysN2wH6+KTwOeWQhwA.O7S1uGo6tnthqugNnpEAhQT+DeMQIL0se0I

EDhlIEZJ.FsvAEV0.68qHcAeo9btDplH2O4NjFDq8jwiWPeEY9Rslshd413k

a1FwDcAaDebpDr20.kCBr0dTV2uXcb9f6FX+BvE8xJqixyxU.y2IZE15LJvP

3JfLpp38usHJK4gPMv4WLDTEDcfEVLvJlsUotr3O4hoH59r0Ki9Ou9UeWzey

JmsMZ88FUxHm791+dmMbj2g.op9YeuefImLjJaayis1lduAKVm8q1XHBL24j

hMWvKqoEaZAGQtFs2YHguSzE6cdbvhdmPwecD89tpNDj05BjkWtYUhAvXsI9

smowsWJ24Wd0vD1NgndNG1dADQKV2WCPT6jNR0.LQs2Z2yZLjGY9+4uOIpnO

uRlG4xZ0zF6MERGU0xm9JMoXmwkVtjO5S2dZ1jAChRh9oIIYYqy9oIQw2aUC

uOM2Dn4CNXyElP7p4tO3y224hbb4dHmOeo8NxwmLXURtyLDq7ls09d3.STPz

PBnQREP8K3Aa80q1Rkg0STJeUHHHJ.KOFBRdEhepMQmhjtFxNuXwJKyWMT7A

3h8G7COZlU38oy1s+5f6QQq87.V8TBfr6TB3Z2PGFBAIPntJnLWg7BZ99TU.

hlndLUqlkK17zVdzWrSpY97jU1tQHaqc6SOdVd5G8YS.G8RqD4pLaoCQSMeb

4ZqGiGW5+zGiWjN2+UCrgWUBjuSrfHcMD7HZGC8fTCdXuEEXX9ESkVpfH6VJ

w3KofGLanSNefE6l+DDCDD64V9.OsIWVLuoh2HTADYBTpe1kIvx4L488BSh.

kCsx3ktV+KVb6LehtfYs9ydNs2XPnEKXL3.DA4OamlHpN43Q8gHhOMcPhOz9

DeHCL9TzXdPhOrP5K+q0VzTHXTQecBIFw6aLhMvXTQaACIFI5aLhO3lpAGij

8MFIFXLR3yAIfXjF0m1qkCL9nnfiO59DeTC9gFFQA9wqVH68lmBHoG7fhzBv

AIbOCRXzPuyLpjX32YF2O+nmU7gfbfnfC9.uv1e2.dPSD74LwQew0Yy86S6H

XHsP2W+A8.RIzy3.WCHNv.TvzlpkAOLLzFcTHdqC8T1AMJzwvC8vD6TkffAf

HgiPfdJDQBWaGTAFa+jD1oxjiN1WBeY57hbgWbjQvr6K31lekQn19fAy0bMh

orsh.FU1YrGbygzPlq+YB6Hbg.43+te8.NJYvihweOEGz3uYjVIXJARPjTJi

K36x2eM2aP8UD7vecNYOjcItyF.NSMEKEHphHURJgwz1lTUPa3lCpe6v4WFK

X9UOUwoXk1LAMoVyoLyEozFtW8rK4Zyt0DmRCrqQnlPoHhfg3HsTik1oNPa3

d0ytzqL65plePrqx7lVoHJr8HZxtCbbvEGR0UUvg1Qf7noamI3tw4gNvbWeW

El+aDcviLGEbPNfNwJTvw7gHPNCA8opsBiRUvm2iDHiDWEbVI3PNsMUvdL3P

drSpBd5QbHEQUjPEQQPFcrfLJzT35SMXQXPCznfL3xQQdV3pwAYHFE4yfORR

qhSFMnvP3ZvoCz0mNTgeXWCY5czgJdngz4llNJLmqIAm5OAfGsypP8pnfzEq

RMNBFMT8UEjpqhwQ3FgNaIEjlL3iBrfD5PhXbLueht4IWStxDmaOg6.mQGj7

mh7YwjxoTtRvXHECIoBD0txeQnohJ6acBsp1u5PlZg5.7qQfOjvqehDRKX0k

full4Fn5sHU+kRRt1k7QJdplpIDIWgQDlM+qk4k7naAFaEN7pORf.ZcgfyAn

acr1DwAUBJCE3.c9IgZs.xzH6VVJA5NAT5fKtfhBMa8h0Y9uiqhd9kPawKso

MqwXeIhP1ZlHEZjPP0JsfVo7QGcOvv.90.CjkkPCMkiPTk.SYHBQwj6gAGbO

vvffSdfrypkwntZ+RYFKxXa.AbJQnPH9thkc7sFzZQ3XVbvgK09.N1OpKQXs

.24nNiIc.ffOkI0LhIFJBVSjNAeV8255OliCdL2H3hkLgcrEqXBDR8TAvq4l

Cam8Q6OYbpj6YI0TiMTAkn0JBWKopmDxO9dCqTNsejxKDvEbaa5nHXgTQIjm

rz0JL4CNiJzSIBlYTmR4bkjSwUj2O5dfIvS6OA9cB.iYAdROJviHdVRLkZML

IIZtfIDBYEA9it2vJvS5Ky5LgjvjbiAcN0LtcBd0sJ8jC2E7oRlUNvhIFG4Z

Nxp9TJ9e78.S7mzih+khCiYwebn8gKGzIbTSNWZhN3fSGzfhyEd5Hr3sgrTD

tSo4.yZFn8cK6pL+CLFsaRXTlTvE1t7pxLOJuHXrcvQgy.s8qYzqA7uCnEF+

EZE27enYBNgKtsk6AVq+J5uHE33ho+YhYDaL7KqNuucWaXa0WQOFJLN3gXhe

tdbitDRw0TI+I29Ut30ePEG7fpQxj3GRq5kmz7HJBv0LQXqUAPWHa3wQeAsa

EpDvXMrKbsfk4HftVFPgFqqFzEBF5jLqh6XJ3nvlzsfh8Fa4SoRDkioXMAgI

Z6TQ7Ycrl6Mjcqt9z5B2AsDJASaJTyjF45RZ5qTymGzP5oQZEZl9q3d0StNh

ox5B2SbGbv+l7476dLO2778OtZ2GXProV+YZlwOlaOalYOF6Ly+msa+.s51r

+AaqnQu8sk+30slwqeKSc+ip.xSTWia15MuMp9zFgMHnig0cniDcVnS7hEWJ

7nuNvy96aYU12cShm2BfYWeL1IRo8XywGPA0tUkU+1uP8mpQMt+jchL39aS0

U1hpe2qdyasmn7u5+8528p+8q+1V3VaNtbrqp7.YfcjDQ.i6n53W+IF+IxTc

JPKDHOA6OOV7GxR1WOE5stwG54oFWYKsrmF+dHN6tJBt0gAbtGCX9cCUZuMl

IlziLx28tW+su4a99n28penNww51KzqeGjG641hiP5hCxU5kytTz4vt6cbzW

qIDcgHpxetppKe8hEQwmkH546goKNEa2O+qXszevUZe8hYU1vxpMICuMYgga

ihmMyDkiaS9ecT5by2ztMQOO5ASHROl0FDwjNsSkeGzpbGauGzUoRPruxkL+

FRcOadkIGXyqcxoLkFFNkNRbjbv4Nesi1BOFvn8qiDNoOcjr+gBe8ikROevp

bvJ0G7AuO4iJGAFMsMS6cqSqXLsO3BEHlJXHILghIv8jFjiP1e+2xy4GN83B

9ul7PsW+X1XeIuWoPNHCGGA6m1Cmz4CuRwsN+mEcuZizX5brTj9heV3feVpK

8YseSq15yRdw7EI3mE+ReVLYvOK1E+rnA+rnW5yRDtrA4ReV6m7+VeV3K9YE

trwkpKeBCWhKkszCm1EJDIdwQ3m2GP7lMeLIaawW18PLd69fOA85aceLck+i

NGLSxR9XZ42W5tRblwCXtw8mMheqypOK7yRYxx0FC9qdLsvlug8LORmmzUF2

Wa2D64DmC2W7Gu3+CgtCQbC

-----------end_max5_patcher-----------

There’s an error in the code I’ve posted in the previous message. A cable was missing so the number of dimensions should be 54!
