And for the final bit of testing (or at least the last of the things I have to test for now), here is that comparison.

////////////////////////////////////////////////////////////////////////////

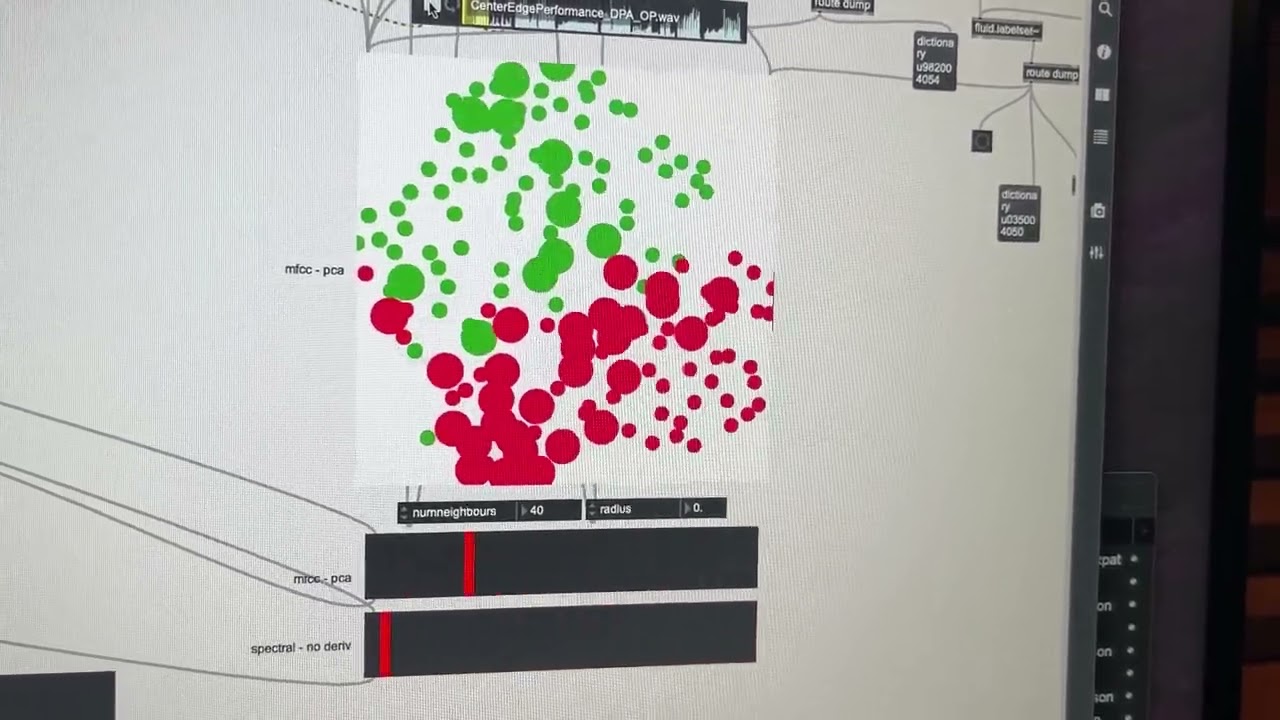

So this is 31d PCA’d MFCC (raw/unnormalized) on the top and 28d spectralshape on the bottom:

Pretty close, but I have to say I think the MFCC is doing a bit better here.

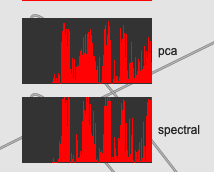

Here’s the time series comparison:

The “dynamic range” of the spectralshape is a touch better, but the smoothness of the ramps looks better for the PCA’d MFCCs.

Given the reduced “dynamic range”, I wanted to try and see how these actually look if I scale/normalize the range a bit.
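For anyone wanting to try the same thing outside of Max, here is a minimal sketch of that kind of range scaling. It assumes a plain per-dimension min-max rescale to 0..1, which may not be exactly the scaling used for the plot. The function name and the trajectory values are made up for illustration.

```python
def rescale_per_dimension(frames):
    """Rescale each dimension of a list of frames to the range 0..1.

    Assumption: plain min-max scaling per dimension. A dimension that
    never changes is mapped to 0.0 to avoid dividing by zero.
    """
    columns = list(zip(*frames))          # one tuple per dimension
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    scaled = []
    for frame in frames:
        row = []
        for value, lo, hi in zip(frame, lows, highs):
            span = hi - lo
            row.append((value - lo) / span if span else 0.0)
        scaled.append(row)
    return scaled


# Made-up two-dimensional trajectory: the first dimension has a much
# wider range than the second, as with the raw descriptor plots.
frames = [[-4.0, 1.0], [0.0, 1.5], [4.0, 2.0]]
scaled = rescale_per_dimension(frames)
print(scaled)
```

After this, both dimensions span the same range, which makes the shapes of the ramps easier to compare by eye, at the cost of hiding how big the original ranges were.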

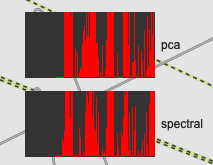

That gives me a time plot that looks like this:

I then paired that with a more contextual assessment: which sounds better? Or rather, which has a smoother trajectory when sonified in this way?

Here are the results:

If I close my eyes and just listen, the PCA’d MFCCs sound a lot smoother in the transitions. The spectralshape one, although it sometimes looks a bit smoother, seems to jump between values or stick to them more.

////////////////////////////////////////////////////////////////////////////

The effectiveness of these PCA’d MFCCs made me wonder how they stack up in terms of classification accuracy. This is something I had actually tried years ago and got absolutely dogshit results, but as outlined earlier in this thread, I think the normalization post PCA-ing was just breaking the relationship between the MFCC coefficients.
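To make that hunch concrete, here is a toy sketch of how per-dimension scaling after PCA can change which point counts as the nearest neighbour. It assumes standardisation (zero mean, unit variance per dimension) and Euclidean distance; min-max scaling would have a similar effect. The points are made up: the first dimension stands in for a high-variance component, the second for a low-variance one.

```python
import math
import statistics


def nearest(query, points):
    """Index of the point closest to query (Euclidean distance)."""
    return min(range(len(points)), key=lambda i: math.dist(query, points[i]))


def standardize(points, query):
    """Scale each dimension to zero mean and unit variance.

    The statistics come from points only; query is scaled with the same values.
    """
    columns = list(zip(*points))
    means = [statistics.fmean(c) for c in columns]
    devs = [statistics.pstdev(c) for c in columns]

    def scale(p):
        return [(v - m) / d for v, m, d in zip(p, means, devs)]

    return [scale(p) for p in points], scale(query)


# Hypothetical "PCA space": dimension 0 varies a lot, dimension 1 barely.
points = [[-10.0, -0.5], [10.0, 0.5], [1.0, 0.5], [3.0, 0.0]]
query = [0.0, 0.0]

raw_nearest = nearest(query, points)
z_points, z_query = standardize(points, query)
z_nearest = nearest(z_query, z_points)
print(raw_nearest, z_nearest)
```

In the raw space the closest point is the one that is near along the high-variance dimension. After standardising, the small offset in the low-variance dimension is blown up to the same scale, and a different point wins.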

So I plugged this PCA-ing into my accuracy test and got the following results (inserted into the data from earlier today):

4 classes:

- pca’d mfcc: 97.22% (my best results so far!)
- mfcc baseline: 95.8333% (my previous “gold standard”)
- spectral baseline: 87.5%
- spectral no loudness: 87.5%
- spectral no deriv: 88.88%

10 classes:

- pca’d mfcc: 87.5%
- mfcc baseline: 86.11%
- spectral baseline: 66.66%
- spectral no loudness: 66.66%
- spectral no deriv: 72.22%

A slight improvement, but an improvement nonetheless. And this is using fluid.knnclassifier~, whereas I got better results using fluid.mlpclassifier~ before.
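For reference, the general shape of this kind of accuracy test can be sketched in Python. This is not the Max patch and not how fluid.knnclassifier~ is implemented internally; it is a plain k-nearest-neighbour majority vote with Euclidean distance, and the function names, labels, and data points are all made up.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Label chosen by majority vote among the k nearest training points."""
    order = sorted(range(len(train_points)),
                   key=lambda i: math.dist(query, train_points[i]))
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def accuracy(train_points, train_labels, test_points, test_labels, k=3):
    """Percentage of test points whose predicted label matches the true one."""
    hits = sum(knn_predict(train_points, train_labels, p, k) == label
               for p, label in zip(test_points, test_labels))
    return 100.0 * hits / len(test_labels)


# Toy two-class data (hypothetical, standing in for reduced descriptors).
train_points = [[0.0, 0.0], [0.2, 0.1], [0.1, 0.3],
                [2.0, 2.0], [2.2, 1.9], [1.9, 2.1]]
train_labels = ["kick", "kick", "kick", "snare", "snare", "snare"]

# The last test point is a "kick" that sits close to the snare cluster,
# so it gets misclassified.
test_points = [[0.1, 0.1], [2.1, 2.0], [0.3, 0.2], [1.6, 1.6]]
test_labels = ["kick", "snare", "kick", "kick"]

result = accuracy(train_points, train_labels, test_points, test_labels)
print(f"{result:.2f}%")
```

Because the vote depends entirely on distances, anything that changes the relative scale of the dimensions (such as the normalization discussed earlier in the thread) feeds straight into the accuracy figure.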

////////////////////////////////////////////////////////////////////////////

So it seems that overall, PCA’d MFCCs capture the most variance here and still capture good transitional/interpolation states. The spectralshape stuff was very promising (though I did dread having to add a whole new descriptor “type” to SP-Tools), but not quite as good as just (further) refined MFCCs.