Hello, I’m pretty new to the FluCoMa tools and simply overwhelmed by the possibilities.

I would like to sort longer tonal/beat recordings rhythmically, pitch-wise etc. The result should be displayed in a y/x diagram using dots, for example, but should also be playable. The closer the points are to each other, the more related the corresponding recordings are.

I believe that IRCAM has implemented this quite well in SKataRT as an M4L patch.

Is this also possible in FluCoMa and, if so, where should I start when patching?

Thanks in advance for any tips!

Hello and welcome!

Yes indeed, the possibilities are huge! So I have a few questions for you, to help you get to the next step:

- If SKataRT has implemented what you want quite well, what is your motivation for doing it again? In other words, is there something it doesn’t do the way you want/need?

- Similarity is in the ear of the (contextualised) beholder, so words like ‘longer’, ‘tonal’ and ‘beat’ are abstract for a machine. There are machine listening tools that produce a stream of numbers modelling some abstraction of an approximation of a human listening parameter, but how you care about them is what makes it fun and challenging. I pointed to a few of these relative questions elsewhere on the forum, but here goes a new list for you, with only one item:

  - Is a 2 bar |CCCC|GGGG| nearer a 1 bar |CCGG|, a 1 bar |CCCC|, a 2 bar |DDDD|GGGG|, a 2 bar |CCGG|CCGG|, etc.?
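To make the bar question a bit more tangible, here is a rough Python sketch (not FluCoMa code, and not how any fluid object works internally). It compares the same patterns under two toy descriptions that I am making up for illustration: a time-averaged pitch-class histogram, which is a very crude stand-in for an averaged chroma, and a beat-by-beat sequence looped to 8 beats. The function names and the single-letter note parsing are assumptions of this sketch.

```python
import math

# Toy assumption: one letter per beat, naturals only (no sharps or flats).
PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def parse(pattern):
    """Turn '|CCCC|GGGG|' into a flat list of note names, one per beat."""
    return [ch for ch in pattern if ch != "|"]


def pitch_class_histogram(pattern):
    """Average the pattern over time: which pitch classes occur, and how often."""
    notes = parse(pattern)
    hist = [0.0] * 12
    for note in notes:
        hist[PITCH_CLASSES.index(note)] += 1.0 / len(notes)
    return hist


def beat_sequence(pattern, n_beats=8):
    """Keep the order: loop the pattern to n_beats and one-hot encode each beat."""
    notes = parse(pattern)
    vec = []
    for i in range(n_beats):
        one_hot = [0.0] * 12
        one_hot[PITCH_CLASSES.index(notes[i % len(notes)])] = 1.0
        vec.extend(one_hot)
    return vec


def euclidean(a, b):
    """Plain Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


reference = "|CCCC|GGGG|"
candidates = ["|CCGG|", "|CCCC|", "|DDDD|GGGG|", "|CCGG|CCGG|"]

for candidate in candidates:
    d_hist = euclidean(pitch_class_histogram(reference), pitch_class_histogram(candidate))
    d_beat = euclidean(beat_sequence(reference), beat_sequence(candidate))
    print(f"{candidate:14s} histogram: {d_hist:.3f}   beat-by-beat: {d_beat:.3f}")
```

With these toy inputs the histogram view cannot tell |CCCC|GGGG| apart from |CCGG| or |CCGG|CCGG|, while the beat-by-beat view puts all four candidates at the same distance from the reference. Neither answer is "right"; the point is only that the choice of description decides what counts as near.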

Now I hope you don’t feel taken aback by this. These are very very concrete questions that will help me point at the next step.

So first, let me know your answers to these ideas, and then I can help a bit with the next step.

The other approach is to take the final patch of the 2D plotter tutorial (which uses MFCCs as its descriptor), replace MFCCs with Chroma, and see where that leads you. You will get a grid using fluid.grid. This technical answer is full of assumptions and is likely to bring you back to the questions above.

I hope this helps!

Hi and thanks for the welcome. I have actually only seen SkataRT in online presentation videos and have therefore only gained my information (assumptions) from these.

I have not been able to try it out yet because it is behind a paywall, but since discovering FluCoMa I suspect, or at least have the “feeling”, that there is more to it and that I can realize even more with it.

However, I have to admit that I don’t yet know exactly what this resemblance should look like. I’m quite open and very keen to experiment. I approach it with the idea of what if…

To your bar question, I would spontaneously say: 2-bar CCGG|CCGG. But the search for similarities depends on so many factors that I still want to discover different similarities. It also helps me to discover my own music, so to speak, and to go into more detail about the compositional peculiarities and find suitable transitions between the passages. In other words, I want to explore the many dimensions of my beat sound constructs and put together suitable passages. This is very useful when composing individual tracks and also at live gigs with complete tracks to refine a compositional flow macroscopically.

About me: I actually work more with Kyma from Symbolic Sound than with Max. I’m more of a beginner with the latter, as you can probably tell from my question ;) I want to record individual sequences of sounds from Kyma and reassemble them with the help of FluCoMa.

In addition, I have already discovered an interesting patch in your forum that can serve as a starting point (Visual Corpus Exploration Patch). I’ve also tried it out: I simply read in a complete album of eight tracks and quickly realised that this creates an insanely dense jumble of points in the plot that is difficult to click with the mouse. Ideally, the points representing passages could be played on/off and without overlapping. I would probably have to insert a poly~ player, wouldn’t I? Are there any tips on how I can work with this patch for my purposes? In the “make a Corpus” tab, I increased Kernelsize and set minslicelength to 5000, so I have already managed to create longer loops.

MuBu and PiPo, on which SkataRT relies, are a great toolset. The paywall is a problem indeed… but the people who coded that tool are experienced, so it is most probably not a beginner’s job. Now, I don’t know your level of experience, so I am sorry if I misjudged it from your first sentence (pretty new).

All that said, @rodrigo.constanzo’s amazing and free SPTools do corpus browsing. I don’t know if he implemented the dimension reduction (via fluid.umap) on pitch-class distribution (via fluid.[buf]chroma) and then put that on a grid (via fluid.grid), but hey, he is amazing and generous and very, VERY thorough. And it is all built on FluCoMa and M4L compatible. So it might be fun for you to try a package and then, from experience, start to modify it according to what you prefer.
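On the "put that on a grid" step: the idea is to give every point in a crowded 2D plot its own cell, so nothing overlaps and everything is easy to hit. The Python sketch below is only a naive stand-in to show that idea. It is not a description of what fluid.grid does internally, and the function name `snap_to_grid` and the rank-based method are assumptions of mine: points are split into columns by x order, then ordered by y within each column.

```python
import math


def snap_to_grid(points, columns):
    """Give each 2D point a unique (column, row) cell, based on rank only.

    Naive illustration: split the points into columns by their x order,
    then order each column by y. Returns {point_index: (column, row)}.
    """
    rows = math.ceil(len(points) / columns)
    by_x = sorted(range(len(points)), key=lambda i: points[i][0])
    cells = {}
    for col in range(columns):
        column_ids = by_x[col * rows:(col + 1) * rows]
        column_ids.sort(key=lambda i: points[i][1])
        for row, i in enumerate(column_ids):
            cells[i] = (col, row)
    return cells


# Three points crowded into one corner plus one outlier (made-up data).
points = [(0.10, 0.10), (0.11, 0.12), (0.12, 0.09), (0.90, 0.90)]
for index, cell in sorted(snap_to_grid(points, columns=2).items()):
    print(index, points[index], "->", cell)
```

The trade-off is the usual one for gridding: the layout becomes evenly spaced and clickable, but distances on the grid no longer reflect how far apart the points were in the original plot.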

(because this machine-listening-machine-learning-similarity-driven-corpus-navigation is better started from some clear thoughts, I think. This is the way I got the funding for it all: because of my fights with the fantastic, yet not what I wanted, CataRT.)

indeed. So how you plan to ‘package’ time (per bar, per beat, per attack) to make your dimensions to then compare is the hard bit, the fun bit, and the bit that will bring surprises. So I definitely encourage this kind of adventure!

if you use the fluid.plotter there is a zoom-in option.

the min slice length will do that. kernel size will try to find similarity over a wider span (see the learn.flucoma.org article to help, and ask questions away: I need to test the sturdiness of that material, if you don’t mind). a larger FFT size might help lose the details and help with an approximation. using Chroma instead of MFCCs will help with harmonic material (instead of spectral contour)
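For intuition about why a minimum slice length gives longer loops, here is a small Python sketch. It only illustrates the general idea of dropping slice points that come too soon after the previous one; the function name is hypothetical, the numbers are made up, and the real slicer may apply its minimum differently and in different units, so do check the object reference.

```python
def enforce_min_slice_length(onsets, min_length):
    """Keep a slice point only if it is at least min_length after the last kept one.

    onsets and min_length share the same arbitrary unit in this sketch.
    """
    kept = []
    for onset in sorted(onsets):
        if not kept or onset - kept[-1] >= min_length:
            kept.append(onset)
    return kept


# Made-up candidate slice points.
candidates = [0, 1200, 5200, 6000, 11000]
print(enforce_min_slice_length(candidates, 5000))
```

Fewer, more widely spaced slice points also mean fewer dots in the plot, which helps with the density problem.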

I hope all of this helps. keep on asking questions if it does.

p

Thank you very much for the helpful advice. I will try out the SPTools straight away.

If I have understood you correctly, you would like to test the audio files that I use for my experiments with FluCoMa. Well, nothing could be easier. These are a few tracks that I have published on Bandcamp. You can download them there for free.

Just one more question, I would like to trigger the sound points of the plot with Mira on my iPad. But that’s not possible, is it?

Today I can no longer get the “2D Sound Browsing” patch to work. It takes quite a long time and Max sometimes freezes. Furthermore, I no longer get a plot display. I am using the same tracks (audio files) as yesterday. I honestly don’t know what should be different today. Yesterday the patch worked pretty well.

What could it be?

what is the max window telling you when you open the patch?

Usually that helps - there was a change of interface more than a year ago. The patches and tutorials have been updated but you might have an old version of either the package or your code…

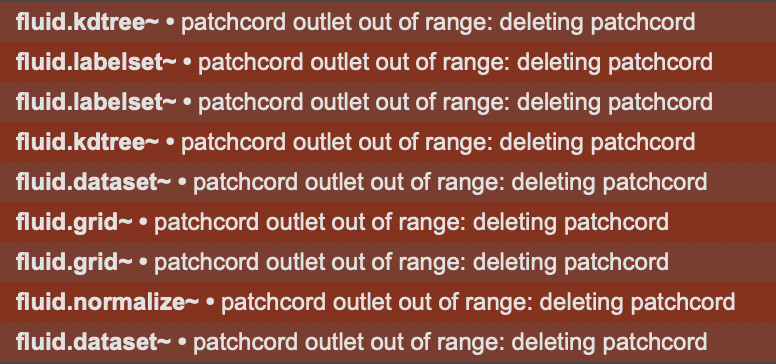

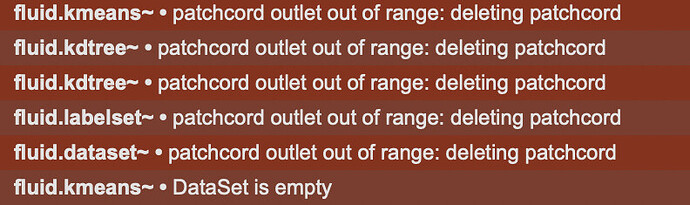

There are actually a few error messages (see screenshots). It seems that Max has removed a few patchcords.

How can I proceed from here?

you have an old patch: the interface changed about a year ago. In effect, check all fluid objects and you will notice they don’t have 3 outlets anymore…

you can also download the finished (corrected) patch from the new tutorial here or watch the videos again.

Thank you! That works well for me now.