Hi all, a FluCoMa newbie here. I tried to do something similar in SC, playing all the neighbours of a clicked sample sequentially, but I hear very degraded sound quality. What did I do wrong? Thanks for any hints!

best,

Alisa

s.options.numBuffers = 1024 * 15;

s.reboot;

(

// CONFIG

// ~folder = "/Users/alisakobzar/Desktop/string_orchestra/Strings/Violin/".standardizePath; //1636 files

// ~files = PathName(~folder).pathMatch("**/*").select({ |f| f.extension == "wav" });

(

~getWavFilesRecursive = { |dir|

var dirPath = PathName.new(dir);

var subdirs = dirPath.entries.select(_.isFolder);

var files = dirPath.entries.select { |f| f.isFile and: { f.extension == "wav" } };

subdirs.do { |sub| files = files ++ ~getWavFilesRecursive.(sub.fullPath) };

files

};

~folder = "/Users/alisakobzar/Desktop/string_orchestra/Strings/Violin/".standardizePath;

~files = ~getWavFilesRecursive.(~folder);

"Found % files.".format(~files.size).postln;

~files.select({ |f| f.extension == "wav" });

);

~superbuf = Buffer.alloc(s, 1, 1); // Will grow later

~offsets = List.new;

~durations = List.new;

~corpus = FluidDataSet(s).clear;

~reduced = FluidDataSet(s);

~pca = FluidPCA(s);

~ids = List.new;

)

// Merge and analyze

(

~totalFrames = 0;

~tempBufs = List.new;

)

// Step 1: Load and record lengths

({ ~files.do { |file, i|

var frames;

var buf = Buffer.read(s, file.fullPath);

~tempBufs.add(buf);

s.sync; // wait until the server has finished reading, so buf.numFrames is valid

frames = buf.numFrames;

~offsets.add(~totalFrames);

~durations.add(frames);

~ids.add(i.asString);

~totalFrames = ~totalFrames + frames;

("done"++ i.asString).postln;

}}.fork;)

// Step 2: Allocate merged buffer

(~superbuf.free;

~superbuf = Buffer.alloc(s, ~totalFrames);)

// Step 3: Compose all into merged buffer

(~tempBufs.do { |b, i|

FluidBufCompose.processBlocking(s, b, destination: ~superbuf, destStartFrame: ~offsets[i]);

};)

// Step 4: Extract MFCC from each segment

({(~files.size).do ({ |i|

var loudnesses = Buffer(s);

var maxloud = Buffer.alloc(s,1);

var mfcc = Buffer.alloc(s, 13);

var entry = Buffer.alloc(s, 15);

var start = ~offsets[i];

var dur = ~durations[i].min(2048);

var centroids = Buffer(s);

var meancent = Buffer.alloc(s, 1);

FluidBufMFCC.processBlocking(s,

source: ~superbuf,

startFrame: start,

numFrames: dur,

features: mfcc,

numCoeffs: 13

);

//

FluidBufCompose.processBlocking(s, mfcc, destination: entry, destStartFrame: 0);

FluidBufLoudness.processBlocking(s,~superbuf,start,dur,features: loudnesses,select: [\loudness]);

FluidBufStats.processBlocking(s, loudnesses, stats: maxloud, select: [\high]);

FluidBufCompose.processBlocking(s, maxloud, destination: entry, destStartFrame: 13); // slot 13: peak loudness, after the 13 MFCC slots

FluidBufSpectralShape.processBlocking(s,~superbuf,start,dur,features: centroids, select: [\centroid],unit: 1, power: 1);

FluidBufStats.processBlocking(s, centroids, stats: meancent, select: [\mean]);

FluidBufCompose.processBlocking(s, meancent, destination: entry, destStartFrame: 14); // slot 14: mean spectral centroid

s.sync;

~corpus.addPoint(~ids[i], entry);

("✓ added sample %".format(i)).postln;

});}.fork;)

// // Step 5: PCA

// ~pca.fitTransform(~corpus, ~reduced)/*.whiten*/;

// s.sync;

// ~reduced = ~corpus;

~corpus.print

~reduced.print

// ~corpus.write("/Users/alisakobzar/Desktop/string_orchestra/violin_corpus_dataset.json")

// ~superbuf.write("/Users/alisakobzar/Desktop/string_orchestra/violin_corpus_superbuf.aiff")

//umap

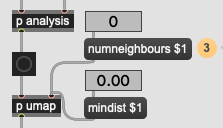

FluidUMAP(s,2,numNeighbours:10,minDist:0.1).fitTransform(~corpus,~reduced);

FluidNormalize(s).fitTransform(~reduced,~reduced);// normalize so it's easier to plot

~tree = FluidKDTree(s).fit(~reduced); // use a kdtree to find the point nearest to the mouse position

(

SynthDef(\sample, {

arg id, start, end;

var sig = BufRd.ar(1, ~superbuf, Phasor.ar(1,BufRateScale.ir(~superbuf), start, end));

Out.ar(0, sig * EnvGen.kr(Env([1,1,0], [((end-start)*SampleDur.ir)-0.01, 0.01]),doneAction: 2));

}).add;

)

(

~reduced.dump{arg dict;

{

var query = Buffer.alloc(s,2);

var oldval = -1;

~fp = FluidPlotter(dict: dict, xmin: -0.1, xmax: 1.1, ymin: -0.1, ymax: 1.1, mouseMoveAction: {

arg view, x, y;

// [x,y].postln;

query.setn(0, [x,y]);

/*~reduced*/ ~tree.kNearest(query, 10, {|rep|

var id, idx = 0, start, end;

// rep.asInteger.postln;

id = rep.asInteger;

idx = id;

start = ~offsets[idx];

//

end = start + ~durations[idx];

if (rep != oldval) {

idx.postln;

~files[idx].postln;

~fp.highlight = idx;

// ~playslice.(rep.asInteger);

// ~playslice = Synth(\sample, [\id, idx, \start, start, \end, end]);

~pattern = Pbind(\instrument, \sample,

// \id, Pseq(idx, 1),

\start, Pseq(start, 1),

\end, Pseq(end, 1),

\dur, Pseq(~durations[idx]/44100 + 0.1, 1),

).play;

oldval = rep;

}

});

}).pointSizeScale = 0.5;

}.defer;

}

)
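One thing that might be worth checking (a guess on my side, not a confirmed diagnosis): the BufRd help file notes that its phase input is only precise for addressing up to 2**24 samples, about 6.3 minutes at 44.1 kHz, and ~superbuf holds every file back to back. A minimal sketch of that check, assuming ~totalFrames has already been filled in by Step 1:

```supercollider
(
// UGen signals are 32-bit floats, which represent every integer exactly only up to 2 ** 24.
var limit = (2 ** 24).asInteger; // 16777216 frames
var total = ~totalFrames ? 0;    // assumes Step 1 has finished counting frames

if(total > limit) {
	"superbuf: % frames, above % (index precision may suffer)".format(total, limit).postln;
} {
	"superbuf: % frames, within %".format(total, limit).postln;
};
)
```

If the total is above the limit, reading each sample from its own buffer in ~tempBufs instead of from the merged buffer might be one way to sidestep the problem.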

as well as being able to drop in a folder of audio files to fill up a polybuffer~ for the corpus.
