I remember looking at this back then, and although I don’t remember the specifics, I remember not finding it useful for what I was doing.

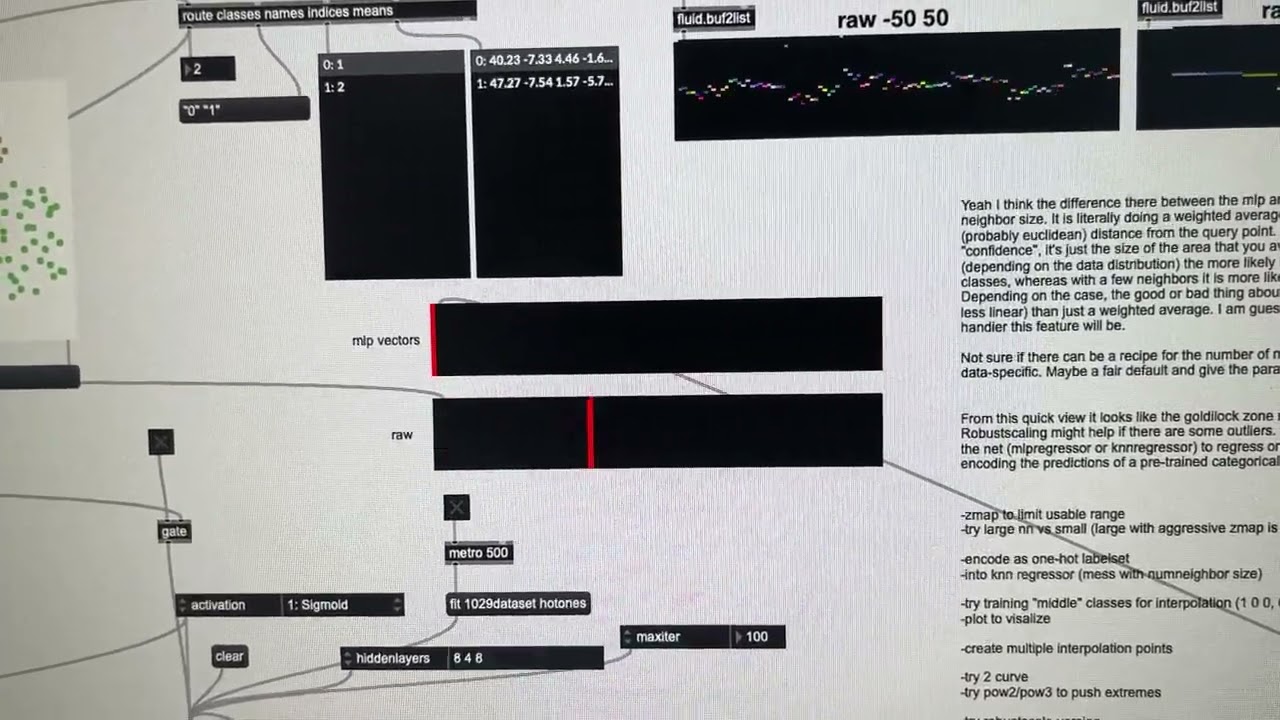

So I loaded it into the current patch, with the current sounds encoded just as they were in your patch, using a dataset/labelset pairing (as opposed to the mlp example above, which is dataset/dataset so that it has the “one-hot” vectors). And I get this:

(In effect, the mlp-based stuff doesn’t seem to be able to actually communicate confidence; it just flip-flops between 100% this and 100% that.)

When I did a ton of testing with this stuff in the past (LTEp), I found that it worked well for getting a visual representation/spread for something where you mouse/browse around à la CataRT, but it didn’t work nearly as well for classification. I never tried using purely spectral moments for this, so that’s my next course of action.

My thinking, though, is that the “one-hot” approach is only as good as the core classification that can happen with it: if I don’t get good, solid separation between the classes with the descriptors/recipe, it likely won’t work well for this at all.
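To make the “one-hot” idea concrete, here is a minimal Python sketch. The class names, the output values, and the `confidence` helper are all hypothetical, and this is not how the objects in the patch work internally; it only shows how a one-hot target is built and one possible way of reading a regressor's output vector as a per-class confidence (clip negatives, then normalise).

```python
def one_hot(label, classes):
    """Return a vector with 1.0 at the position of `label`, 0.0 elsewhere."""
    return [1.0 if c == label else 0.0 for c in classes]


def confidence(output, classes):
    """Read a raw output vector as per-class confidence (an assumption, not a library API).

    Negative values are clipped to zero and the rest is scaled to sum to 1.
    """
    clipped = [max(0.0, v) for v in output]
    total = sum(clipped)
    if total == 0.0:
        # Nothing to go on: spread confidence evenly.
        return {c: 1.0 / len(classes) for c in classes}
    return {c: v / total for c, v in zip(classes, clipped)}


classes = ["kick", "snare", "hat"]  # hypothetical class names
target = one_hot("snare", classes)  # training target for a "snare" example

# A saturated output reads as all-or-nothing, which is the flip-flopping case.
saturated = confidence([0.0, 1.0, 0.0], classes)

# A softer output would read as graded confidence (values made up for illustration).
graded = confidence([0.1, 0.6, 0.3], classes)
```

If the classes overlap in descriptor space, the output vector has nothing stable to settle on, which is why the descriptor recipe matters more than the way the vector is read.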

So there’s still some refinement to do here, I think (/hope), in terms of optimizing the descriptor recipe, but I’m also curious whether anything else can be done about how interpolation/confidence is computed here (e.g. mlp not working well and flip-flopping, numneighbours being one of the biggest deciding factors, etc…).
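As a rough illustration of why the number of neighbours matters so much, here is a small Python sketch of the simplest way a k-nearest-neighbour confidence could be computed: the fraction of the k nearest points that vote for each class. This is an assumption for illustration (made-up 2D points, unweighted votes), not the actual implementation behind numneighbours.

```python
import math
from collections import Counter


def knn_confidence(points, labels, query, k):
    """Fraction of the k nearest neighbours voting for each class (unweighted)."""
    # Indices of all points, sorted by Euclidean distance to the query.
    order = sorted(range(len(points)), key=lambda i: math.dist(points[i], query))
    votes = Counter(labels[i] for i in order[:k])
    return {label: count / k for label, count in votes.items()}


# Made-up 2D descriptor points for two classes.
points = [(0.0, 0.0), (0.2, 0.1), (0.3, 0.4), (1.0, 1.1), (0.8, 0.8), (0.6, 0.5)]
labels = ["a", "a", "a", "b", "b", "b"]
query = (0.5, 0.5)

for k in (1, 3, 5):
    print(k, knn_confidence(points, labels, query, k))
```

With these points, k=1 gives 100% "b", k=3 gives roughly 67% "b", and k=5 tips over to 60% "a". The confidence can only move in steps of 1/k, so a small k behaves much like the all-or-nothing case, and the winning class itself can change with k.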

I do look forward to experimenting with this. In my case I only have 7 frames of analysis anyway, so I imagine I could just chuck all 7 frames in rather than doing statistics on them; this would only slightly increase my dimension count while at the same time better representing morphology.
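A quick Python sketch of that trade-off, under assumptions: 3 descriptors per frame and four summary statistics (mean, standard deviation, min, max) are placeholders, not my actual recipe. It compares the dimension count of flattening all 7 frames against summarising them, and shows that the statistics lose the frame order while the flattened vector keeps it.

```python
from statistics import mean, pstdev


def flatten_frames(frames):
    """Concatenate every frame's descriptors into one long vector (order preserved)."""
    return [value for frame in frames for value in frame]


def summarise_frames(frames):
    """Per-descriptor mean, std dev, min and max across frames (order discarded)."""
    out = []
    for column in zip(*frames):  # one column per descriptor
        out.extend([mean(column), pstdev(column), min(column), max(column)])
    return out


# Hypothetical analysis: 7 frames x 3 descriptors, values rising over time.
frames = [[float(f + d) for d in range(3)] for f in range(7)]

flat = flatten_frames(frames)     # 7 * 3 = 21 dimensions
stats = summarise_frames(frames)  # 3 * 4 = 12 dimensions

# Play the same frames backwards: a different morphology, identical statistics.
reversed_frames = frames[::-1]
same_stats = summarise_frames(reversed_frames)
different_flat = flatten_frames(reversed_frames)
```

Here the flattened version costs 21 dimensions against 12, and a rising versus falling shape only shows up in the flattened vector.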