OK, this one is a doozy, and it took me a while to figure out what is happening (with a happy crash along the way). It looks quite exciting, though sadly it is not easy to test with your own sounds, since specific durations are pre-baked into the 2nd subpatch (and consequently the 3rd subpatch), so I’ll play around more with the “examples soup”.

So in terms of the final subpatch here, you’re essentially building a 12D LPT-kind of thing, with 4D per macro-feature (“loudness”, “pitch”, “timbre”). I like where this is going!

I’m having a little trouble following the dimensionality reduction stuff, and which scaling/reduction step is being used where.

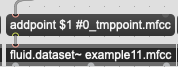

So in the 1st major subpatch, the MFCCs are standardized before PCA (I thought PCA liked normalized input? either way…). And then in the 3rd subpatch, everything is normalized (including the output of the MFCC->standardize->PCA chain). Is that correct? It’s a little tricky to follow with all the recursive object usage.
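To check my own understanding, here is the chain as I read it, written as a rough numpy sketch rather than anything taken from the patch. The data is random, and the 13 MFCC coefficients, the 200 slices, and the helper names (`standardize`, `pca`, `normalize`) are all my assumptions for illustration.

```python
import numpy as np

# Fake data standing in for the analysis: 200 slices x 13 MFCCs (both numbers assumed),
# with very different ranges per coefficient, as MFCCs tend to have.
rng = np.random.default_rng(0)
mfccs = rng.normal(size=(200, 13)) * rng.uniform(0.5, 20.0, size=13)

def standardize(x):
    """Per column: zero mean, unit standard deviation."""
    return (x - x.mean(axis=0)) / x.std(axis=0)

def pca(x, n_components):
    """Project onto the first n_components principal axes (via SVD)."""
    centered = x - x.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

def normalize(x):
    """Per column: min-max scale into the 0..1 range."""
    lo, hi = x.min(axis=0), x.max(axis=0)
    return (x - lo) / (hi - lo)

# 1st subpatch as I read it: MFCC -> standardize -> PCA down to 4D.
timbre_pca = pca(standardize(mfccs), 4)

# 3rd subpatch as I read it: normalize everything, including the PCA output.
timbre_4d = normalize(timbre_pca)
```

If that reading is right, the standardize step is there so that no single MFCC coefficient dominates the PCA just because of its range, and the later normalize only puts the 4D output on the same 0..1 footing as the other descriptors.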

Lastly, there’s the “weighting” bit at the end, which I believe has ‘clue’ text copy/pasted from elsewhere. Are you just rescaling a specific feature inside the normalized space? In this case, making “pitch” go from -4.5 to 4.5, versus everything else being 0. to 1.?
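Here is what I imagine that weighting amounts to, again as a numpy sketch under my own assumptions: the column layout (loudness in columns 0-3, pitch in 4-7, timbre in 8-11) and the `weight_columns` helper are hypothetical, and the data is random.

```python
import numpy as np

# Hypothetical column layout: 0-3 loudness, 4-7 pitch, 8-11 timbre.
PITCH = slice(4, 8)

def weight_columns(points, cols, lo, hi):
    """Map the chosen columns from the 0..1 range onto lo..hi; leave the rest alone."""
    out = points.copy()
    out[:, cols] = lo + points[:, cols] * (hi - lo)
    return out

rng = np.random.default_rng(1)
lpt = rng.uniform(0.0, 1.0, size=(200, 12))  # stand-in for the normalized 12D space
weighted = weight_columns(lpt, PITCH, -4.5, 4.5)

# Two neighbours of the same point, each differing by 0.1 in a single dimension.
a = np.full((1, 12), 0.5)
b_pitch = a.copy()
b_pitch[0, 4] += 0.1   # differs in one pitch dimension
b_loud = a.copy()
b_loud[0, 0] += 0.1    # differs in one loudness dimension

wa = weight_columns(a, PITCH, -4.5, 4.5)
d_pitch = np.linalg.norm(weight_columns(b_pitch, PITCH, -4.5, 4.5) - wa)
d_loud = np.linalg.norm(weight_columns(b_loud, PITCH, -4.5, 4.5) - wa)
```

With a range of 9 instead of 1, the same difference in a pitch dimension counts nine times as much in a Euclidean distance as it does in loudness or timbre, which is what I assume the point of the weighting is for the nearest-neighbour matching.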