I was looking over my Example 11 thread from last year and, other than it taking a while for me to re-wrap my head around all the “indices math” involved, I was struck by this workflow:

Or @tremblap’s pseudocode version:

More specifically: how much of this applies if, rather than PCA-ing a descriptor space together, you manually curate a set of descriptors/stats that you use “as is” (either using something like what @tedmoore suggested with an SVM or, more recently, @tremblap’s suggestion of parsing through the PCA to see the weights of given dimensions)?

All of that is to say, a lot of the workflow above involves standardizing->PCA->pruning->normalizing. Obviously things like loudness-weighted descriptors would still be fantastic, but if I have a fairly low-dimensional space (20-30 dimensions) made up of natural dimensions, perhaps some of this workflow isn’t necessary/desired.
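For reference, here is a minimal Python sketch of just the scaling ends of that chain (per-column standardizing and min-max normalizing), working on plain lists of descriptor frames rather than FluCoMa datasets. The PCA and pruning steps are deliberately left out since they need a linear-algebra library, the function names are hypothetical, and conventions such as population vs. sample standard deviation are assumptions rather than what the FluCoMa objects necessarily do.

```python
from statistics import mean, pstdev

def standardize(rows):
    """Z-score each column: subtract its mean, divide by its population std dev."""
    stats = [(mean(col), pstdev(col) or 1.0) for col in zip(*rows)]  # 1.0 guards constant columns
    return [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in rows]

def normalize(rows, lo=0.0, hi=1.0):
    """Min-max scale each column into [lo, hi]."""
    bounds = [(min(col), max(col)) for col in zip(*rows)]
    return [
        [lo + (v - cmin) / ((cmax - cmin) or 1.0) * (hi - lo) for v, (cmin, cmax) in zip(row, bounds)]
        for row in rows
    ]

# Hypothetical frames: [loudness (dB), pitch (MIDI)]
data = [[-20.0, 60.0], [-40.0, 72.0], [-30.0, 48.0]]
print(normalize(data))  # [[1.0, 0.5], [0.0, 1.0], [0.5, 0.0]]
```

One thing this makes visible: with the PCA step removed, min-max normalizing after standardizing gives the same numbers as min-max normalizing the raw columns, because both are per-column shifts and positive rescalings. Standardizing mainly earns its place before PCA, which is sensitive to how much variance each column has.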

At the moment I’ve been trying to build a purely fluid.kdtree~-based version of my matching, and it works well if my descriptor spaces already overlap somewhat, or if I want them to overlap purposefully (and unevenly). As in, I want to match loudness/pitch even if they aren’t the same in my input and corpus. But if I want to make the spaces overlap, I know that IQR is the way to go. I believe Example 11 predates the inclusion of IQR in the fluid.verse~, but either way, it’s a bit tricky to figure out how best to deal with the datasets/dimensions if you want them to remain absolute (i.e. pitch matches exact pitch).
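As a rough illustration of why IQR-based (robust) scaling makes spaces overlap, the sketch below centers each column on its median and divides by its interquartile range, fitting the input and the corpus separately. This is the generic robust-scaling idea in plain Python; whether it matches how FluCoMa's own robust scaler chooses its percentiles is an assumption, and the pitch values are made up.

```python
from statistics import median, quantiles

def robust_fit(column):
    """Return (median, interquartile range) for one descriptor column."""
    q1, _, q3 = quantiles(column, n=4, method="inclusive")  # 25th, 50th, 75th percentiles
    return median(column), (q3 - q1) or 1.0  # 1.0 guards a zero IQR

def robust_scale(column, center, iqr):
    """Shift by the median and divide by the IQR."""
    return [(v - center) / iqr for v in column]

# Hypothetical MIDI pitches: the input sits lower than the corpus.
input_pitch = [40, 45, 50, 55, 60]
corpus_pitch = [60, 65, 70, 75, 80]

# Fitting each side separately pulls both onto the same scaled range.
print(robust_scale(input_pitch, *robust_fit(input_pitch)))    # [-1.0, -0.5, 0.0, 0.5, 1.0]
print(robust_scale(corpus_pitch, *robust_fit(corpus_pitch)))  # [-1.0, -0.5, 0.0, 0.5, 1.0]
```

Both ranges land on the same scaled values, so an input pitch of 40 now sits where corpus pitch 60 does: the spaces overlap, but absolute pitch matching is gone.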

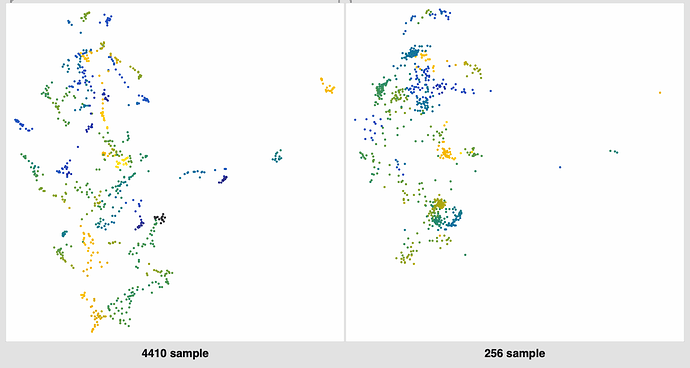

At the moment I’m mainly using the means of 20 (19) MFCCs, loudness, and pitch, as those were the most effective (natural) descriptors in my tests here, and their ranges are pretty all over the place. Since I’m analyzing/matching like-for-like, I’ve just shoved them as-is into fluid.kdtree~, and it seems to work fine, but I’m not entirely sure what I should be doing to improve the distance matching if I want to retain absolute descriptor spaces. And, as mentioned above, I’m also unsure what to do about IQR-ing things for when I would like to scale/normalize the spaces.
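One way to keep absolute matching while still evening out the ranges is to fit a single per-dimension scaler on the corpus only and apply that same transform to both the corpus and the input. The sketch below does this with a z-score fitted on the corpus and a brute-force Euclidean nearest-neighbour search standing in for fluid.kdtree~ (assuming the kd-tree is doing plain Euclidean distance). The two-dimensional [loudness dB, MIDI pitch] data is made up, and all function names are hypothetical.

```python
import math
from statistics import mean, pstdev

def fit_scaler(corpus):
    """Per-dimension (mean, std dev), computed from the corpus only."""
    return [(mean(col), pstdev(col) or 1.0) for col in zip(*corpus)]

def apply_scaler(points, scaler):
    """Apply the same per-dimension shift and scale to any set of points."""
    return [[(v - m) / s for v, (m, s) in zip(p, scaler)] for p in points]

def nearest(query, points):
    """Brute-force Euclidean nearest neighbour (stand-in for a kd-tree lookup)."""
    return min(range(len(points)), key=lambda i: math.dist(query, points[i]))

# Hypothetical entries: [loudness (dB), pitch (MIDI)]
corpus = [[-10.0, 62.0], [-30.0, 60.0], [-12.0, 72.0]]
query = [-28.0, 71.0]

print(nearest(query, corpus))  # 1: in raw units, 1 dB counts as much as 1 semitone

scaler = fit_scaler(corpus)                       # fitted on the corpus only...
scaled_corpus = apply_scaler(corpus, scaler)
scaled_query = apply_scaler([query], scaler)[0]   # ...and reused for the input
print(nearest(scaled_query, scaled_corpus))  # 2
```

With the raw numbers the query matches entry 1 (close loudness, 11 semitones away); after scaling it matches entry 2 (one semitone away). Because the same shift and scale are applied to both sides, any given pitch in the input still lands exactly where that pitch sits in the corpus, unlike fitting a separate scaler to the input. Scaling a dimension this way is equivalent to weighting it in the distance, so the same idea works with hand-picked per-dimension weights.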

@tremblap mentioned in the thread about fluid.datasetplot~ that @weefuzzy is working on some of this stuff, so hopefully this will become clearer on the weekend, but I still wanted to start a thread about it here in the interim.