I was reminded today of the old-school NMF-as-KNN approach I was experimenting with early in the process, and came across this post, which shows how effective @filterupdate 1 was at refining the filters and selections.

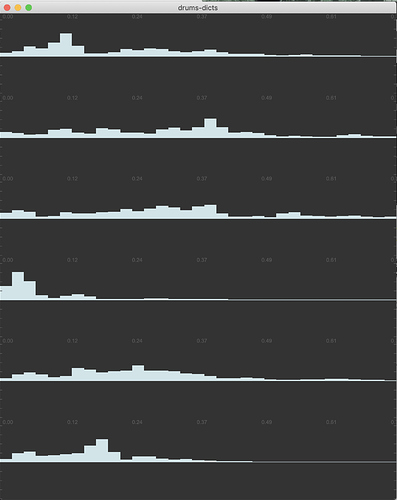

Pre @filterupdate 1:

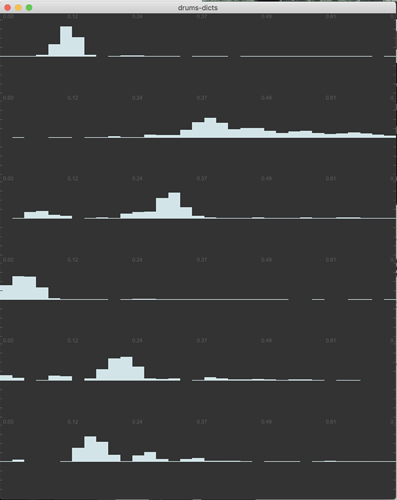

Post @filterupdate 1:

I’m (fairly) certain this is a naive question, and what I’m after probably isn’t possible given the algorithms at play, but is there a way to train some points and then run arbitrary audio through so that the selections get refined based on that input?
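One generic technique that matches this idea (a sketch, not anything FluCoMa provides) is *seeded k-means*: the trained points seed one centroid per class, and unlabelled frames from arbitrary audio are then repeatedly assigned to the nearest centroid and used to pull it towards the new material, loosely in the spirit of letting seeded NMF filters update. The function names, the "kick"/"snare" labels and the 2D feature values below are all hypothetical; in practice the vectors would be whatever descriptors you already analyse.

```python
import math

def centroid(points):
    """Mean of a non-empty list of equal-length feature vectors."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]

def nearest_label(vec, centroids):
    """Label whose centroid is closest to vec (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(vec, centroids[label]))

def seeded_kmeans(labelled, unlabelled, max_iter=20):
    """Seeded k-means: refine class centroids using unlabelled input.

    labelled:   dict mapping label -> list of hand-labelled feature vectors
                (every class needs at least one example)
    unlabelled: list of feature vectors analysed from arbitrary input audio
    Returns (centroids, labels assigned to the unlabelled vectors).
    """
    # Seed one centroid per class from the trained points.
    centroids = {label: centroid(pts) for label, pts in labelled.items()}
    for _ in range(max_iter):
        # Assignment step: each unlabelled frame joins its nearest class.
        assigned = [nearest_label(v, centroids) for v in unlabelled]
        # Update step: labelled points stay pinned to their own class,
        # while assigned unlabelled points pull the centroid towards them.
        updated = {
            label: centroid(pts + [v for v, a in zip(unlabelled, assigned) if a == label])
            for label, pts in labelled.items()
        }
        if updated == centroids:
            break  # assignments no longer move the centroids
        centroids = updated
    return centroids, [nearest_label(v, centroids) for v in unlabelled]


# Toy 2D example with two hypothetical classes.
labelled = {
    "kick": [[0.0, 0.0], [0.2, 0.0]],
    "snare": [[1.0, 1.0], [1.0, 0.8]],
}
unlabelled = [[0.1, 0.3], [0.9, 1.2], [0.4, 0.4], [1.1, 0.9]]
centroids, labels = seeded_kmeans(labelled, unlabelled)
print(labels)  # → ['kick', 'snare', 'kick', 'snare']
```

Keeping the labelled points pinned to their classes stops the centroids from wandering off entirely, but if the input contains lots of material that belongs to neither class, the centroids can still drift, so this is probably best treated as a way to nudge the classes rather than retrain them.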

Obviously, one can add more points to each classification, but perhaps something like this could help catch or fix the edge cases. (It would be amazing if there were a 2D view where the arbitrary audio it was fed is mapped, so you could go through and say “this point is A, this point is B” and have the network update accordingly.)
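The “say this point is A” loop is essentially interactive relabelling (a simple form of active learning). A minimal sketch using a plain k-nearest-neighbour classifier, rather than a neural network, is below; the class name, method names and feature values are hypothetical, and the 2D projection for the view itself is not shown. Each correction is stored as a new training example, so nearby frames immediately follow it.

```python
import math
from collections import Counter

class CorrectableKNN:
    """Minimal k-nearest-neighbour classifier that grows as points are corrected."""

    def __init__(self, k=1):
        self.k = k
        self.examples = []  # list of (feature_vector, label) pairs

    def label_point(self, vec, label):
        """Store vec as a training example with the given label, replacing
        any earlier label for exactly the same point."""
        vec = list(vec)
        self.examples = [(v, lab) for v, lab in self.examples if v != vec]
        self.examples.append((vec, label))

    def classify(self, vec):
        """Majority vote among the k nearest stored examples."""
        nearest = sorted(self.examples, key=lambda ex: math.dist(vec, ex[0]))[: self.k]
        return Counter(lab for _, lab in nearest).most_common(1)[0][0]


# Hypothetical session: two trained points, then frames from arbitrary audio.
knn = CorrectableKNN(k=1)
knn.label_point([0.0, 0.0], "A")
knn.label_point([1.0, 1.0], "B")

incoming = [[0.45, 0.45], [0.48, 0.5]]
before = [knn.classify(v) for v in incoming]  # both frames sit nearer the "A" point

# The user clicks the first frame in the 2D view and says "this point is B".
knn.label_point(incoming[0], "B")
after = [knn.classify(v) for v in incoming]  # its close neighbour now follows it
print(before, after)  # → ['A', 'A'] ['B', 'B']
```

With `k=1` a single correction takes effect right away; with a larger `k` you would need several corrections in the same region before the vote flips, which is slower but less sensitive to one mislabelled click.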