what is important is that you get discrimination between these 6ms…

So you think missing out in the first few ms would help?

edit:

As in other than taking diff analysis frames, which I’m doing already for spectral stuff.

that is what I found indeed. 2 things you can quickly do:

- audition the 6ms and see if your ear can spot the diff. if yes, first half or 2nd half alone, same question. that way you can spy on your humanly/musicianly cues

- just move it for now by a few ms to try until you get 90% - then see if the latency is a problem.

I’ll do some testing and see.

I remember doing some stuff like this for the JIT-MFCC thing, where I could hear a diff between the attacks, but I’ll try again with these samples and in this context.

I’m also using more differentiated sounds. With that other thing I mainly focused on getting differentiation between a strike in the middle and edge, whereas now I have those, rim clicks, side stick, etc… So some of the attacks are very different. Surprisingly, it gets the first two hits perfectly correct (1 = center hit, 2 = edge hit) and those sound the most similar of the bunch.

Initial tests.

On the left is the amount of offset (and added latency) and on the right is the matching percent:

128samples = 10%

110samples = 60%

90samples = 70%

80samples = 60%

75samples = 80%

70samples = 60%

64samples = 60%

45samples = 60%

20samples = 50%

0samples = 50%

I started with 90 and 45 and then worked out in different directions seeing if I can get the highest number. For some reason 75 is a sweet spot there, which is weird because it tapers off quickly on either end of that, so I wonder what’s happening there.
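To put those offsets in terms of latency, here is a minimal sketch that tabulates the numbers above and converts samples to milliseconds. The 44.1kHz sample rate is an assumption (it is consistent with 256 samples being roughly 6ms, but it isn't stated outright), and the names are just for illustration.

```python
# Offset (in samples) -> matching percentage, copied from the list above.
results = {128: 10, 110: 60, 90: 70, 80: 60, 75: 80,
           70: 60, 64: 60, 45: 60, 20: 50, 0: 50}

SAMPLE_RATE = 44100  # assumption: not stated explicitly in the thread


def samples_to_ms(n, sr=SAMPLE_RATE):
    """Convert a sample count to milliseconds."""
    return 1000.0 * n / sr


# Offset with the highest matching percentage.
best_offset = max(results, key=results.get)

for offset in sorted(results):
    print(f"{offset:>4} samples ({samples_to_ms(offset):5.2f} ms): {results[offset]}%")
print("best:", best_offset, "samples,", round(samples_to_ms(best_offset), 2), "ms")
```

All the percentages are multiples of 10, so if each setting was only tried on around 10 hits (a guess), a single hit flipping moves the number by 10%. That alone could explain some of the bumpiness around 75 samples.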

I also wanted to see what kind of results I got if I only took stats for the middle frames of everything. Up to now I’ve been taking all the loudness-based frames, but only @startframe 3 @numframes 3 (out of 7) for the spectral frames. I did @startframe 3 @numframes 3 for all descriptors with no offset of any kind and I got the same exact results as I initially did. 50%.

I’ll try an mfcc+stats approach, like in the JIT-MFCC example and see if that works better (without any additional latency).

Hah, came up with a really stupid solution…

Since the main problem with the training set vs real-time analysis is the fact that it’s going through an onset detection algorithm… I can just equalize that part of the process.

I created a fluid.dataset~ by manually playing the training examples through the same onset detection algorithm, so that when I do the same in the matching process, they should better line up in terms of phase/jitter.

And guess what… it works 100% of the time.

This kind of complicates the batch processing since it will involve doing some buf-based analysis along with some “offline real-time” analysis, but the results are great!

Actually, I wonder if I can just “simulate” this by running the offline analysis through fluid.bufampslice~ and taking whatever offset it gives as the startframe for the analysis.

@tremblap are the buf and realtime versions of ...ampslice~ exactly the same? As in, would this theoretically give me the same exact results?

(also brainstorming about the efficacy of doing this for all my offline analysis that is going to be later matched by an onset detection algorithm, as a way of “pre-computing” the offset fuckery generated by the real-time process/algorithm)
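The idea of "pre-computing" the onset offset can be sketched outside of Max like this. To be clear, this is not the fluid.ampslice~ algorithm, just a simplified fast/slow envelope comparison standing in for it. The coefficients, the test signal and the lead-in silence are all hypothetical; only the 11dB on-threshold, the -55dB floor and the 256-sample window come from this thread. The point is only that the offline file and the live input go through the same detector, so the analysis window starts wherever the detector fires.

```python
import math


def first_onset(signal, fast_coef=0.5, slow_coef=0.999,
                on_threshold_db=11.0, floor_db=-55.0):
    """Index of the first sample where the fast envelope exceeds the slow
    envelope by on_threshold_db, or None if that never happens."""
    floor_lin = 10 ** (floor_db / 20)
    fast = slow = floor_lin
    for i, x in enumerate(signal):
        level = max(abs(x), floor_lin)  # clamp to the noise floor
        fast = fast_coef * fast + (1 - fast_coef) * level
        slow = slow_coef * slow + (1 - slow_coef) * level
        if 20 * math.log10(fast / slow) > on_threshold_db:
            return i
    return None


SAMPLE_RATE = 44100                 # assumption
LEAD_IN = SAMPLE_RATE * 20 // 1000  # hypothetical 20 ms of silence (882 samples)
WINDOW = 256                        # analysis window used in this thread

# Hypothetical test signal: silence, then a decaying burst.
hit = [0.0] * LEAD_IN + [0.5 * (-1) ** k * math.exp(-k / 500) for k in range(2000)]

onset = first_onset(hit)                       # where the detector fires
analysis_window = hit[onset:onset + WINDOW]    # what actually gets analysed
```

Running the training files and the live input through the same function means any detector-specific delay lands in both, rather than only in the real-time side.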

exactly, to the point that if you find a difference, let us know: it is a bug

Ok, I’ll try adding fluid.bufampslice~ (with the same settings) to my processing chain and see if I get the same results.

Ok, my results are not exactly the same. I get 80% matching doing the offline version. This is using the same exact settings for both versions of ...ampslice~:

@highpassfreq 2000 @floor -55 @fastrampup 3 @fastrampdown 383 @slowrampup 2205 @slowrampdown 2205 @minslicelength 4410 @onthreshold 11 @offthreshold 5

Don’t know if that would be due to play~ interpolation, or some minor variance in something else.

The offsets, as per fluid.bufampslice~ range from 1-10samples, most are 2, a couple of 4s, and a single 10 as the outlier.

I pulled out the individual numbers, and they are all really close… except the stats for centroid.

Here are the two that don’t match now. The first line of each pair is the training set version (what is literally in fluid.dataset~ / fluid.kdtree~), and the second is what I’m getting from my realtime analysis.

4, -32.822884 -0.552598 2.078115 102.036583 -307.383789 41.871384 -17.910528 -2.084873 0.035767 113.151128 -738.506348 74.693971;

4, -32.846924 -0.566004 2.042863 104.02221 164.844116 66.668712 -13.322609 3.108418 1.62241 113.14137 -678.789307 76.277588;

10, -33.834335 -1.797346 2.990507 109.284359 185.458252 60.646163 -8.269015 1.910878 0.990904 116.711477 -769.298584 43.519417;

10, -33.924625 -1.846144 3.058752 107.875329 -269.849854 35.348694 -10.028451 -0.327468 0.406182 116.697243 -943.24292 -7.627497;

The entries, from left to right are:

loudness_mean

loudness_derivative

loudness_deviation

centroid_mean

centroid_derivative

centroid_deviation

flatness_mean

flatness_derivative

flatness_deviation

rolloff_max

rolloff_derivative

rolloff_deviation

So the centroid_derivative and centroid_deviation are wildly different for both. Some more spectralshape stats for entry 10 aren’t great either.

I tried removing the centroid stats from the fluid.dataset~ and real-time analysis and my matching goes back up to 90% (entry 10 still doesn’t match right, due to those other funky stats).

Double-checked my stats/frame analysis and everything looks the same. I guess derivatives and deviations are more susceptible to a difference in starting samples(?!).
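A toy illustration of why that might be, with made-up numbers. When the stats are taken over only three frames, changing a single frame barely moves the mean but swings the derivative and deviation a lot. The "derivative" here is a plain mean of first differences and the deviation is a population standard deviation, which is an assumption about how the stats are defined rather than the actual fluid.bufstats~ maths.

```python
from statistics import mean, pstdev


def frame_stats(frames):
    """Mean, mean first difference, and standard deviation of per-frame values."""
    diffs = [b - a for a, b in zip(frames, frames[1:])]
    return mean(frames), mean(diffs), pstdev(frames)


# Hypothetical centroid values for three analysis frames.
training = [100.0, 104.0, 102.0]
realtime = [100.0, 104.0, 108.0]  # only the last frame differs

for name, frames in (("training", training), ("realtime", realtime)):
    m, d, s = frame_stats(frames)
    print(f"{name}: mean={m:.2f} derivative={d:.2f} deviation={s:.2f}")
```

Here the mean moves by about 2% while the derivative goes from 1 to 4 and the deviation doubles, which is the same flavour of mismatch as in the centroid columns above.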

////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

I also need to streamline things a bit, as at the moment I’m using fluid.ampslice~ + fluid.bufcompose~ to create files which I then analyze in a separate patch. So those same audio files end up going through fluid.ampslice~ again, before getting to the analysis stuff.

So rather than replicating that by adding double-onset detection to my training set, I just need to consolidate things so the training set analysis runs off an onset detection algorithm in the same exact way as the real-time version will run.

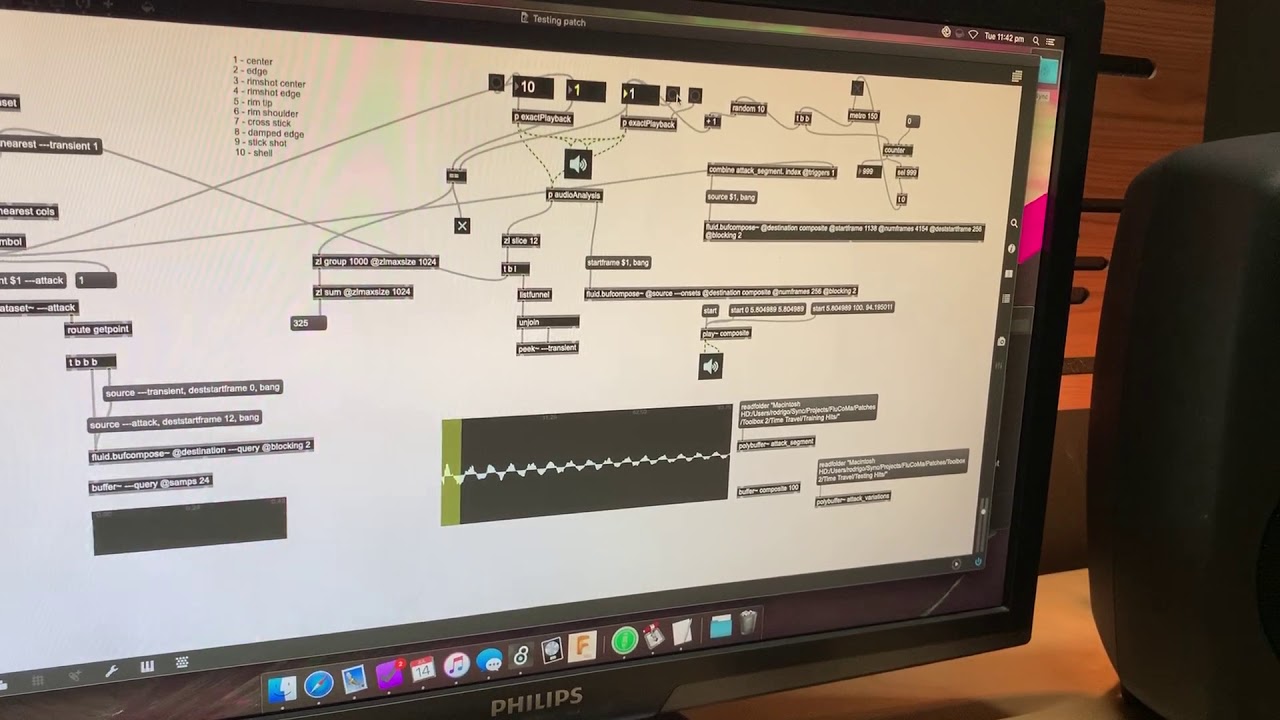

I just adapted my batch analysis patch for this purpose rather than building a new thing from scratch.

I’ve gone back and created a training set with 20ms padding at the start, so the onset detection for the offline and real-time version have some lead in before they fire, and should hopefully do so at the same exact time.

This gives me 100% accuracy (again), and lets me have individual files for testing/training/refining.

Now that this part of the patch is working, I need to make a training set with named hits, and try “real world” variations to see how well that tracks (i.e. having a training point that is “center of snare”, and then play a new version of that, to see if it identifies it).

And for my last trick today, I built this. Created another training set with specific attack types for each and tested things. Get 100% matching when running the training set back into itself.

I also created a testing set of samples, where I played 5 variations of each attack type and fed that in. The accuracy here varies greatly by attack type, as some are more easily distinguishable than others, but after running it for 1000 random examples I got an overall accuracy of 32.5%. So fairly dogshit. As I said, it varies by attack type, and some of the confused attacks actually sound like each other, but I’m going to take this as the number to try and optimize with these training and testing sets, to then generalize out from there.
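For reference, the testing loop boils down to something like the sketch below: pick a random labelled test hit, find its nearest training point, and count whether the labels agree. The data is a made-up 2D toy, the nearest-neighbour search is brute force rather than a fluid.kdtree~, and the function names are hypothetical. Keeping a tally of (true, predicted) pairs also shows which attack types get confused with each other.

```python
import math
import random
from collections import Counter


def nearest_label(query, training):
    """Label of the training point closest to query (brute-force Euclidean)."""
    return min(training, key=lambda item: math.dist(item[1], query))[0]


def evaluate(training, testing, trials=1000, seed=0):
    """Overall accuracy (%) and a tally of (true label, predicted label) pairs."""
    rng = random.Random(seed)
    confusion = Counter()
    for _ in range(trials):
        label, vector = rng.choice(testing)
        confusion[(label, nearest_label(vector, training))] += 1
    correct = sum(n for (true, pred), n in confusion.items() if true == pred)
    return 100.0 * correct / trials, confusion


# Hypothetical toy data: one training point per attack type, a few test variations.
training = [("center", [0.0, 0.0]), ("edge", [1.0, 0.0]), ("rim", [0.0, 5.0])]
testing = [("center", [0.1, 0.1]), ("edge", [0.9, -0.1]),
           ("rim", [0.2, 4.5]), ("edge", [0.4, 0.0])]

accuracy, confusion = evaluate(training, testing, trials=200)
print(f"accuracy: {accuracy:.1f}%")
for (true, pred), n in sorted(confusion.items()):
    print(f"{true} -> {pred}: {n}")
```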

I’ll try doing an MFCC-based version of this next and see if that fares any better, then perhaps try some of that macro-descriptor dimensionality reduction stuff.

I think you misunderstood what I said: if you listen only to the bit that is analysed (which you can’t be doing now, since I hear a lot more than 256 samples), that should give you an intuition of how it analyses. For instance, what you play at 1:10 is very similar by ear… so it means your actual data is not discriminated enough, if you see what I mean?

In other words, you need to listen to only what you ask the machine to listen to. If that is not clearly segregated by way of description and/or listening, you won’t get anything better further down the line.

Not sure I follow.

The analysis/matching happens only on the 256 samples, the 100ms version we are hearing is, from the input data, the entire “real” 100ms, and from the matching version, the composited “predicted” version.

I can listen more to the initial snippet, and matched snippet to see what I’m comparing against (I think this is what you’re saying?).

Those sounds are similar, in that particular example, but different enough that I think the descriptors were able to differentiate.

Today I’m going to try the MFCCs+stats route, as that got me like 80% accuracy with hits as similar sounding as the center and edge of the snare (hits 1 and 2 in this video). So hoping that works better.

if you only use 256 samples, this is what you should listen to. If your ears cannot clearly discriminate, and if your descriptors give you false nearest neighbours, then you have a problem you need to solve. nothing will be good out of that if that is wrongly classified.

if mfccs are better than your ear on 256 samples to match, then there is hope there. don’t forget that the classification of the 1st item can be a datapoint in a second dataset…

I’ll set it up so I can listen to the initial clips of both. I’ll still feed 100ms into the real-time analysis, so it’s not missing any frames at the end if the onset detection fires early (or something). But basically have a bit where I can A/B the 256 sample nuggets.

None of these are classes as such, I just have only 10 entries in the KDTree, so it’s finding the nearest one by default. In the end I’m going to have a wide variety of loads of different entries where the exact match isn’t as important as having a rough idea of the kind of morphology of the sound (even if “wrong”). As in, if the bit it matches can plausibly go to another sample, that’s, perceptually, ok.

I then plan on weighting things so the initial bit matters more in the query, and the predicted bit will weigh less (not presently (easily) possible).
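One generic way to get that kind of weighting out of a plain Euclidean search is to scale each dimension by the square root of its weight, in both the stored entries and the query, before searching. A minimal sketch with made-up 4D points, where the first two dimensions stand for the analysed bit and the last two for the predicted bit. The weights and names are hypothetical, and this says nothing about what the FluCoMa objects support.

```python
import math


def apply_weights(vector, weights):
    """Scale each dimension by sqrt(weight) so Euclidean distance becomes weighted."""
    return [v * math.sqrt(w) for v, w in zip(vector, weights)]


def nearest(query, corpus):
    """Name of the corpus entry closest to query (brute-force Euclidean)."""
    return min(corpus, key=lambda name: math.dist(corpus[name], query))


# Hypothetical entries: [analysed, analysed, predicted, predicted]
corpus = {"A": [1.0, 1.0, 4.0, 4.0],   # close on the analysed bit, far on the predicted bit
          "B": [3.0, 3.0, 0.0, 0.0]}   # the other way round
query = [0.0, 0.0, 0.0, 0.0]
weights = [1.0, 1.0, 0.25, 0.25]       # predicted dimensions count for a quarter

unweighted_match = nearest(query, corpus)
weighted_corpus = {name: apply_weights(vec, weights) for name, vec in corpus.items()}
weighted_match = nearest(apply_weights(query, weights), weighted_corpus)
print(unweighted_match, weighted_match)
```

With equal weights the match is B. Once the predicted dimensions are down-weighted, the match flips to A, the entry that is closer on the analysed part.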

Once I get that far I’ll do some further A/B comparison matching only with the 256 samples, and then matching with 4410 samples (most of it being predicted). To see what sounds better (or more nuanced (or more interesting)).

After some growing pains in adapting the patch, I got it working with MFCCs. With no other fine tuning, literally just dropping in the same mfccs/stats from the JIT-MFCC patch, it’s already worlds more robust.

I get 67.05% accuracy out of the gate (out of a sample of 2000 tests), which is more than double the 32.5% I was getting before.

Speed has gone down some though. It was around 0.58ms or so when running a KDTree with 12 dimensions in it, and up to 1.5ms with the 96dimensional mfcc/stats thing.

I haven’t optimized anything, so it’s possible I’m messy in places with how I’m going between data types. I do remember it being faster than this in the JIT-MFCC patch in context though, but I’ll worry about that later.

edit:

Went and compared the original JIT-MFCC patch, and it is as “slow” as this, coming in at 1.5ms per query. I guess that seemed fast at the time all things considered.

What I want to try next is taking more stats/descriptors and trying some fluid.mds~ on it, to bring it down to a manageable amount. For the purposes of what I’m trying to do here, I think not including a loudness descriptor is probably good, since I wouldn’t have to worry about the limitations of variations in loudness for my initial training set.

The only thing I’m concerned about (which I’ll do some testing for) is the difference in speed between querying a larger dimensional space and applying a dimensionality reduction scheme quickly in real-time. Like is the latter (shrinking dimensions on real-time data from a pre-computed fit) faster than just querying the larger dimensional space in the first place…

//////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

So in effect, my “real world” 0-256 analysis would include:

- (whatever stats work best for actual corpus querying(?))

- (loudness-related analysis for loudness compensation)

- (40melbands for spectral compensation)

- (buttloads of mfccs/stats for kdtree prediction) <---- focus of this thread

So I would use the mfccs/stats to query a fluid.kdtree~, and then pull up the actual descriptors/stats that are good for querying from the relevant fluid.dataset~ to create a composite search entry that may or may not include the mfcc soup itself…

I returned to this testing today, with the realization that I can’t have > 9 dimensions (with my current training set) due to how PCA works.
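The limit comes from the centering step: PCA subtracts the mean from every point, so the centered points always sum to zero and can span at most one direction fewer than there are points. A sketch of the argument, assuming $n$ training points with $d$ features each stacked as the rows of $X$ (here $n = 10$, the 10 entries mentioned earlier):

```latex
\tilde{X} = X - \mathbf{1}\,\bar{x}^{\top}, \qquad
\mathbf{1}^{\top}\tilde{X} = 0
\;\Longrightarrow\;
\operatorname{rank}(\tilde{X}) \le \min(n - 1,\; d)
```

With $n = 10$ that gives at most 9 principal components with non-zero variance, however many descriptors go in. Any component requested beyond that carries no information from the training set.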

I’ve changed up the analysis now too and am taking 25 MFCCs (and leaving out the 0th coefficient), as well as two derivatives. So an initial fluid.dataset~ with 196 dimensions in it. So a nice chunky one…

I tested seeing how small I can get things and have OK matching.

196d → 2d = 28.9%

196d → 5d = 41.9%

196d → 8d = 44.1%

196d → 9d = 54.0%

Not quite the 67.05% I was getting with a 12d reduction (which is surprising as a couple of those dimensions would be dogshit based on the pseudo-bug of requesting more dimensions than points).

I also made another training set with 120 points in it, but I can’t as easily verify the validity of the matching since it’s essentially a whole set of different sounding attacks on the snare. So I’ll go back and create a new set of training and testing data where I have something like 20 discrete hits in it, so I can test PCA going up to that many dimensions.

I’ll also investigate to see what I did to get that 67% accuracy above, to see if I can build off that. But having more MFCCs and derivs seems promising at the moment, particularly if I can squeeze the shit out of it with PCA.

edit:

It turns out I got 67.05% when running the matching with no dimensionality reduction at all. If I do the same with the 196d variant (25MFCC + 2derivs) I get 73.9% matching out of the gate. So sans reduction, this is working better.

Well, at least without so much reduction. If you had more fitting data to try, you might find a sweet spot with a less drastic amount of reduction where the PCA is removing redundancy / noise but not stuff that’s useful for discrimination.

Yeah that’s what I’m aiming to do at the moment. I made a list of 30 repeatable sounds (as in, mallet in center, needle on rim, etc…), so I can train it on that, then I’ll create a larger testing set with 5 of each hit and run the same kinds of tests, to see if I get better results going up to 30d (if so, I’ll try higher).

Also going to isolate and test the raw matching power (unreduced) of 20mfccs 1deriv, 25mfccs 1 deriv, 20mfccs 2deriv, 25mfcc 2derivs, to see what along the way made it better, as to not fill it with more shit if it’s not helpful.