And today’s experiments have been with dimensionality reduction, and with a larger training/testing data set.

Right off the bat, I was surprised to discover that my overall matching accuracy went down with the larger training set. I also noticed that some of the hits (soft mallet) failed to trigger the onset detection algorithm used for the comparisons, so after 1000 testing hits I’d often only end up with around 960 tests, and I would just “top it up” until I got the right amount. It’s possible that skewed the data a little bit, but it was consistent across the board, so if nothing else, this should serve as a useful relative measure of accuracy between all the variables below.

I should mention at this point that even though the numerical accuracy has gone down, if I check and listen to the composite sounds it assembles, they are plausible, which is functionally the most important thing here.
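The “top it up” step described above amounts to something like the loop below. This is a hypothetical Python sketch, not the actual Max patch: `draw_hit` and `detect_onset` are made-up stand-ins for playing a test hit and running onset detection on it.

```python
import itertools
from typing import Callable, List, Tuple


def collect_tests(
    draw_hit: Callable[[], int],
    detect_onset: Callable[[int], bool],
    target: int = 1000,
    max_draws: int = 100_000,
) -> Tuple[List[int], int]:
    """Keep drawing test hits until `target` of them trigger onset detection.

    Hypothetical helper: hits that fail detection are skipped, and extra
    hits are drawn to "top up" the test set to the target size.
    """
    tests: List[int] = []
    draws = 0
    while len(tests) < target:
        if draws >= max_draws:
            raise RuntimeError("too many hits failed onset detection")
        hit = draw_hit()
        draws += 1
        if detect_onset(hit):
            tests.append(hit)
    return tests, draws


# Toy example: hits are just integers, and every 25th one "misses" (~4%).
counter = itertools.count()
tests, draws = collect_tests(lambda: next(counter), lambda h: h % 25 != 0)
print(len(tests), draws)  # 1000 1042
```

One side effect worth keeping in mind: since only detected hits are kept, the quieter soft-mallet hits end up slightly under-represented in the final test set, which is the possible skew mentioned above.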

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

So I did some plain vanilla matching as above, but with the larger training set, just to get a baseline. That gave me this:

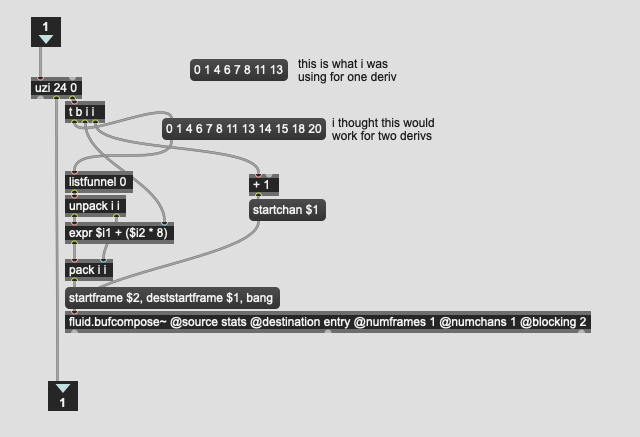

20MFCCs with 1 derivative: 44.1% / 45.5% / 46.5% = 45.37% (152d)

20MFCCs with 2 derivatives: 42.5% / 47.1% / 44.0% = 44.53% (228d)

(the figure in parentheses at the end is the number of dimensions needed to get that accuracy)
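For reference, the averages and dimension counts here (and in the mean/std versions below) can be checked with a bit of arithmetic. The dimension counts all work out as coefficients × statistics × (derivatives + 1) with 19 coefficients per frame rather than 20, which would fit, for example, dropping the 0th MFCC. That 19 is an inference from the numbers, not a documented setting, and the helper names are hypothetical.

```python
def feature_dims(n_coeffs: int, n_stats: int, n_derivs: int) -> int:
    """Length of the summary vector: every statistic for every coefficient,
    computed on the raw frames plus each derivative."""
    return n_coeffs * n_stats * (n_derivs + 1)


def mean_of_runs(runs: list[float]) -> float:
    """Average of the repeated test runs, rounded to two decimals."""
    return round(sum(runs) / len(runs), 2)


# Assumption: 19 coefficients per frame (e.g. 20 MFCCs minus the 0th).
print(feature_dims(19, 4, 1), feature_dims(19, 4, 2))  # 152 228 (mean/std/min/max)
print(feature_dims(19, 2, 0), feature_dims(19, 2, 1))  # 38 76 (mean/std)
print(mean_of_runs([44.1, 45.5, 46.5]))  # 45.37
```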

I also re-ran the configuration that previously gave me good (but not the best) results, taking only the mean and standard deviation (whereas the versions above also include min and max).

That gives me this:

20MFCCs with 0 derivatives: 52.2% / 53.0% / 53.5% = 52.90% (38d)

20MFCCs with 1 derivative: 54.5% / 53.0% / 52.5% = 53.33% (76d)

What’s interesting here is that for raw matching power (sans dimensionality reduction), I actually get better results with only the mean and std. Previously this was close, but the larger set of statistics (and dimensions) came out ahead.
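To make the two summary variants concrete, here’s a minimal numpy sketch of collapsing one hit’s MFCC frames into a single vector. It assumes the derivative is a simple frame-to-frame difference (`np.diff`), which may not match exactly what the FluCoMa stats objects compute, and `summarise` is a hypothetical helper name.

```python
import numpy as np

STAT_FUNCS = {"mean": np.mean, "std": np.std, "min": np.min, "max": np.max}


def summarise(frames: np.ndarray, n_derivs: int = 0,
              stats: tuple = ("mean", "std")) -> np.ndarray:
    """Collapse an (n_frames, n_coeffs) MFCC matrix into one flat vector.

    Each requested statistic is taken per coefficient over time, first on
    the raw frames and then on each successive derivative.
    """
    layers = [frames]
    for _ in range(n_derivs):
        # Assumption: derivative approximated by frame-to-frame difference.
        layers.append(np.diff(layers[-1], axis=0))
    parts = [STAT_FUNCS[s](layer, axis=0) for layer in layers for s in stats]
    return np.concatenate(parts)


rng = np.random.default_rng(0)
hit = rng.normal(size=(12, 19))  # 12 analysis frames x 19 coefficients (made up)
print(summarise(hit).shape)                                    # (38,)
print(summarise(hit, 1, ("mean", "std", "min", "max")).shape)  # (152,)
```

Dropping min and max halves the vector length for the same number of derivatives, which is part of why the mean/std version is attractive on speed grounds.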

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

What I did next was test these variations with different amounts of PCA-ification. This can go a lot of different ways, so I compared all of them with heavy reduction (8d) and medium reduction (20d) to see how they fared, relatively. (Granted, each version starts with a different number of dimensions, but I wanted a semi-even comparison, and given my training set, I can only go up to 33d anyway.)

As before, here are the versions that take four stats per derivative (mean, std, min, max):

20MFCCs with 1 derivative: 22.5% (8d)

20MFCCs with 1 derivative: 23.1% (20d)

20MFCCs with 2 derivatives: 26.5% (8d)

20MFCCs with 2 derivatives: 27.7% (20d)

I then compared the versions with only mean and std:

20MFCCs with 0 derivatives: 28.5% (8d)

20MFCCs with 0 derivatives: 26.2% (20d)

20MFCCs with 1 derivative: 25.5% (8d)

20MFCCs with 1 derivative: 23.0% (20d)

20MFCCs with 1 derivative: 30.0% (33d)

Even in the best-case scenario, going from 38d down to 20d, I get a pretty significant drop in accuracy (52.9% to 26.2%, roughly half).
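The PCA tests above roughly amount to: fit PCA on the training set, project both training and testing points down to k dimensions, then count how often a test hit’s nearest neighbour is its correct match. Below is a minimal numpy sketch under those assumptions; numpy’s SVD and a brute-force nearest-neighbour search stand in for the FluCoMa objects, and `fit_pca`, `project` and `nn_accuracy` are hypothetical names. It also shows one plausible reason for a ceiling like the 33d mentioned above: after centring, n training points span at most n − 1 dimensions, so PCA can’t produce more meaningful components than that (whether that is the exact cause here is an assumption).

```python
import numpy as np


def fit_pca(train: np.ndarray, k: int):
    """Return the training mean and the top-k principal axes (k x n_features)."""
    n_samples, n_features = train.shape
    # Centred data has rank <= n_samples - 1, so more components are meaningless.
    max_k = min(n_samples - 1, n_features)
    if not 1 <= k <= max_k:
        raise ValueError(f"k must be between 1 and {max_k}")
    mean = train.mean(axis=0)
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    return mean, vt[:k]


def project(x: np.ndarray, mean: np.ndarray, axes: np.ndarray) -> np.ndarray:
    """Map points into the reduced k-dimensional space."""
    return (x - mean) @ axes.T


def nn_accuracy(train_x, train_labels, test_x, test_labels) -> float:
    """Fraction of test points whose nearest training point has the right label."""
    dists = ((test_x[:, None, :] - train_x[None, :, :]) ** 2).sum(axis=-1)
    predicted = train_labels[np.argmin(dists, axis=1)]
    return float(np.mean(predicted == test_labels))


# Made-up data: 34 training hits in 38d; test hits are noisy copies of them.
rng = np.random.default_rng(1)
train = rng.normal(size=(34, 38))
labels = np.arange(34)
test = train + rng.normal(scale=0.5, size=train.shape)

print("raw:", nn_accuracy(train, labels, test, labels))
for k in (8, 20, 33):
    mean, axes = fit_pca(train, k)
    acc = nn_accuracy(project(train, mean, axes), labels,
                      project(test, mean, axes), labels)
    print(f"{k}d:", acc)
```

One general caveat that may bear on the drop seen here: PCA keeps the directions with the most variance across the whole training set, which aren’t necessarily the directions that best tell individual hits apart.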

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

So with all of this in mind, the best overall accuracy, taking speed into consideration, comes from taking only the mean and std of 20MFCCs with zero derivatives, which gives me 72.0% with the smaller data set and 52.90% with the larger data set, using only 38 dimensions.

I was hoping to see if I could smoosh that a little, but it appears that the accuracy suffers greatly. I wonder if this says more about my tiny analysis windows, the sound sources + descriptors used, or the PCA algorithm in general.

My takeaway from all of this: if accuracy is important, get the best accuracy with the lowest number of dimensions possible on the front end, rather than gobbling up everything and hoping dimensionality reduction (PCA at least) will make sense of it for you.

For a use case where it’s more about refactoring data (i.e. for a 2d plot, or a navigable space à la what @spluta is doing with his joystick), it doesn’t matter as much, but for straight-up point-for-point accuracy, the reduction stuff shits the bed.

(if/when we get other algorithms that let you transformpoint I’ll test those out, and same goes for fluid.mlpregressor~ if it becomes significantly faster, but for now, I will take my data how I take my chicken… raw and uncooked)

That said, I’m happy to test some other permutations, or any insights others might have.
