I’ve been thinking about envelopes and morphology a bunch lately. For one, trying to generate more temporal/morphological information from onsets and short attacks, but also how to better capture or analyze that information.

A while back I was looking at linear regression as a better summary statistic, which I quite like, particularly since the formula also spits out an r² value, which tells you how well a straight line actually fits the data and so works as a kind of confidence in the slope. So I feel like it gives you a derivative-esque measure of contour, but also how much that is the case.
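To make that concrete, here is a minimal sketch of the slope-plus-r² summary in plain Python. It assumes evenly spaced analysis frames (so the x axis is just the frame index) and at least two frames; the function name `slope_and_r2` is made up for illustration.

```python
def slope_and_r2(values):
    """Fit a least-squares line to (frame index, value) pairs.

    Returns (slope, r2). Assumes evenly spaced frames and len(values) >= 2.
    """
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    # Sums of squares and cross-products around the means
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    syy = sum((y - mean_y) ** 2 for y in values)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    # r2 is the squared correlation; a flat contour has no variance to explain
    r2 = (sxy * sxy) / (sxx * syy) if syy > 0 else 0.0
    return slope, r2


rising = slope_and_r2([0.0, 1.0, 2.0, 3.0])        # steady rise, line fits well
zigzag = slope_and_r2([0.0, 1.0, 0.0, 1.0, 0.0])   # no overall trend
```

A steady ramp gives a clear slope with a high r², while a zigzag gives a slope near zero with a low r², which is the "how much is that the case" part.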

Either way, these are summary statistics.

What I’m thinking about, and asking here, is if there’s a way to represent the envelope or contour that 1) can be some kind of summary and 2) can be queried in a variety of states.

By 1) I mean, not having the information for every single frame. For a low number of analysis frames, that’s fine, but if it’s a longer sample, having hundreds (or more) of frames, per sample, starts getting unwieldy.

By 2) I mean being able to query based on transformations of that contour.

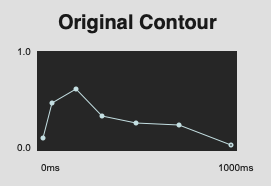

Here’s some visual reference as to what I mean.

Say I have some kind of contour (be it loudness, centroid, whatever) that looks like this:

So I’m after a way to represent that which isn’t a list of 7 numbers (or, more likely, 7 x/y pairs), both for efficiency and so that it’s robust to transformations.

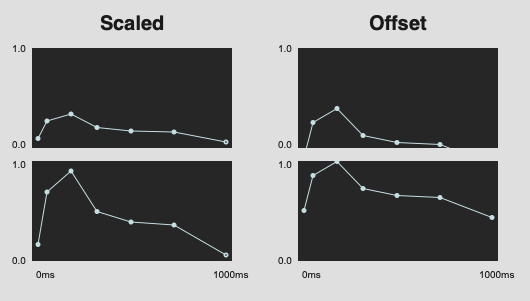

For example, if I wanted to have this contour match highly things that look like this:

So the individual values would be different, but the overall gesture or contour is “the same”. For the offset stuff, it would be easy to just store delta values, and then it doesn’t matter where in the overall space it falls. I guess it’s more complicated for scaled versions.
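One common way to cover both the offset and the scaled cases is min-max normalisation: subtract the minimum and divide by the range, so every contour lands in 0 to 1. This is only a sketch of that idea, not a claim that it’s the right choice here; the name `normalise_contour` is hypothetical, and it won’t treat an inverted (flipped) contour as a match.

```python
def normalise_contour(values):
    """Rescale a contour to the 0..1 range so offset and scale drop out."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # A completely flat contour has no shape to preserve
        return [0.0] * len(values)
    return [(v - lo) / span for v in values]


original = [1.0, 3.0, 2.0, 5.0]
shifted_and_scaled = [v * 2 + 10 for v in original]  # same gesture, different place and size

a = normalise_contour(original)
b = normalise_contour(shifted_and_scaled)
```

Here `a` and `b` come out the same, so any distance measure between them treats the two contours as one gesture. Min-max is sensitive to a single extreme frame, so subtracting the mean and dividing by the standard deviation would be a reasonable alternative.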

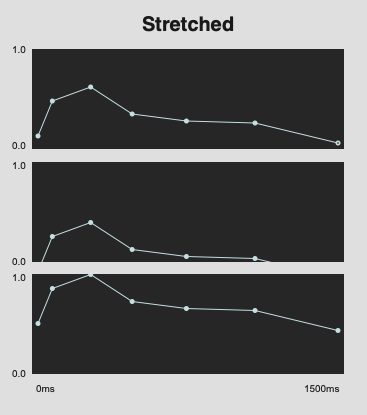

But what I find more interesting here is to have this be applicable to difference scales of time:

Here, I’ve literally just dragged the function out to make it appear longer, but this would potentially be the same kind of contour made up of a different number of individual points.
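A simple way to get at this is to resample every contour to the same small number of points, so a 7-frame contour and a 700-frame contour end up as lists of the same length. The sketch below uses plain linear interpolation; `resample_contour` and the default of 8 points are assumptions for illustration, and it expects `n_points` to be at least 2.

```python
def resample_contour(values, n_points=8):
    """Linearly resample a contour to n_points evenly spaced positions.

    Assumes n_points >= 2 and at least one input value.
    """
    last = len(values) - 1
    out = []
    for i in range(n_points):
        # Fractional position in the original contour for output point i
        pos = i * last / (n_points - 1)
        lo = int(pos)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append(values[lo] * (1 - frac) + values[hi] * frac)
    return out


short = [0.0, 2.0, 1.0]
stretched = [0.0, 1.0, 2.0, 1.5, 1.0]  # the same shape dragged out over more frames

short_fixed = resample_contour(short, 3)
stretched_fixed = resample_contour(stretched, 3)
```

Both results describe the same three-point shape, regardless of how many frames went in. Uniform stretching is the easy case; if the timing inside the gesture varies (a slow attack and fast decay vs the opposite), something like dynamic time warping would be the more usual tool.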

The overall idea here would be that I can have any given contour/envelope and query via that as an initial way to cut down on the overall corpus/dataset, and then match for specific ranges/values after that. This way I could match via contour or gesture in a manner that is divorced from duration and temporality. A way to side-step my “short analysis window and long samples to playback” problem.
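As a rough sketch of that two-stage query: assume each corpus entry already stores a fixed-length, normalised contour plus one absolute value to range-match on afterwards. The dictionary keys `"contour"` and `"mean"`, the function name, and the thresholds are all made up for illustration.

```python
import math


def two_stage_query(query_contour, corpus, max_contour_distance, value_range):
    """Shortlist by contour shape first, then filter by an absolute value range.

    corpus maps a sample name to {"contour": [...], "mean": float}, where every
    contour has the same length as query_contour.
    """
    low, high = value_range
    # Stage 1: keep only entries whose contour is close to the query's shape
    shortlist = []
    for name, entry in corpus.items():
        distance = math.dist(query_contour, entry["contour"])
        if distance <= max_contour_distance:
            shortlist.append((distance, name))
    # Stage 2: among those, keep entries whose actual level falls in range
    return [name for _, name in sorted(shortlist)
            if low <= corpus[name]["mean"] <= high]


corpus = {
    "quiet_swell": {"contour": [0.0, 0.5, 1.0, 0.5, 0.0], "mean": -20.0},
    "loud_swell": {"contour": [0.0, 0.5, 1.0, 0.5, 0.0], "mean": -6.0},
    "loud_decay": {"contour": [1.0, 0.75, 0.5, 0.25, 0.0], "mean": -6.0},
}
matches = two_stage_query([0.0, 0.5, 1.0, 0.5, 0.0], corpus, 0.5, (-10.0, 0.0))
```

In this toy corpus only `loud_swell` survives both stages: the decay fails on shape and the quiet swell fails on level.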

Metaphor-wise, it also makes sense to me in a vector vs raster way: I’d like to have vector representations of the contours that I can query with, divorced from their per-pixel/per-sample counterparts.

Is this a thing?